Keith D. Martin - kdm@media.mit.edu

Youngmoo E. Kim

The Machine Listening Group at the MIT Media Lab

MIT Room E15-401, 20 Ames Street

Cambridge, MA 02139

Popular version of paper 2pMU9

Presented Tuesday afternoon, October 13, 1998

136th ASA Meeting, Norfolk, VA

People are skilled at identifying sounds. We can close our eyes and recognize the phone ringing, water running in the sink, or a friend talking. Many people can listen to a piece of music and name the instruments that are playing. Of course, this ability varies greatly with experience, and performance improves with training, but the ability to identify sounds comes so easily and is so much a part of everyday life that it is hard for us to think about how we do it.

In contrast, computers do not understand sound at all. Although they can store sound recordings in memory, they cannot tell us anything meaningful about a recording's contents. If we could somehow teach computers how to recognize sounds, they could interact with us more intelligently. For example, they could turn down the stereo when they hear the phone ring or someone knock at the door. If they understood something about music, they could perhaps help us find songs we like on the radio or act as inordinately patient instructors and accompanists for music students. Teaching computers to recognize musical instruments is one step toward that goal. To reach it, we must first understand what the important properties of musical sounds are: what makes a violin sound like a violin?

There is a long history of research on musical instrument sounds. Well over one hundred years ago, the great scientist Helmholtz studied musical tones created by air sirens and pipe organs. He attempted to form a theory about musical sound perception by focusing on the "steady" portion of the sound, after the attack and before the decay, where a sound's properties can be characterized most simply. More recently, it has become apparent that the way sounds change over time is extremely important and that there is no simple list of properties that determine "what something sounds like". Instead, there are many important properties, and different sets of properties are important for different sounds.

Our approach has been to model what we know about human hearing. We have given the computer access to several properties that we know people use to recognize sounds. For example, the computer determines the pitch of an instrument sound and can rule out the tuba if the pitch is too high -- or the piccolo if it is too low. The computer is also sensitive to loudness, brightness, and many sound properties that do not have simple names.
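The pitch-based rule-out described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' system: it assumes the pitch has already been estimated in hertz, and the hypothetical `PITCH_RANGES_HZ` table holds rough, approximate sounding ranges chosen only for the example.

```python
# Hypothetical, approximate sounding pitch ranges in Hz (illustrative values,
# not taken from the paper).
PITCH_RANGES_HZ = {
    "tuba": (37.0, 350.0),
    "cello": (65.0, 1050.0),
    "violin": (196.0, 3520.0),
    "piccolo": (587.0, 4190.0),
}


def plausible_instruments(f0_hz: float, ranges=PITCH_RANGES_HZ) -> list[str]:
    """Return, sorted by name, the instruments whose range contains f0_hz.

    Instruments whose range excludes the estimated pitch are ruled out.
    """
    return sorted(name for name, (lo, hi) in ranges.items() if lo <= f0_hz <= hi)


if __name__ == "__main__":
    print(plausible_instruments(1000.0))  # tuba ruled out: pitch too high
    print(plausible_instruments(60.0))    # piccolo ruled out: pitch too low
```

A range check like this only narrows the candidate list; the remaining instruments still have to be separated using other properties such as loudness and brightness.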

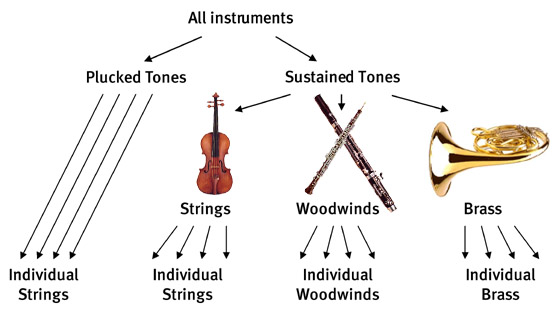

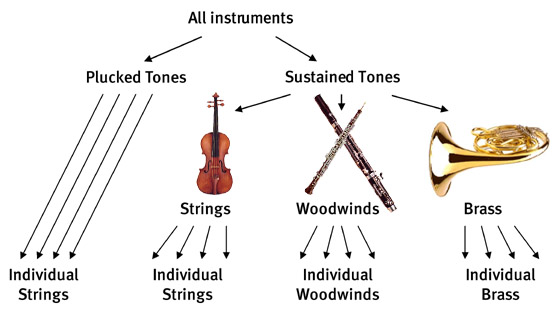

Scientists and philosophers often describe objects in the world as members of taxonomies. The most obvious of these are zoological: Spot is a Dalmatian, which is a kind of dog, which is a kind of mammal, which is a kind of animal, which is a kind of object in the world. When we recognize an object, we do so first at an intermediate level of the taxonomy; we recognize that the object is a dog before we can distinguish its breed or its particular identity. We believe that something similar happens when we recognize a musical instrument. We can tell that an instrument belongs to the string family before we can tell whether it is a violin or a viola. It is easier to distinguish a brass instrument from a woodwind instrument than it is to tell an alto from a tenor saxophone.
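A taxonomy like this can be stored as a simple tree. The sketch below is hypothetical: the `PARENT` table is a small simplified tree of our own, not the one used in the study, and it only shows how each instrument sits under a family, so that two saxophones share a family while a trumpet and a clarinet do not.

```python
# Hypothetical, simplified taxonomy stored as child -> parent links
# (not the exact tree used in the paper).
PARENT = {
    "strings": "instrument", "brass": "instrument", "woodwinds": "instrument",
    "violin": "strings", "viola": "strings",
    "trumpet": "brass", "tuba": "brass",
    "alto saxophone": "woodwinds", "tenor saxophone": "woodwinds",
    "clarinet": "woodwinds",
}


def lineage(node: str) -> list[str]:
    """Return the chain of categories from `node` up to the root."""
    chain = [node]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def same_family(a: str, b: str) -> bool:
    """True if two instruments hang from the same family node."""
    return PARENT[a] == PARENT[b]


if __name__ == "__main__":
    print(lineage("viola"))                                  # viola -> strings -> instrument
    print(same_family("alto saxophone", "tenor saxophone"))  # same family
    print(same_family("trumpet", "clarinet"))                # different families
```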

Our computer system measures approximately 30 sound properties that are likely to distinguish among the instrument sounds, and it uses statistical techniques to make a decision at each branch of the taxonomy. It learns from examples, discovering which features are most useful for each kind of classification. Like us, it recognizes the instrument family before it recognizes the particular instrument.
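The paper does not specify which statistical techniques are used, so the following is only a sketch of the general idea under stated assumptions. At each branch, a hypothetical `NodeClassifier` (a diagonal Gaussian classifier, a swapped-in technique) picks the child category, using only the `k` features with the highest Fisher ratio at that branch as a stand-in for "discovering which features are most useful." The three-feature toy data are synthetic, not real measurements, and every child node needs at least one training example.

```python
import math
from statistics import fmean, pvariance

# Hypothetical two-level taxonomy: family first, then instrument.
TAXONOMY = {
    "instrument": ["strings", "brass"],
    "strings": ["violin", "cello"],
    "brass": ["trumpet", "tuba"],
}


def leaves_under(node, taxonomy):
    """Return the set of instrument labels (leaves) below `node`."""
    children = taxonomy.get(node)
    if not children:
        return {node}
    out = set()
    for child in children:
        out |= leaves_under(child, taxonomy)
    return out


class NodeClassifier:
    """Diagonal-Gaussian classifier that chooses among one node's children,
    using only the k features with the highest Fisher ratio at this node."""

    def __init__(self, examples_by_child, k):
        dims = len(next(iter(examples_by_child.values()))[0])
        self.stats = {}
        for child, vecs in examples_by_child.items():
            means = [fmean(v[d] for v in vecs) for d in range(dims)]
            # Small constant keeps variances positive for tiny training sets.
            variances = [pvariance([v[d] for v in vecs]) + 1e-6 for d in range(dims)]
            self.stats[child] = (means, variances)

        def fisher(d):
            # Spread of the class means divided by the average within-class variance.
            mus = [m[d] for m, _ in self.stats.values()]
            within = fmean(var[d] for _, var in self.stats.values())
            return pvariance(mus) / within

        self.features = sorted(range(dims), key=fisher, reverse=True)[:k]

    def predict(self, x):
        def loglik(child):
            means, variances = self.stats[child]
            return sum(
                -0.5 * (math.log(2 * math.pi * variances[d])
                        + (x[d] - means[d]) ** 2 / variances[d])
                for d in self.features
            )
        return max(self.stats, key=loglik)


def train(examples, taxonomy, k=2):
    """Fit one classifier per internal node from (features, instrument) pairs."""
    models = {}
    for node, children in taxonomy.items():
        by_child = {
            child: [x for x, label in examples if label in leaves_under(child, taxonomy)]
            for child in children
        }
        models[node] = NodeClassifier(by_child, k)
    return models


def classify(x, models, root="instrument"):
    """Descend from the root, deciding the family before the instrument."""
    path = [root]
    while path[-1] in models:
        path.append(models[path[-1]].predict(x))
    return path


# Synthetic 3-feature examples (hypothetical stand-ins for the ~30 real features).
EXAMPLES = [
    ([0.80, 0.70, 0.10], "violin"), ([0.85, 0.75, 0.12], "violin"),
    ([0.30, 0.50, 0.10], "cello"), ([0.35, 0.55, 0.08], "cello"),
    ([0.70, 0.90, 0.50], "trumpet"), ([0.75, 0.95, 0.55], "trumpet"),
    ([0.10, 0.30, 0.50], "tuba"), ([0.15, 0.35, 0.45], "tuba"),
]
models = train(EXAMPLES, TAXONOMY)

if __name__ == "__main__":
    print(classify([0.82, 0.72, 0.11], models))
    print(classify([0.12, 0.32, 0.48], models))
```

In this toy data the family decision relies mostly on the third feature, while the string-instrument decision relies on the first two, which illustrates how different branches can favor different features.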

The results so far have been impressive. Given new notes from the 15 orchestral instruments it has learned to recognize, it identifies the instrument correctly approximately 70% of the time. Even more impressive, it identifies the instrument family correctly nearly 90% of the time. Like many humans, it confuses the violin and viola more often than it confuses the clarinet and trumpet or the cello and oboe. This performance is as good as that of many people, but skilled musicians still outperform the computer at this narrowly defined task. Of course, in the more general case of recognizing sounds in the environment, an average three-year-old still does better than any computer system we know how to build.
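Family-level accuracy is never lower than instrument-level accuracy, because every correct instrument label is also a correct family label, and a within-family mix-up such as violin for viola still counts as a correct family. The sketch below shows the arithmetic on a handful of made-up predictions; the hypothetical `FAMILY` table and `PAIRS` list are for illustration only and are not the paper's results.

```python
# Hypothetical instrument -> family table and made-up (true, predicted) pairs.
FAMILY = {
    "violin": "strings", "viola": "strings", "cello": "strings",
    "trumpet": "brass", "clarinet": "woodwinds", "oboe": "woodwinds",
}

PAIRS = [
    ("violin", "viola"),      # wrong instrument, right family
    ("viola", "viola"),
    ("cello", "cello"),
    ("trumpet", "trumpet"),
    ("clarinet", "trumpet"),  # wrong instrument, wrong family
]


def accuracies(pairs):
    """Return (instrument accuracy, family accuracy) for (true, predicted) pairs."""
    n = len(pairs)
    instrument = sum(t == p for t, p in pairs) / n
    family = sum(FAMILY[t] == FAMILY[p] for t, p in pairs) / n
    return instrument, family


if __name__ == "__main__":
    print(accuracies(PAIRS))  # (0.6, 0.8)
```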