Frank H. Guenther - guenther@cns.bu.edu

Satrajit S. Ghosh

Alfonso Nieto-Castanon

Jason A. Tourville

Jason W. Bohland

Department of Cognitive and Neural Systems

Boston University

677 Beacon Street

Boston, MA 02215

http://cns-web.bu.edu/~guenther

Popular version of paper 2pSC3

Presented Tuesday afternoon, June 4, 2002

143rd ASA Meeting, Pittsburgh, PA

The brain's ability to sort sensory inputs into behaviorally relevant categories is a central aspect of human experience. With vision, for example, we interpret the patterns of light on our retinas in terms of discrete items such as people, chairs, bicycles, and dogs, rather than seeing a continuously changing muddle of light. During spoken conversation, we effortlessly interpret a continuously varying acoustic signal in terms of discrete speech sounds such as words, syllables, and phonemes (consonants and vowels).

In this paper we describe the results of functional brain imaging experiments that help clarify how the brain processes sounds in a categorical fashion. Proper categorization of speech sounds is crucial to spoken communication since incorrectly categorized phonemes can completely change the meanings of words and sentences. For example, if we incorrectly hear the vowel "ee" (as in "beet") rather than the vowel "i" (as in "bit") when someone is saying the word "bit", we will misinterpret the word as "beet" or "beat" and we will likely fail to understand the speaker's message. Such a mistake is possible because the vowels "ee" and "i" are acoustically quite similar to each other -- in other words, they occupy neighboring regions of acoustic space -- and speakers often produce non-ideal versions of these sounds that fall near the boundary between the categories in acoustic space.

Our ability to properly categorize sensory signals is aided by the fact that our perceptual systems provide a warped representation of the physical world. For example, it is more difficult to perceive the difference between two examples of the vowel "ee" if they both fall near the center of the "ee" category in acoustic space (that is, if they are both very good examples of "ee") than if they both fall near the boundary between the "i" and "ee" categories. The more general observation that we are perceptually more sensitive to between-category differences than within-category differences is often termed "categorical perception" and has been reported for a variety of acoustic and visual stimuli.

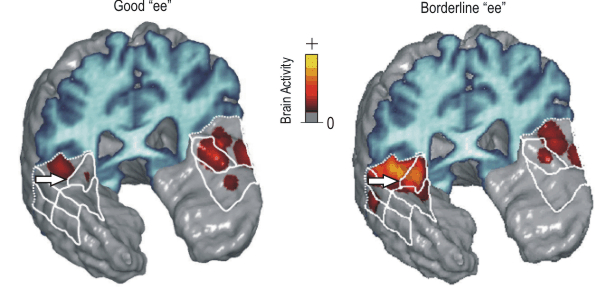

In our first experiment, we used functional magnetic resonance imaging (fMRI) to measure neural activity in the auditory cortical areas of the brain while human subjects listened to either a good example of "ee" or a borderline example of "ee". The figures below show brain activity in the auditory cortical areas while listening to these sounds. The frontal lobe of the brain has been removed in the figures to expose the auditory cortical areas (designated by white boundaries in the figure), which lie on the upper surface of the temporal lobes. We found less activation in the auditory cortical areas when subjects heard the good "ee" (left panel) than when they heard the borderline "ee" (right panel), particularly in and around the primary auditory cortex of the right hemisphere (white arrows). This result indicates that our brains devote more neural resources to processing ambiguous sounds than sounds that are clear examples of a sound category.

In a second experiment, we measured brain activity while subjects listened to non-speech sounds before and after they underwent one week of training with the sounds. In the training task, the subjects had to learn a new "category" of sounds, arbitrarily defined as sounds from a particular frequency range. After learning the new sound category, decreased brain activation was seen for sounds from the center of the category, as was the case for the vowel category in Experiment 1. The week of categorization training also caused the subjects to become worse at discriminating sounds from the center of the newly learned category; that is, they exhibited categorical perception after training.

We have formulated a neural network model, implemented as a computer simulation, that accounts for these results. According to the model, categorization training leads to a decrease in the number of auditory cortical cells that become active when a central, or prototypical, example of a behaviorally relevant sound category is heard. For example, when infants are exposed to the sounds of their native language, more cells in their auditory cortex become devoted to sounds that fall between phoneme categories than to sounds that fall near the center of a phoneme category. The result of a computer simulation of the model after it is trained with examples of the American English phonemes /r/ and /l/ is shown in the left panel below. This simulation approximates the auditory cortex of an American infant after he/she hears many examples of the sounds /r/ and /l/. The x and y axes of the plots represent physical dimensions of acoustic space (that is, prominent sound frequencies of the acoustic signal), and the vertical axis represents the number of cells in the auditory cortical map for each part of the acoustic space (that is, the size of the cortical representation for each part of acoustic space). After exposure to English speech sounds, there are "holes" with relatively few cells in the auditory cortical representation (indicated by the blue valleys in the plot) for the parts of acoustic space corresponding to prototypical /r/ and /l/ sounds, and there are peaks in between the two sound categories. Since perceptual discriminability is generally better for stimuli with larger cortical representations, these holes lead to poor discriminability of sounds that fall near the center of a learned category.
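The shape of the cortical map described above can be illustrated with a toy calculation. The sketch below is not the authors' neural network simulation. It simply assumes a one-dimensional acoustic axis in arbitrary units, hypothetical category centers for /r/ and /l/, and a Gaussian-shaped dip in cell count around each center. The function name `cell_density` and every parameter value are invented for illustration.

```python
import math

# Hypothetical category centers on a one-dimensional acoustic axis
# (arbitrary units; the plots in the text use two acoustic dimensions).
ENGLISH_CENTERS = {"/r/": -2.0, "/l/": 2.0}

def cell_density(x, centers, baseline=100.0, depth=0.8, width=1.0):
    """Toy count of cortical cells tuned to acoustic position x.

    Assumes each learned category carves a Gaussian-shaped "hole"
    of relative depth `depth` around its center.
    """
    dip = sum(math.exp(-((x - c) ** 2) / (2.0 * width ** 2)) for c in centers)
    # Cap the combined dip so the density never drops below baseline * (1 - depth).
    return baseline * (1.0 - depth * min(dip, 1.0))

centers = list(ENGLISH_CENTERS.values())
for label, x in [("center of /r/", -2.0), ("boundary", 0.0), ("center of /l/", 2.0)]:
    print(f"{label:>14}: {cell_density(x, centers):6.1f} cells")
```

With these assumed values, the boundary between the two categories retains more cells than either category center, which is the pattern of valleys and peaks the text describes.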

When the model is trained with Japanese speech sounds instead of English speech sounds (thus approximating the auditory cortex of a native speaker of Japanese), only a single valley develops in the auditory cortical map (right panel above). This is because Japanese has only one phoneme, /r/, that falls in the same part of acoustic space as the two American English phonemes /r/ and /l/. The model thus helps clarify why a Japanese individual who learns English late in life has difficulty distinguishing between the English phonemes /r/ and /l/: the English sounds both fall into the same hole in his/her auditory cortical map, where there are too few cells to accurately distinguish the sounds from each other.
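A toy calculation can make this argument concrete. The sketch below is an illustration under stated assumptions, not the authors' model. It treats the discriminability of two sounds as proportional to the number of cells lying between them on a one-dimensional acoustic axis. It places hypothetical valleys at the English /r/ and /l/ centers for the English map and one broader valley spanning both sounds for the Japanese map. All names and numbers are invented for illustration.

```python
import math

def density(x, valleys, baseline=100.0, depth=0.8):
    """Toy cell count per unit of acoustic axis at x.

    `valleys` is a list of (center, width) pairs, one per learned category.
    """
    dip = sum(math.exp(-((x - c) ** 2) / (2.0 * w ** 2)) for c, w in valleys)
    return baseline * (1.0 - depth * min(dip, 1.0))

def cells_between(a, b, valleys, steps=1000):
    """Approximate the number of cells between sounds a and b (midpoint rule)."""
    dx = (b - a) / steps
    return sum(density(a + (i + 0.5) * dx, valleys) for i in range(steps)) * dx

ENGLISH_MAP = [(-2.0, 1.0), (2.0, 1.0)]  # separate /r/ and /l/ valleys (assumed)
JAPANESE_MAP = [(0.0, 3.0)]              # one broad valley (assumed)

# The same pair of English sounds, represented in each map.
r_sound, l_sound = -2.0, 2.0
english = cells_between(r_sound, l_sound, ENGLISH_MAP)
japanese = cells_between(r_sound, l_sound, JAPANESE_MAP)
print(f"English map:  {english:.0f} cells between /r/ and /l/")
print(f"Japanese map: {japanese:.0f} cells between /r/ and /l/")
```

Under these assumptions, fewer cells separate the two English sounds in the single-valley map than in the two-valley map, which mirrors the explanation given for why the sounds are harder to tell apart.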

Our experimental results indicate that the brain efficiently allocates cells in its auditory cortical areas based on behavioral considerations. Since it is not very important behaviorally to distinguish between sounds that belong to the same category, brain cells originally devoted to these sounds are re-allocated to represent parts of acoustic space where distinguishing between sounds is more important. The holes in the auditory cortical map that are left behind thus allow better between-category discrimination and better parsing of continuous acoustic signals into phonemes and other behaviorally relevant sound categories.