Keith R. Kluender - krkluend@wisc.edu

Department of Psychology

University of Wisconsin

1202 West Johnson Street

Madison, WI 53706

Michael Kiefte

Dalhousie University

Popular version of paper 2aSC4

Presented Tuesday Morning, November 11, 2003

146th ASA Meeting, Austin, TX

Sound waves that reach the ear have a history. Qualities of a sound reaching the ear depend not only upon the sound source to which one is listening, but also upon the properties of the materials and surfaces sound encounters on its way to the ear. Natural sounds such as speech and music are composed of many frequencies, some low pitched, some high pitched, and some in between. For example, peaks and valleys in energy across frequencies are used to distinguish speech sounds.

However, as sound waves travel through the environment to your ear, the relative amounts of energy at different frequencies are virtually always colored by the surroundings. Sound energy at some frequencies is reinforced by reflective properties of surfaces, while energy at other frequencies is dampened by acoustically absorbent materials and shapes of objects in the environment. The challenge for listeners is to perceive the true sound source without mistaking effects of surroundings as qualities of the sound to which one is listening.

This challenge for hearing bears close resemblance to the problem of color constancy in vision. Perceived colors of objects depend upon relative amounts of different wavelengths of light reflecting off objects. For example, we perceive short wavelengths as blue and long wavelengths as red, and all colors are combinations of energy at different wavelengths varying from short to long. These combinations are called spectral distributions. The spectral distribution of light entering the eye depends on the type of illumination in addition to reflective qualities of surfaces light encounters on its way to the eyes. For example, sunlight, incandescent light, and fluorescent light produce dramatically different patterns of wavelengths reflected off the same object. Nevertheless, observers see objects as having pretty much the same colors whether viewing them indoors or outdoors across different illuminations. This fact of vision is called "color constancy."

We investigated whether people have the auditory equivalent of visual color constancy when listening to speech sounds, which we describe here as "auditory color constancy."

We tested the ability of listeners to compensate for changes in characteristics of the acoustic environment by using different vowel sounds as auditory objects. Differences between vowel sounds, such as "oo" in "boot" and "ee" in "beet," are sometimes referred to as differences in vowel color. We used vowel sounds because perception of vowels depends upon differences both in broad spectral characteristics spanning low to high frequencies and in local characteristics such as the frequencies of individual spectral peaks.

Local spectral peaks, called formants, are caused by vocal tract resonances, and the frequencies of these peaks vary with changes in vocal tract shape. For example, raising the tongue or jaw lowers the frequency of the first formant (F1). Advancing the tongue toward the mouth opening results in a higher-frequency second formant (F2). A low-frequency F1 is heard as "oo" when accompanied by a low-frequency F2 and as "ee" when accompanied by a higher-frequency F2.

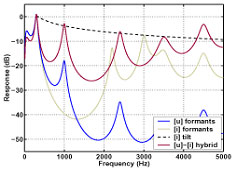

Listeners also use spectrally broad characteristics such as the balance between low- and high-frequency energy to perceive vowels. For example, "oo" typically has more low-frequency energy, resulting in a gross spectral tilt of rapidly declining energy as a function of increasing frequency. In contrast, "ee" has relatively more high-frequency energy, and energy decreases much more gradually with increasing frequency. When listening to isolated vowel sounds, listeners use a combination of both formant frequencies and gross spectral tilt to identify vowels.
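To make the two kinds of spectral cue concrete, here is a toy Python sketch of a vowel spectrum in decibels: a straight-line tilt on a log-frequency axis plus Gaussian-shaped formant peaks. It is only an illustration under stated assumptions; the function name `toy_spectrum_db`, the peak shape, and all numeric values (tilts in dB per octave, formant centers, heights, and bandwidths) are hypothetical choices, not the stimulus parameters used in this study.

```python
import math


def toy_spectrum_db(freqs_hz, tilt_db_per_octave, formants, ref_hz=250.0):
    """Return a toy log-magnitude spectrum (dB) at each frequency in freqs_hz.

    Hypothetical model: a gross tilt that changes by tilt_db_per_octave for
    every doubling of frequency above ref_hz, plus one Gaussian-shaped bump
    per formant. Each formant is a (center_hz, height_db, bandwidth_hz) tuple.
    """
    levels = []
    for f in freqs_hz:
        # Broad cue: gross spectral tilt, linear in dB against log2(frequency).
        level = tilt_db_per_octave * math.log2(f / ref_hz)
        # Local cues: formant peaks added on top of the tilt.
        for center_hz, height_db, bandwidth_hz in formants:
            level += height_db * math.exp(-0.5 * ((f - center_hz) / bandwidth_hz) ** 2)
        levels.append(level)
    return levels


freqs = [250, 500, 1000, 2000, 4000]
# Illustrative "oo"-like vowel: low F2 and a steep downward tilt.
oo_like = toy_spectrum_db(freqs, -12.0, [(300, 20, 80), (900, 15, 120)])
# Illustrative "ee"-like vowel: high F2 and a shallower tilt.
ee_like = toy_spectrum_db(freqs, -6.0, [(300, 20, 80), (2300, 15, 200)])

# Level at 4000 Hz relative to 250 Hz: more negative means less high-frequency energy.
print(round(oo_like[-1] - oo_like[0], 1), round(ee_like[-1] - ee_like[0], 1))
```

Changing only `tilt_db_per_octave` alters the broad balance of low- versus high-frequency energy, while changing only a formant center moves a single local peak; these are the two dimensions along which the stimuli in this study were varied.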

We created a matrix of vowel stimuli that varied perceptually from "oo" to "ee" in both of these dimensions: the local characteristics of F2 center frequency and the broad characteristics of spectral tilt. Along the first dimension, the frequency of the F2 peak was changed from low ("oo") to high ("ee") in seven steps. Along the second dimension, gross spectral tilt was adjusted in the same stepwise fashion, varying from a spectral tilt characteristic of "oo" to one characteristic of "ee." Sample stimulus spectra are illustrated in Figure 1.

Figure 1

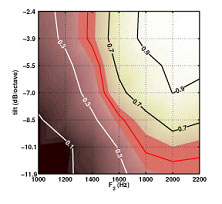
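The 7 by 7 design can be sketched as a simple grid. In this hypothetical Python sketch, `stimulus_matrix` pairs every F2 step with every tilt step; the endpoint values and the assumption of equal linear steps in hertz and in dB per octave are illustrative only, since the exact step sizes (and the frequency scale used to space them) are not given here.

```python
def linear_steps(start, stop, n):
    """Return n evenly spaced values from start to stop, inclusive."""
    return [start + (stop - start) * i / (n - 1) for i in range(n)]


def stimulus_matrix(f2_range_hz, tilt_range_db_per_octave, n_steps=7):
    """Pair every F2 step with every tilt step (n_steps x n_steps stimuli).

    Step 1 on each dimension is "oo"-like and step n_steps is "ee"-like.
    """
    f2_values = linear_steps(f2_range_hz[0], f2_range_hz[1], n_steps)
    tilt_values = linear_steps(tilt_range_db_per_octave[0], tilt_range_db_per_octave[1], n_steps)
    return [(f2, tilt) for f2 in f2_values for tilt in tilt_values]


# Hypothetical endpoints: F2 from 900 Hz to 2300 Hz, tilt from -12 to -6 dB/octave.
stimuli = stimulus_matrix((900.0, 2300.0), (-12.0, -6.0))
print(len(stimuli))               # 49
print(stimuli[0], stimuli[-1])    # (900.0, -12.0) (2300.0, -6.0)
```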

When listeners are asked to identify these vowel sounds as "ee" or "oo," they use both the local (F2) and broad (tilt) characteristics. Listeners' patterns of perception are shown in Figure 2. Clearly, both center frequency of F2 and overall spectral tilt contribute to perception of these vowel sounds.

Figure 2

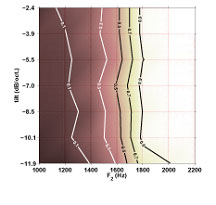
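One common way to express "both cues contribute" is a logistic cue-combination model, in which the probability of an "ee" response grows with a weighted sum of the F2 step and the tilt step. The Python sketch below is a hypothetical illustration of that idea, not an analysis reported in this study: the function `p_ee` and its weights and bias are made-up placeholders, not values fitted to the data in Figure 2.

```python
import math


def p_ee(f2_step, tilt_step, w_f2=1.0, w_tilt=0.6, bias=-6.4):
    """Probability of an "ee" response for a stimulus on the 7 x 7 grid.

    Steps run from 1 ("oo"-like) to 7 ("ee"-like). The weights and bias are
    hypothetical placeholders chosen so that the grid's center (4, 4) sits
    at 50% "ee".
    """
    z = bias + w_f2 * f2_step + w_tilt * tilt_step
    return 1.0 / (1.0 + math.exp(-z))


# Moving along either dimension toward "ee" raises the probability of "ee".
print(round(p_ee(1, 4), 2), round(p_ee(7, 4), 2))  # F2 varies, tilt fixed
print(round(p_ee(4, 1), 2), round(p_ee(4, 7), 2))  # tilt varies, F2 fixed
```

Setting `w_tilt` to zero would describe a listener whose responses depend on F2 alone; a larger `w_tilt` relative to `w_f2` would mean heavier reliance on tilt.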

To test for auditory color constancy, we next presented to listeners this matrix of 49 vowel sounds (7 formant frequencies by 7 spectral tilts) preceded by a sentence with reliable spectral properties. The purpose of the preceding sentence is to inform the listener about acoustic characteristics of the listening environment. The sentence was "You will now hear the vowel…" The sentence was then filtered to match the gross spectral tilt of the vowel that followed it. Listeners were asked simply to identify whether they heard the vowels as "ee" or "oo." Data for listener responses to the 49 vowel sounds following a precursor with matched spectral tilt are shown in Figure 3.

Figure 3

When long-term spectral tilt was the same for both the carrier sentence and the target vowel, the effect of tilt on vowel perception was effectively negated. Spectral tilt of the target vowel no longer mattered perceptually when tilt of the precursor and target were the same. Judgments of vowel sounds could be predicted entirely on the basis of formant frequencies. When default (non-filtered) sentence contexts are used, and spectral tilt is allowed to change between context and vowel, effects of tilt are restored, yielding data like those in Figure 2. This pattern of performance indicates that tilt, as a predictable spectral property of acoustic context, was effectively cancelled out of perception.

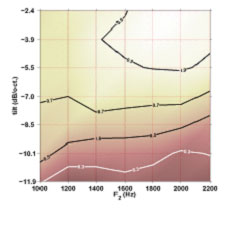
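A deliberately simplified way to picture this cancellation is to treat the context's long-term spectrum as an estimate of the listening environment and subtract it, in decibels, from the target vowel's spectrum frequency by frequency. The Python sketch below does exactly that and nothing more; it is an illustrative assumption, not the model proposed by the authors, and the helper names (`tilt_db`, `peak_db`, `compensate`) and all numeric values are hypothetical. It also treats the context as nothing but its tilt.

```python
import math

FREQS_HZ = [250, 500, 1000, 2000, 4000]


def tilt_db(freqs_hz, db_per_octave, ref_hz=250.0):
    """Gross spectral tilt: a straight line in dB against log2(frequency)."""
    return [db_per_octave * math.log2(f / ref_hz) for f in freqs_hz]


def peak_db(freqs_hz, center_hz, height_db, bandwidth_hz):
    """One Gaussian-shaped spectral peak (a stand-in for F2), in dB."""
    return [height_db * math.exp(-0.5 * ((f - center_hz) / bandwidth_hz) ** 2)
            for f in freqs_hz]


def compensate(target_db, context_db):
    """Subtract the context's long-term spectrum from the target, channel by channel."""
    return [t - c for t, c in zip(target_db, context_db)]


f2 = peak_db(FREQS_HZ, 2000.0, 15.0, 300.0)
results = []
for slope in (-12.0, -6.0):
    tilt = tilt_db(FREQS_HZ, slope)
    target = [a + b for a, b in zip(tilt, f2)]  # vowel = tilt + F2 peak
    context = tilt                              # precursor filtered to the same tilt
    results.append(compensate(target, context))

# After compensation, both targets reduce to the same F2 peak: tilt no longer matters.
print([round(x, 3) for x in results[0]])
print([round(x, 3) for x in results[1]])
```

If the context instead kept one fixed tilt while the target's tilt changed, the tilt difference would survive the subtraction, which parallels the restored tilt effect with unfiltered sentence contexts.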

Encoding of predictable characteristics such as spectral tilt requires only the most abstract characterization of the spectrum, with little spectral detail. Next, we carried out an experiment to investigate whether perceptual cancellation of predictable acoustic characteristics is restricted to gross properties such as tilt, or whether perception can also compensate for predictable local spectral properties. Beginning with the same sentence context, we added a single spectral peak that corresponded exactly to the F2 peak of the following vowel. This yielded intact sentences with an additional constant-frequency spectral peak present throughout their entire duration.

Figure 4

Results for 15 listeners shown in Figure 4 illustrate an effect opposite to that for the condition in which spectral tilt was matched between context and vowel. When the center frequency of the additional spectral peak in the context sentence is matched to F2 of the following target vowel, listeners rely largely upon global spectral characteristics (tilt) for identification of the target vowel. This pattern of performance indicates that when a single spectral prominence (F2) becomes a predictable spectral property of acoustic context, it becomes effectively cancelled out of perception. Effects of preceding context are not restricted to gross spectral properties. Perceptual cancellation of predictable spectral characteristics also occurs for local, relatively detailed, characteristics of spectral context.
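The same simplified subtraction can be applied to the added-peak condition. In this hypothetical Python sketch, the context is untilted except for a peak placed at the target's F2, so subtracting it removes F2 from the target and leaves the tilt (and F1) behind. As before, `spectrum_db`, `compensate`, and every numeric value are illustrative assumptions rather than the study's stimuli or model.

```python
import math

FREQS_HZ = [250, 500, 1000, 2000, 4000]
F1_PEAK = (300.0, 20.0, 80.0)    # (center_hz, height_db, bandwidth_hz), illustrative
F2_PEAK = (2000.0, 15.0, 300.0)  # illustrative


def spectrum_db(freqs_hz, db_per_octave=0.0, peaks=(), ref_hz=250.0):
    """Toy spectrum in dB: a tilt per octave plus Gaussian-shaped peaks."""
    levels = []
    for f in freqs_hz:
        level = db_per_octave * math.log2(f / ref_hz)
        for center_hz, height_db, bandwidth_hz in peaks:
            level += height_db * math.exp(-0.5 * ((f - center_hz) / bandwidth_hz) ** 2)
        levels.append(level)
    return levels


def compensate(target_db, context_db):
    """Subtract the context's long-term spectrum from the target, channel by channel."""
    return [t - c for t, c in zip(target_db, context_db)]


target = spectrum_db(FREQS_HZ, db_per_octave=-6.0, peaks=[F1_PEAK, F2_PEAK])
context = spectrum_db(FREQS_HZ, peaks=[F2_PEAK])  # untilted context plus a peak at F2
residual = compensate(target, context)

# What survives is the tilt and F1; the F2 peak has been cancelled.
expected = spectrum_db(FREQS_HZ, db_per_octave=-6.0, peaks=[F1_PEAK])
print(all(abs(r - e) < 1e-9 for r, e in zip(residual, expected)))  # True
```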

Two aspects of the context sentence could potentially limit extrapolation from these first experiments. First, the context was explicitly interpretable speech, and one may question whether these effects rely upon listeners' knowledge of how English speech should sound without spectral distortion. If so, such a priori knowledge could account for some of these perceptual effects. Second, the context was always the same phrase. Because it was perfectly predictable from trial to trial, it may not be representative of acoustic contexts more broadly. To address these concerns, the same experiments were conducted with vowels following multiple sentences played backwards and processed in the same ways as before. These time-reversed contexts were completely unintelligible.
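Time reversal destroys intelligibility because it scrambles the order of events, but it leaves the overall (long-term) magnitude spectrum of a recording unchanged, so spectral properties such as tilt or a constant added peak survive. The short Python sketch below checks this property on a toy signal with a naive discrete Fourier transform; the signal values and the helper name `dft_magnitudes` are hypothetical, and the sketch says nothing about how the study's recordings were actually processed.

```python
import cmath


def dft_magnitudes(samples):
    """Magnitudes of the discrete Fourier transform (naive O(N^2) version)."""
    n = len(samples)
    return [abs(sum(x * cmath.exp(-2j * cmath.pi * k * i / n)
                    for i, x in enumerate(samples)))
            for k in range(n)]


signal = [0.0, 1.0, 0.5, -0.3, 0.8, -1.0, 0.2, 0.4]  # arbitrary toy samples
reversed_signal = signal[::-1]                        # "played backwards"

forward = dft_magnitudes(signal)
backward = dft_magnitudes(reversed_signal)

# The sample order differs, but every frequency bin has the same magnitude.
print(reversed_signal == signal)                                   # False
print(all(abs(a - b) < 1e-9 for a, b in zip(forward, backward)))  # True
```

For a real-valued signal, reversing time replaces each DFT coefficient with its complex conjugate multiplied by a unit-magnitude phase factor, which is why the magnitudes match bin for bin.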

Effects of preceding acoustic context found for the sentence "You will now hear the vowel…" were closely replicated with these unintelligible time-reversed precursors. Perceptual cancellation of predictable acoustic context does not depend upon preceding context being intelligible speech, nor does it depend upon the context being identical trial to trial.

All of the experiments reported here provide evidence that the auditory system is quite adept at factoring out reliable characteristics of a listening environment. These novel findings bear a striking similarity to the phenomenon of visual color constancy. To achieve color constancy, the visual system must somehow extract reliable spectral properties across the entire image in order to determine the real colors of objects in a scene. Here, listeners extract reliable characteristics of the listening context in order to perceive the color of vowel sounds.