Jeffrey M. Yau – yau@jhu.edu

Johns Hopkins University School of Medicine

Baltimore, MD 21205

J. Bryce Olenczak

University of Maryland School of Medicine

Baltimore, MD 21201

Sliman J. Bensmaia – sliman@uchicago.edu

J. Frank Dammann – frankd@uchicago.edu

Alison I. Weber – aiweber@uchicago.edu

University of Chicago

Chicago, IL 60637

Popular version of paper 3aPP4

Presented Wednesday morning, May 25, 2011

161st ASA Meeting, Seattle, Wash.

When we listen to music, special nerve cells in our ears detect vibrations of air molecules that originate from the loudspeaker. Our brain then interprets the pattern of these sound waves as music. Our ability to perceive all sounds, be it music, speech, or noise, depends on our ears detecting vibrations carried through the air and our brain interpreting these sound waves.

However, hearing is not the only sense that relies on detecting and interpreting vibrations. Because of special nerve cells in our skin, we are also very sensitive to vibrations through our sense of touch. These nerve cells allow us to perceive the vibration of our cell phone when it rumbles in ‘Silent mode’. As we drive, we can feel the smoothness of the pavement through vibrations of the steering wheel because of these cells. Notably, these nerve cells underlie our ability to experience finely textured surfaces. For example, if you move your finger across swatches of felt and wool, you can easily identify the two fabrics. This remarkable sensitivity reflects your brain’s ability to interpret distinct patterns of vibrations that are produced in your skin as you explore the fabrics with your finger.

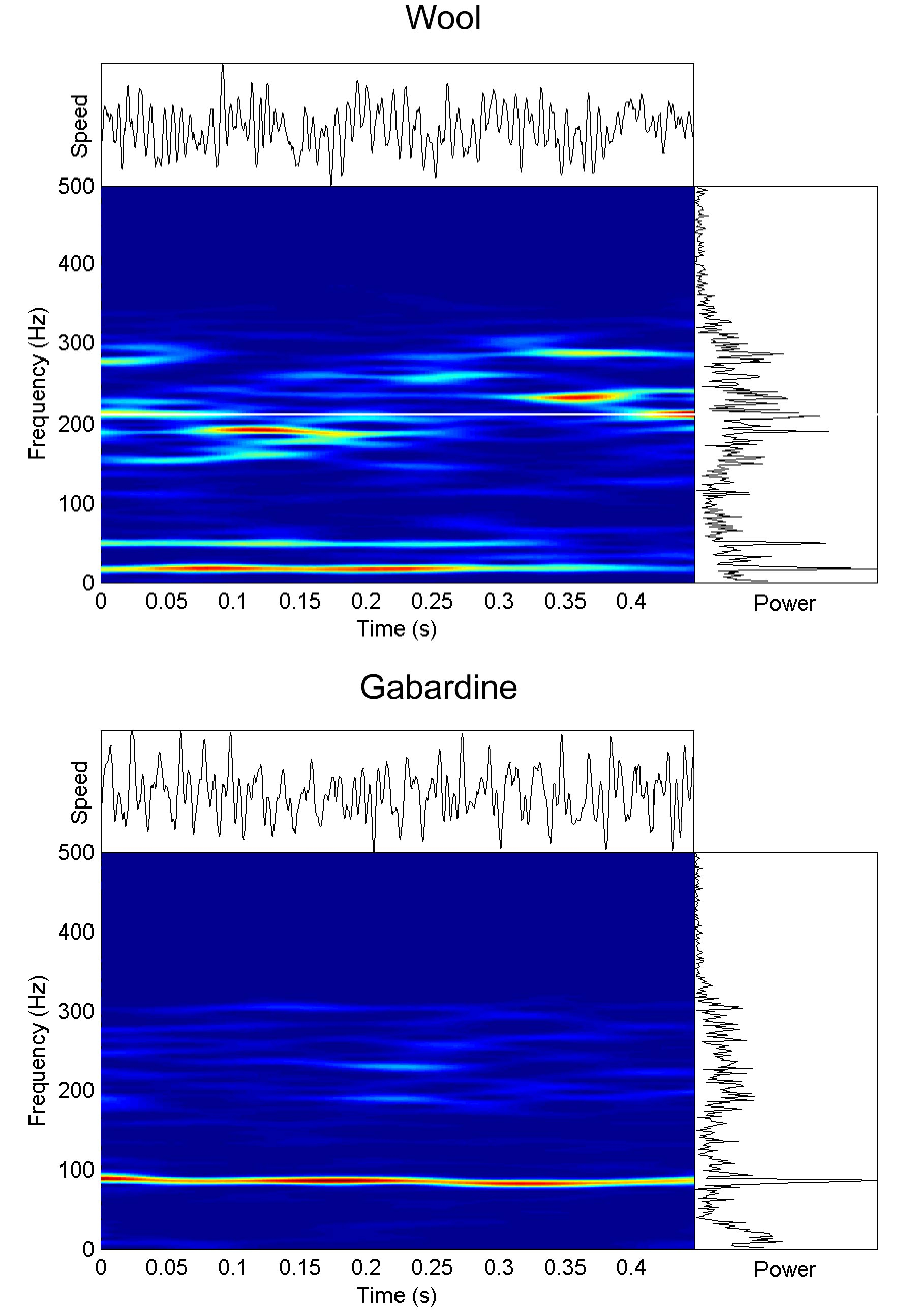

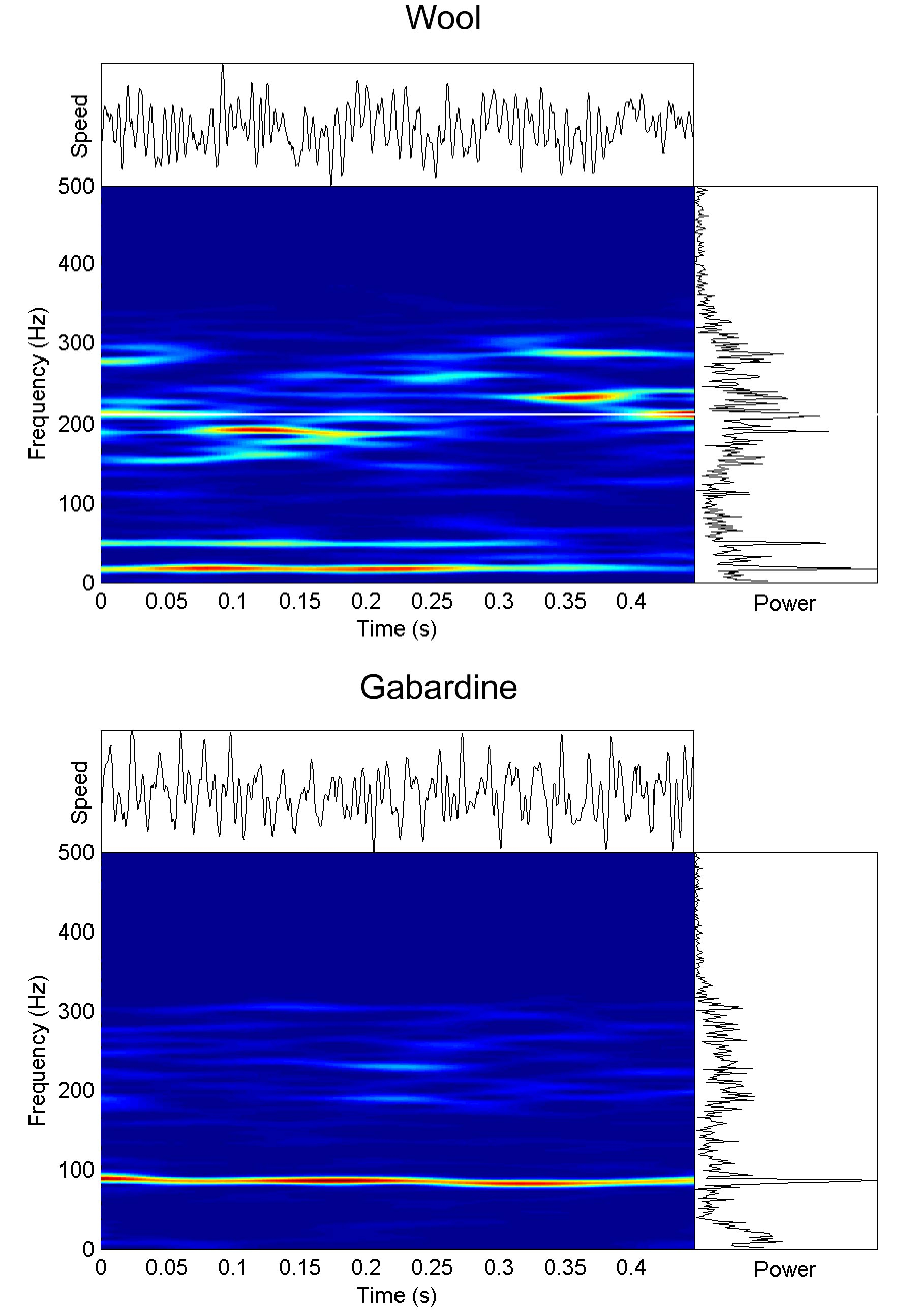

Figure 1. Example texture spectrograms. As a finger passes over wool (top) and gabardine (bottom) swatches, distinct patterns of vibrations are generated in the skin (upper marginal plots). Analysis of the power spectra (right marginal plots) enables us to identify and discriminate textures on the basis of frequency information. We similarly rely on spectral analysis in processing acoustics.
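To make the idea of a power spectrum concrete, the following is a minimal sketch that uses only the Python standard library. It does not use the recorded texture data from Figure 1. The two signals are synthetic sums of sinusoids, and the sample rate, duration, component frequencies, and the names `texture_a` and `texture_b` are all assumptions chosen for illustration. The sketch shows that two vibrations containing the same frequencies in different proportions can be told apart by finding where their spectral power peaks.

```python
import cmath
import math


def power_spectrum(signal, sample_rate):
    """Return (frequencies, power) for the non-negative frequency bins of a real signal."""
    n = len(signal)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        # Direct discrete Fourier transform of bin k (O(n^2) overall; fine for short signals).
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        freqs.append(k * sample_rate / n)
        power.append(abs(coeff) ** 2 / n)
    return freqs, power


def synthetic_vibration(components, sample_rate, duration):
    """Sum of sinusoids; components is a list of (frequency_hz, amplitude) pairs."""
    n = int(round(sample_rate * duration))
    return [
        sum(a * math.sin(2 * math.pi * f * t / sample_rate) for f, a in components)
        for t in range(n)
    ]


def peak_frequency(signal, sample_rate):
    """Frequency (Hz) of the bin carrying the most power."""
    freqs, power = power_spectrum(signal, sample_rate)
    return freqs[max(range(len(power)), key=power.__getitem__)]


SAMPLE_RATE = 1000  # Hz; assumed value, not taken from the study

# Hypothetical skin vibrations: same two components, different relative strengths.
texture_a = synthetic_vibration([(50, 1.0), (200, 0.2)], SAMPLE_RATE, 0.2)
texture_b = synthetic_vibration([(50, 0.2), (200, 1.0)], SAMPLE_RATE, 0.2)

print("texture_a peak (Hz):", peak_frequency(texture_a, SAMPLE_RATE))
print("texture_b peak (Hz):", peak_frequency(texture_b, SAMPLE_RATE))
```

Real texture-evoked vibrations are far richer than two sinusoids, so a single peak frequency would rarely be enough; comparing the full shape of the spectra is the more realistic analogue of what the figure shows.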

Although we often consider the different senses as operating independently, we typically experience stimulation of multiple senses at the same time. To appreciate a multisensory experience involving touch and audition, place your hand on your Adam’s apple while humming or speaking aloud. The vibrations produced in your vocal cords simultaneously excite nerve cells in your hand and in your ear, resulting in an audio-tactile experience.

Figure 2. Classic example of audio-tactile perception. In old Western movies, a cowboy would put his ear against a railroad track to listen for a train in the distance. In these scenes, the approach of the train was telegraphed by vibrations passed along the steel tracks. As the train nears, it can be detected both from vibrations in the air (i.e., sound) and from vibrations along the track. Photo courtesy of Eric Carlson.

Because both audition and touch are sensitive to environmental oscillations, we hypothesized that the two interact during the perception of vibrations. We designed a series of human behavioral experiments to test whether touch and audition interact and how specific these interactions might be.

We first asked participants to identify and compare vibrations delivered to their finger. This allowed us to determine how well participants could perceive vibrations through their sense of touch alone. We next asked participants to compare the same vibrations with their finger, but this time while presenting them with sounds through headphones. We reasoned that if audition interacted with touch, participants’ ability to perceive vibrations through touch would change as a result of hearing sounds through headphones; however, if the two senses were independent, hearing sounds would not affect touch perception at all.

We found that participants’ ability to perceive vibrations through touch did change if they simultaneously heard sounds. The sounds that participants heard systematically biased their tactile perception of vibration frequency (for example, a vibration of a given frequency feels like it has a higher frequency if it is paired with a high-frequency sound). This audio-tactile interaction appears to be specific to frequency because sounds did not affect how intense a vibration felt (i.e., a vibration felt equally intense regardless of whether it was accompanied by a sound). In short, sound biased the identity of vibrations, but not their intensity.

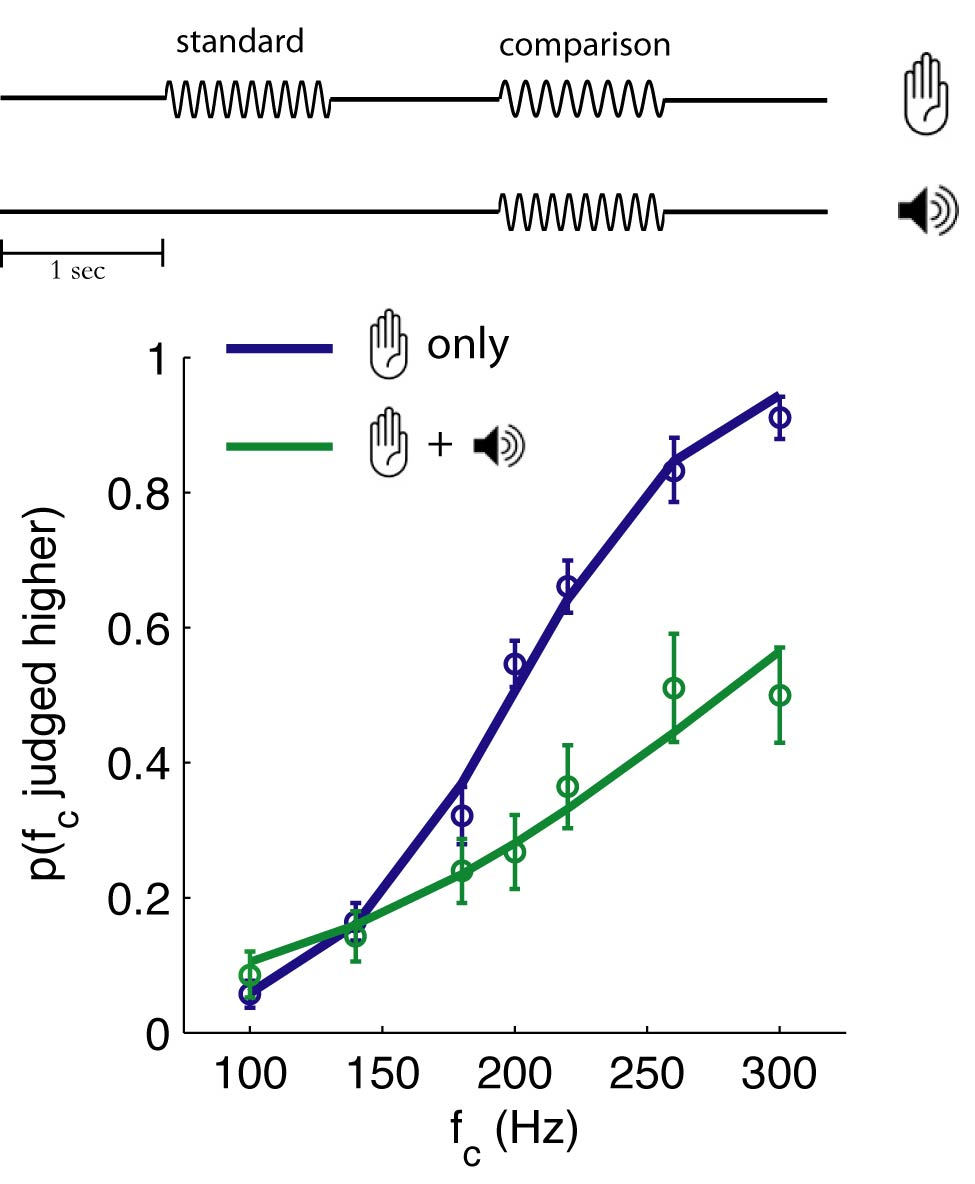

Figure 3. Audio-tactile interactions in frequency perception. Participants compared the frequency of two vibrations delivered to their right index finger. The data indicate the probability that a vibration at a comparison frequency (fC) was judged to be higher than the 200-Hz standard frequency. The blue trace shows baseline group performance measured in the absence of auditory distraction. The green trace shows how performance changed when participants performed vibration discriminations while simultaneously hearing a 100-Hz auditory tone. Sounds biased tactile perception such that a 300-Hz vibration felt like a 200-Hz vibration when combined with a 100-Hz tone.
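Curves like those in Figure 3 are often summarized with a psychometric function. The sketch below is one common way to describe such data, not the analysis used in the study: it assumes a cumulative-Gaussian shape, an unbiased baseline whose point of subjective equality (PSE) sits at the 200-Hz standard, and a biased PSE of 300 Hz taken loosely from the caption's example. The spread `SIGMA_HZ` and the function name `p_judged_higher` are hypothetical, and the printed numbers are illustrative rather than measured.

```python
import math


def p_judged_higher(comparison_hz, pse_hz, sigma_hz):
    """Cumulative-Gaussian psychometric function.

    Returns the modeled probability that a comparison vibration is judged
    higher in frequency than the standard. pse_hz is the comparison frequency
    at which that probability is 0.5.
    """
    z = (comparison_hz - pse_hz) / (sigma_hz * math.sqrt(2))
    return 0.5 * (1 + math.erf(z))


SIGMA_HZ = 40       # assumed spread of the curve; not reported in the text
BASELINE_PSE = 200  # assumption: with no sound, the PSE equals the 200-Hz standard
BIASED_PSE = 300    # illustrative: a 300-Hz vibration feels like 200 Hz with a 100-Hz tone

for fc in (100, 200, 300, 400):
    baseline = p_judged_higher(fc, BASELINE_PSE, SIGMA_HZ)
    with_tone = p_judged_higher(fc, BIASED_PSE, SIGMA_HZ)
    print(f"fC = {fc} Hz: baseline {baseline:.2f}, with 100-Hz tone {with_tone:.2f}")
```

In this description, a bias appears as a sideways shift of the curve (a change in `pse_hz`), whereas a change in sensitivity would appear as a change in its steepness (`sigma_hz`). Keeping the two parameters separate is what lets a bias be distinguished from a loss of discrimination ability.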

We then repeated the experiments, but this time participants identified and compared two sounds while ignoring vibrations delivered to their finger. Just as sounds biased the identity of vibrations, vibrations also biased the identity of sounds. This indicates that audio-tactile perceptual interactions in the frequency domain are reciprocal – either sensory modality can bias perception in the other. In contrast, although we found that sounds did not affect the perceived intensity of vibrations, vibrations did bias the loudness of sounds (i.e., a sound seemed louder if a vibration was simultaneously felt on the finger).

Together these results imply that audition and touch interact in very specific ways. What you hear biases the identity of vibrations you feel, but not how intense they feel. What you feel, however, biases both the identity and loudness of sounds you hear. This pattern of results indicates that there may be a shared representation of temporal frequency in the brain. Additionally, our results imply that multisensory interactions in acquiring information about frequency and intensity rely on different neural mechanisms (which is also supported by the results of control experiments showing that pitch and loudness interactions are differentially sensitive to stimulus timing).

Our results indicate that the crosstalk between audition and touch is very specific with regard to frequency. This makes sense because both modalities are sensitive to environmental oscillations, and the brain may have evolved common circuits for processing this information.