Sona Patel * – Sona.Patel@unige.ch

Tanja Banzigar

Klaus R. Scherer *

* NCCR Swiss Center for Affective Sciences,

University of Geneva

7 Rue des Battoirs, 1205 Geneva, Switzerland

Popular version of paper 5aSCb31

Presented Friday morning, November 4, 2011

162nd ASA Meeting, San Diego, Calif.

OVERVIEW

We rarely think about the amount of information we communicate without explicitly saying it. When a person is speaking, we are often able to estimate the person’s age, gender, physical health, interpersonal attitude, social class, and maybe regional dialect just from the person’s intonation or prosody. More importantly, we are able to identify the person’s mood and emotional states. Emotional information is used in everything from decision-making (e.g., selecting a job based on passion instead of salary) to modifying behavior (e.g., hugging a friend who “sounds” sad).

Emotions are also used to establish personal connections. In an ABC article by Jake Tapper and Jessica Hopper on President Obama's reaction to the oil leak in the Gulf, presidential historian Douglas Brinkley is quoted as saying, “The American people want to feel that the president is connecting emotionally to them.”

THE EMOTION COMMUNICATION PROCESS

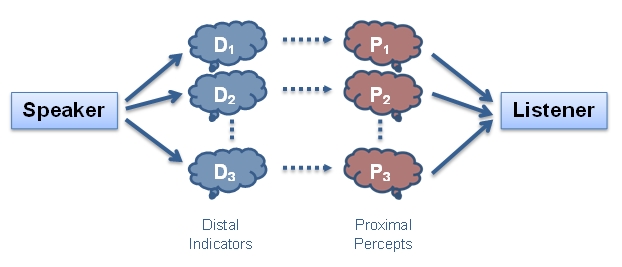

To understand the process of emotion communication, it is best to use a framework that considers both the speaker and the listener. The modified version of the Brunswikian Lens Model proposed by Klaus Scherer provides such a framework (shown in Figure 1). As a person speaks, the voice production mechanisms change in complex ways to produce a speech signal. This signal can be broken down into a series of acoustic parameters such as fundamental frequency and intensity. While certain acoustic changes convey the phonemic sounds that make up words and sentences (the verbal or semantic message), others convey prosodic information. It is the prosodic changes that cue the speaker’s emotion. They are referred to as “distal cues” in the model, as they are distant from the observer. Once the acoustic cues reach the ears of the listener, they are modified by the listener’s auditory system as the listener perceives the emotion. In the framework of the model shown in Figure 1, the cues that the listener perceives are called the proximal cues (i.e., close to the observer). For example, the proximal cue for fundamental frequency would be perceived pitch.

Figure 1. The modified Brunswikian Lens Model of emotion communication in speech (modified from Scherer, 2003).

Although the proximal cues are based on the objectively measured distal acoustic cues, it is important to note that each proximal percept in the observer does not necessarily correspond with a unique distal indicator (as indicated by the dotted arrows). The proximal percepts may be modified by either the speech transmission channel (such as distance or noise) or the structural characteristics of the perceptual organs and the sound transduction process (for example, selective enhancement of certain frequency bands).

OUR APPROACH

Typically, scientists have studied the distal acoustic indicators of emotion, or the “production side” of the model. In these studies, an acoustic analysis is performed on emotional speech samples, followed by statistical analyses to see whether any of the acoustic features can differentiate between any two emotions. One downside to this method is that the listener is not accounted for in the results. Even if some acoustic features are found to differ for a pair of emotions, it is not clear whether the listener actually uses these acoustic features in perceiving differences between the emotions.

An alternative method is to break down the perceptual side into its constituent parts and relate these perceived qualities to the acoustic features. The Brunswikian Lens Model is well suited to this approach. For this, it is necessary to measure the proximal cues directly. This is no easy task: relying on listeners’ verbal reports of their impressions of the speaker’s voice and speech makes it difficult to interpret the data across listeners, since the terms used to describe the qualities vary widely. In this study, we attempt to develop a test instrument for obtaining proximal voice percepts.

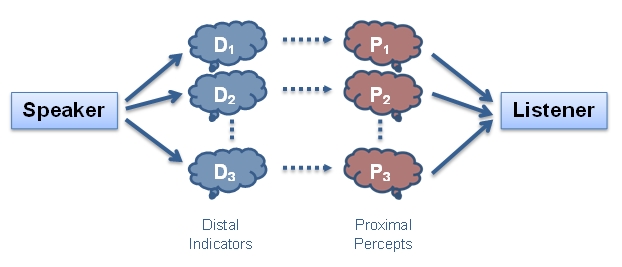

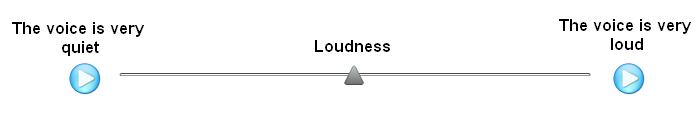

The proximal measures are obtained using listening tests to collect subjective impressions of the vocal qualities. We used eight visual-analog scales to measure prosodic and voice quality characteristics (pitch, intensity, intonation, speech rate, articulation, stability, harshness, and sharpness) and four scales to measure emotion dimensions (arousal, valence or pleasantness, power, and intensity). Examples of the loudness and sharpness scales are shown in Figures 2 and 3.

Figure 2. Example of the user interface for rating the “loudness” of emotional speech (and corresponding example sounds) used in the perceptual test.

Figure 3. Example of the user interface for rating the “sharpness” of emotional speech (and corresponding example sounds) used in the perceptual test.

RESULTS

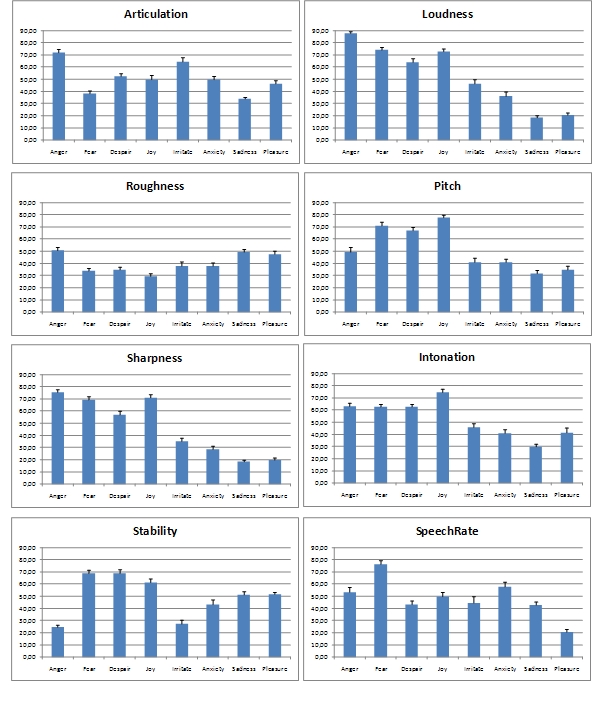

Our first results show that listeners were reliably able to use these scales to judge emotions. The most reliable scales were loudness and speech rate, and the least reliable were roughness and articulation.

Figure 4. Example of the user interface for rating the “sharpness” of emotional speech (and corresponding example sounds) used in the perceptual test (adapted from Patel, Banzigar, & Scherer, In preparation).
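As a rough illustration of what "reliable" can mean for a rating scale, the sketch below computes Cronbach's alpha across listeners for a single scale. This is an assumption made for illustration only: the text does not say which reliability statistic was used, and the ratings in the example are invented, not data from the study.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for one rating scale.

    ratings[i][j] is the rating listener j gave to speech sample i
    (for example, a position on a 0-100 visual-analog scale).
    Listeners are treated as the "items" of the classic formula.
    """
    n_listeners = len(ratings[0])
    if n_listeners < 2 or len(ratings) < 2:
        raise ValueError("need at least two listeners and two samples")
    # Variance of each listener's ratings across the speech samples.
    listener_vars = [
        variance([row[j] for row in ratings]) for j in range(n_listeners)
    ]
    # Variance of the summed rating per speech sample.
    total_var = variance([sum(row) for row in ratings])
    return (n_listeners / (n_listeners - 1)) * (1 - sum(listener_vars) / total_var)


# Hypothetical loudness ratings: 4 speech samples rated by 3 listeners.
example_loudness = [
    [20, 25, 22],
    [60, 65, 58],
    [90, 85, 88],
    [40, 45, 50],
]
print(round(cronbach_alpha(example_loudness), 3))
```

Values close to 1 indicate that listeners order the speech samples in much the same way on that scale; lower values indicate more disagreement.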

Also, through statistical regression analyses, we have found that some of these scales can be described by one or more of the distal acoustic cues commonly used in emotion research. These results provide the next step towards modeling the entire process of communicating emotions. Considering the wide array of circumstances in which the communication of emotions occurs, an understanding of how emotions are communicated in speech and voice is important for this ultimate goal.
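To make the idea of describing a proximal scale by a distal cue more concrete, here is a minimal sketch of a one-predictor least-squares regression. It is not the analysis performed in the study: the pairing of perceived pitch with mean fundamental frequency follows the example given earlier in the text, the function name `fit_line` is hypothetical, and every number is invented for illustration.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x.

    Returns (slope, intercept, r_squared).
    """
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    # Share of the variance in y accounted for by the fitted line.
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return slope, intercept, 1 - ss_res / ss_tot


# Invented data: one distal cue and one proximal rating per speech sample.
mean_f0_hz = [110, 150, 190, 230, 270]    # measured fundamental frequency
perceived_pitch = [18, 35, 52, 70, 88]    # mean listener rating, 0-100 scale

slope, intercept, r2 = fit_line(mean_f0_hz, perceived_pitch)
print(f"slope={slope:.3f} rating units per Hz, R^2={r2:.3f}")
```

A scale that is described by several acoustic cues at once would call for multiple regression, which extends the same idea to more than one predictor.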

SOURCES

Scherer, K.R. (2003). Vocal communication of emotion: A review of research paradigms. Speech Communication, 40, 227-256.

Scherer, K.R. (In Preparation). A rating scale for emotional speech: Linking acoustics to perception.