Spencer Kelly – skelly@colgate.edu

Department of Psychology

Center for Language and Brain

Colgate University

Hamilton, NY, USA

Meghan Healey – meghan.healey@nih.gov

Language Section, National Institute on Deafness and Other Communication Disorders

National Institutes of Health

Bethesda, MD, USA

Asli Ozyurek – Asli.Ozyurek@mpi.nl

Radboud University Nijmegen

Max Planck Institute for Psycholinguistics

Donders Institute for Cognition, Brain and Behavior

Nijmegen, The Netherlands

Judith Holler – Judith.holler@mpi.nl

Max Planck Institute for Psycholinguistics

Nijmegen, The Netherlands

and

School of Psychological Sciences

University of Manchester

Manchester, UK

Popular version of paper 2aSC15

Presented Tuesday morning, May 15, 2012

163rd ASA Meeting, Hong Kong

Background

Hand gestures are an ever-present part of spoken communication. Gestures and spoken words are thought to form a single, integrated system of meaning when people produce language (McNeill, 1992), and this integration appears to carry over to language comprehension as well (Kelly, Ozyurek & Maris, 2010). However, it remains unclear whether hand gestures truly have a special relationship with speech, or whether the brain processes them like any other visual action that accompanies speech. To explore this question, we compared how strongly speech is linked with gestures (e.g., pantomiming stirring a cup of coffee with no objects present) versus actual actions on objects (e.g., actually stirring a cup of coffee). Because actions on objects are often performed for non-communicative reasons (e.g., cutting vegetables for dinner while talking about one's day at work), we predicted that they would have less impact on how people hear and understand speech than co-speech gestures, which are more commonly used to communicate.

Method

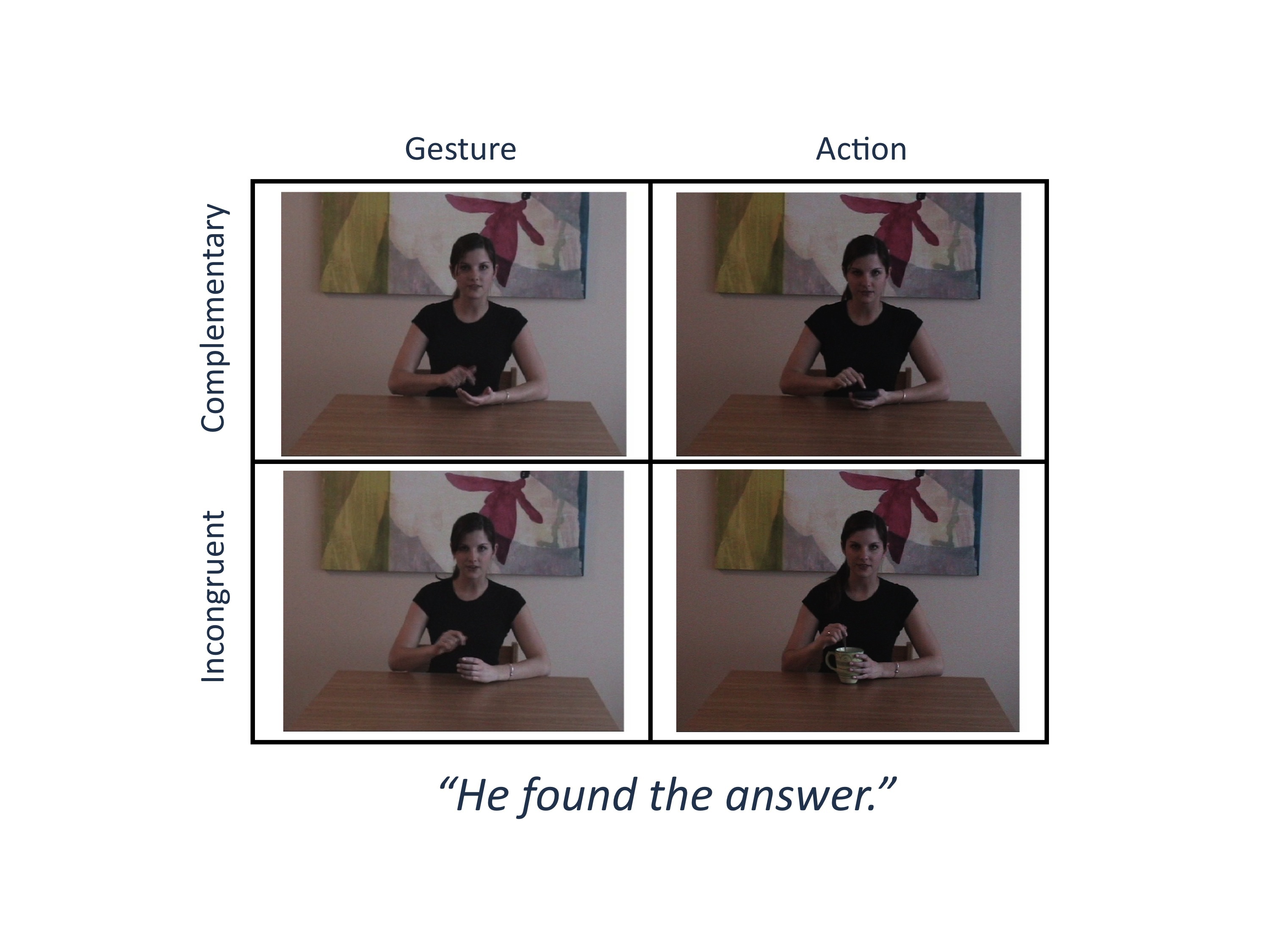

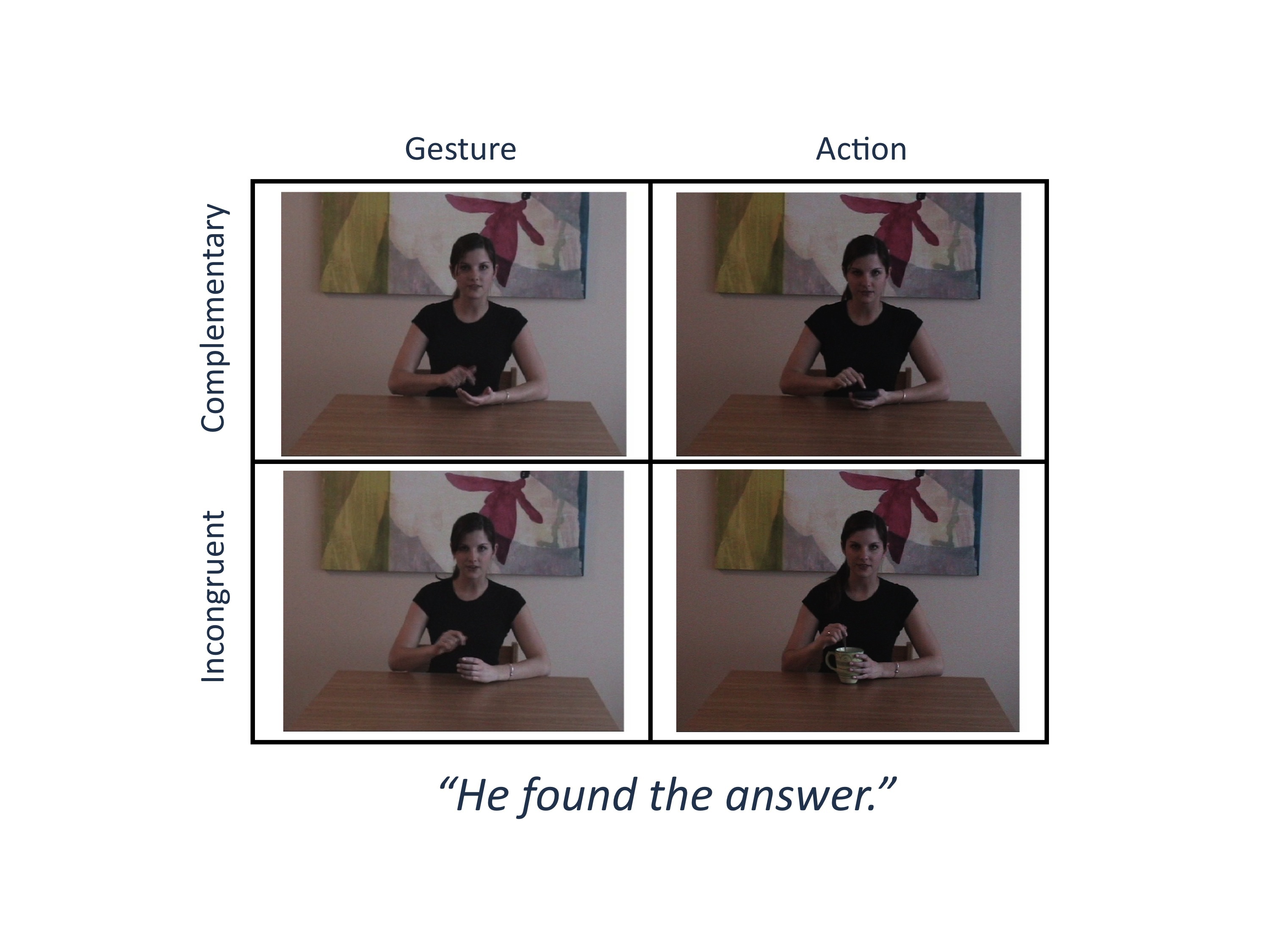

Eighteen people watched short video clips of an actor who produced speech accompanied either by gestures or by actions on objects. We varied the relationship between the speech and the gesture or action so that the two were either complementary or incongruent. For example, the sentence "He found the answer" was accompanied by a complementary gesture pantomiming the use of a calculator, indicating that the answer had been found with one. The complementary action condition was identical, except that the pantomimed object was present (in this example, the speaker used an actual calculator). To create the incongruent conditions, the gestures and actions were paired with speech that they did not fit. For example, the incongruent gesture for "He found the answer" pantomimed stirring with a spoon, and the incongruent action was actually stirring with a spoon. See the figures above for a visual depiction of the four conditions.

While people watched these videos, we measured the brain's electrical responses to the words spoken in the sentences. Specifically, we looked for two electrical responses, one early and one late, that would reflect (1) early auditory processing of the speech sounds and (2) later processing of the meaning of the spoken words in context.

Results

Early Effect

For the early effect, participants paid more auditory attention to words accompanied by actions than to words accompanied by gestures, in both the complementary and the incongruent conditions. One interpretation of this finding is that gestures are a more natural context for speech than actions: words accompanied by gestures may be more expected, and therefore easier to process, than words accompanied by actions.

Late Effect

For the late effect, participants had more difficulty processing words accompanied by incongruent gestures than words accompanied by complementary gestures, but there was no such difference for actions. In other words, incongruent gestures appear to have made the meaning of the words harder to understand than complementary gestures did, whereas the relationship between actions and speech (complementary or incongruent) did not influence how the brain processed the meaning of the words. This suggests that, during language comprehension, gestures may be more tightly connected to speech than actions are.

Conclusion

The findings suggest that gestures are different from actions on objects and may have a special relationship with the speech they accompany. One possible reason why gestures receive special attention is that hand gestures are specifically designed for communication, whereas actions on objects have merely been borrowed for it. For example, several researchers have argued that gestures evolved as part of language and communication (Hewes, 1973; McNeill, 1992; Rizzolatti & Arbib, 1998; Tomasello, 2008). Common actions on objects, which no doubt predate gestures in their evolutionary emergence, initially served a non-communicative function: manipulating some aspect of the physical world to accomplish practical goals (e.g., eating, drinking or using tools). In this light, gesture may be more than a powerful and ever-present source of visual information that routinely affects speech processing and understanding in face-to-face communication. It may be a special type of action that is uniquely linked with speech, making it a fundamental part of human language.

References

Hewes, G. W. (1973). Primate communication and the gestural origins of language. Current Anthropology, 14, 5-24.

Kelly, S. D., Ozyurek, A., & Maris, E. (2010). Two sides of the same coin: Speech and gesture mutually interact to enhance comprehension. Psychological Science, 21(2), 260-267.

McNeill, D. (1992). Hand and mind: What gestures reveal about thought. Chicago: University of Chicago Press.

Rizzolatti, G., & Arbib, M. A. (1998). Language within our grasp. Trends in Neurosciences, 21(5), 188-194.

Tomasello, M. (2008). Origins of human communication. Cambridge, MA: MIT Press.