1aPAb1 – On the origin of thunder: reconstruction of lightning flashes, statistical analysis and modeling

Arthur Lacroix – arthur.lacroix@dalembert.upmc.fr

Thomas Farges –thomas.farges@cea.fr

CEA, DAM, DIF, Arpajon, France

Régis Marchiano – regis.marchiano@sorbonne-universite.fr

François Coulouvrat – francois.coulouvrat@sorbonne-universite.fr

Institut Jean Le Rond d’Alembert, Sorbonne Université & CNRS, Paris, France

Popular version of paper 1aPAb1

Presented Monday morning, November 5, 2018

176th ASA Meeting, Vancouver, Canada

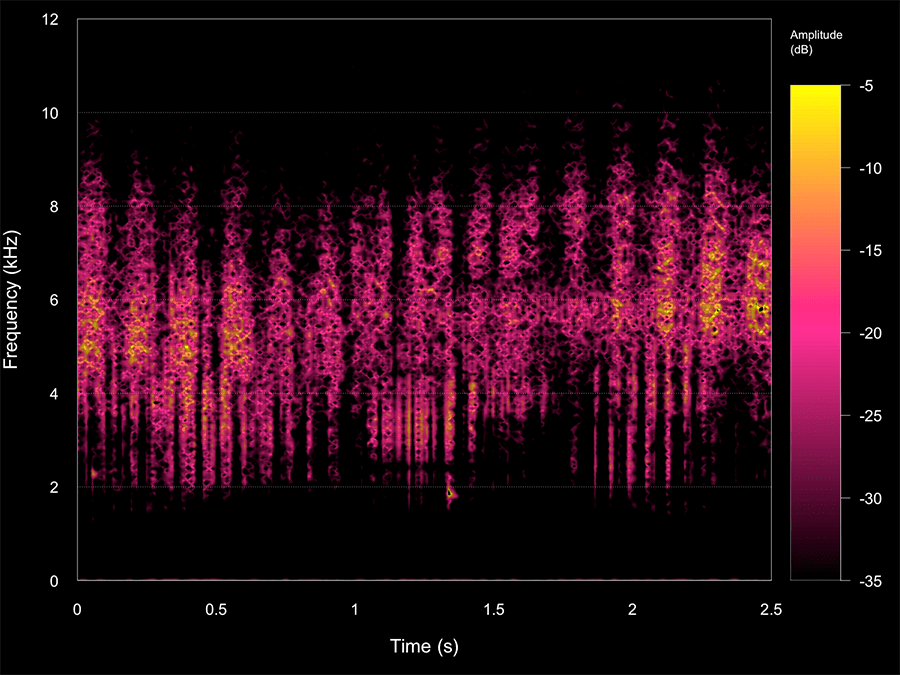

Thunder is the sound produced by lightning, a frequent natural phenomenon occurring in the mean about 25 times per second somewhere on the Earth. The Ancients associated thunder with the voice of deities, though old Greek scientists like Aristotle invoked some natural causes. Modern science established the link between lightning and thunder. Although the sound is audible, thunder also contains an infrasonic frequency component, non-audible by humans, whose origin remains controversial. As part of the European project HyMeX on the hydrological cycle of the Mediterranean region, thunder was recorded continuously by an array of four microphones during two months in 2012 in Southern France, in the frequency range of 0.5 to 180 Hz covering both infrasound and audible sound. In particular, 27 lightning flashes were studied in detail. By measuring the time delays between the different parts of the signals at different microphones, the direction from which thunder comes is determined. Dating the lighting ground impact and therefore the emission time, the detailed position of each noise source within the lightning flash can be reconstructed. This “acoustical lightning photography” process was validated by comparison with a high frequency direct electromagnetic reconstruction based on an array of 12 antennas from New Mexico Tech installed for the first time in Europe. By examining the altitude of the acoustic sources as a function of time, it is possible to distinguish, within the acoustical signal, the part that originates from the lightning flash channel connecting the cloud to the ground, from the part taking place within the ground. In some cases, it is even possible to separate several cloud-to-ground branches. Thunder infrasound comes unambiguously mainly from return strokes linking cloud to ground. Our observations contradict one of the theories proposed for the emission of infrasound by thunder, which links thunder to the release of electrostatic pressure in the cloud. On the contrary, it is in agreement with the theory explaining thunder as resulting from the sudden and intense air compression and heating – typically 20,000 to 30,000 K – within the lightning stroke. The second main result of our observations is the strong dependence of the characteristics of thunder with the distance between the lightning and the observer. Although a common experience, this dependence has not been clearly demonstrated in the past. To consolidate our data, a theoretical model of thunder has been developed. A tortuous shape for the lightning strike between cloud and ground is randomly generated. Each individual part of this strike is modeled as a giant spark, solving the complex equations of hydrodynamics and plasma physics. Summing all contributions, the lightning stroke is transformed into a source of noise which is then propagated down to a virtual listener. This simulated thunder is analyzed and compared to the recordings. Many of our observations are qualitatively recovered by the model. In the future, this model, combined with present and new thunder recordings, could potentially be used as a lightning thermometer, to directly record the large, sudden and yet inaccessible temperature rise within the lightning channel.