1pSC12 – Is it blow or below? How well can second-language learners distinguish between words that differ in syllable count?

Keiichi Tajima – tajima@hosei.ac.jp

Dept. of Psychology

Hosei University

2-17-1 Fujimi, Chiyoda-ku

Tokyo 102-8160

Japan

Stefanie Shattuck-Hufnagel – sshuf@mit.edu

Research Laboratory of Electronics

Massachusetts Institute of Technology

77 Massachusetts Avenue

Cambridge, MA 02139

USA

Popular version of paper 1pSC12

Presented Sunday afternoon, June 25, 2017

173rd ASA Meeting, Boston

Learning pronunciation and listening skills in a second language is a challenging task. Languages vary not only in the vowels and consonants that are used, but also in how the vowels and consonants combine to form syllables and words. For example, syllables in Japanese are relatively simple, often consisting of just a consonant plus a vowel, but syllables in English tend to be more complex, containing several consonants in a row. Because of these differences, learning the syllable structure of a second language may be difficult.

For example, when Japanese learners of English pronounce English words such as “stress,” they often pronounce it as “sutoresu,” inserting what are called epenthetic vowels (underlined) between adjacent consonants and at the end of words [1]. Similarly, when asked to count the number of syllables in spoken English words, Japanese learners often over-estimate the number of syllables, saying, for example, that the one-syllable word, play, contains 2 syllables [2].

This may be because Japanese listeners “hear” an epenthetic vowel between adjacent consonants even if no vowel is physically present. That is, they may hear “play” as something like “puh-lay,” thus reporting to have heard two syllables in the word. In fact, a study has shown that when Japanese speakers are presented with a nonsense word like “ebzo,” they report hearing an “illusory” epenthetic vowel between the b and z; that is, they report hearing “ebuzo” rather than “ebzo,” even though the vowel u was not in the speech signal [3].

These tendencies suggest the possibility that Japanese learners may have difficulty distinguishing between English words that differ in syllable count, or the presence or absence of a vowel, e.g. blow vs. below, sport vs. support. Furthermore, if listeners tend to an extra vowel between consonants, then they might be expected to misperceive blow as below more often than below as blow.

To test these predictions, we conducted a listening experiment with 42 Japanese learners of English as participants. The stimuli consisted of 76 pairs of English words that differed in the presence or absence of a vowel. Each pair had a “CC word” that contained a consonant-consonant sequence, like blow, and a “CVC word” that had a vowel within that sequence, like below. On each trial, listeners saw one pair of words on the computer screen, and heard one of them through headphones, as pronounced by a male native English speaker. The participants’ task was to pick which word they think they heard by clicking on the appropriate button. A control group of 14 native English participants also took part in the experiment.

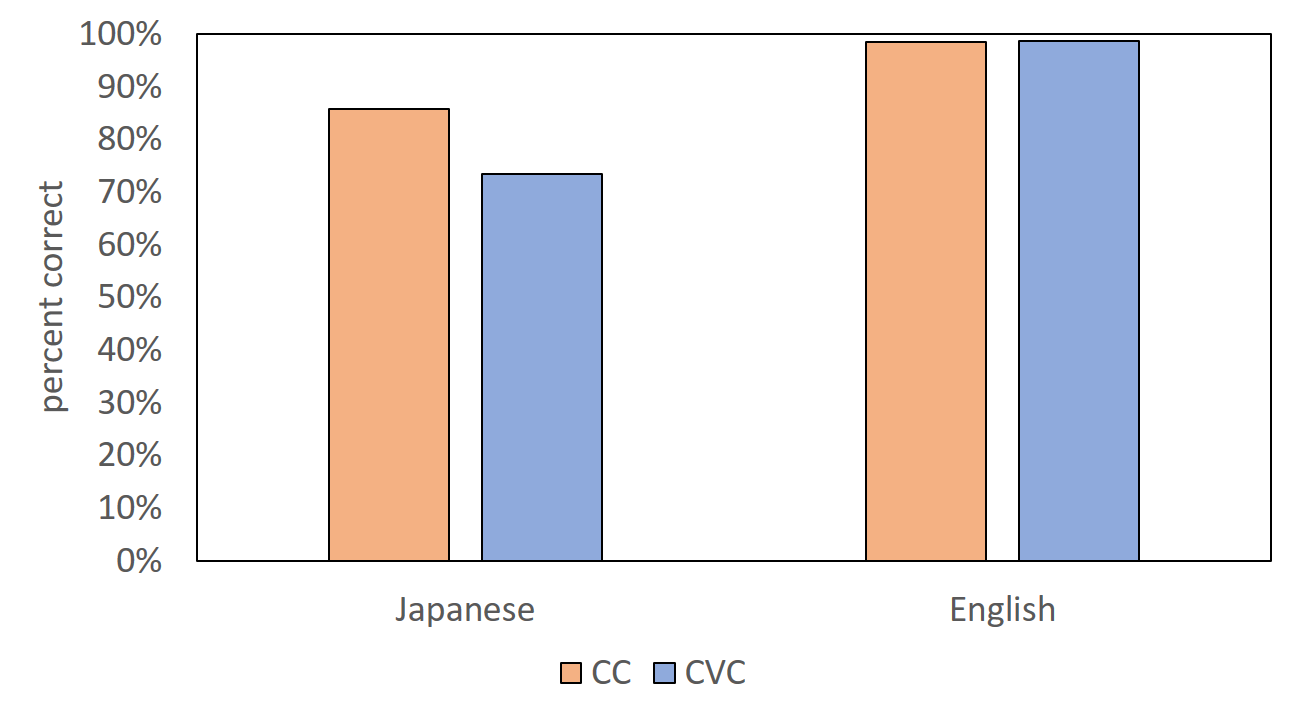

Figure 1 shows the percentage of correct responses for CC words and CVC words for the Japanese learners of English (left half) and for the native English listeners (right half). The right half of Figure 1 clearly shows that the native listeners were very good at identifying the words; they were correct about 98% of the time. In contrast, the left half of Figure 1 shows that the Japanese listeners were less accurate; they were correct about 75~85% of the time. Interestingly, their accuracy was higher for CC words (85.7%) than for CVC words (73.4%), contrary to the prediction based on vowel epenthesis.

Figure 1. Percent correct identification rate for CC words, e.g. blow, and CVC words, e.g. below, for Japanese learners of English (left half) and native English listeners (right half). Credit: Tajima/ Shattuck-Hufnagel

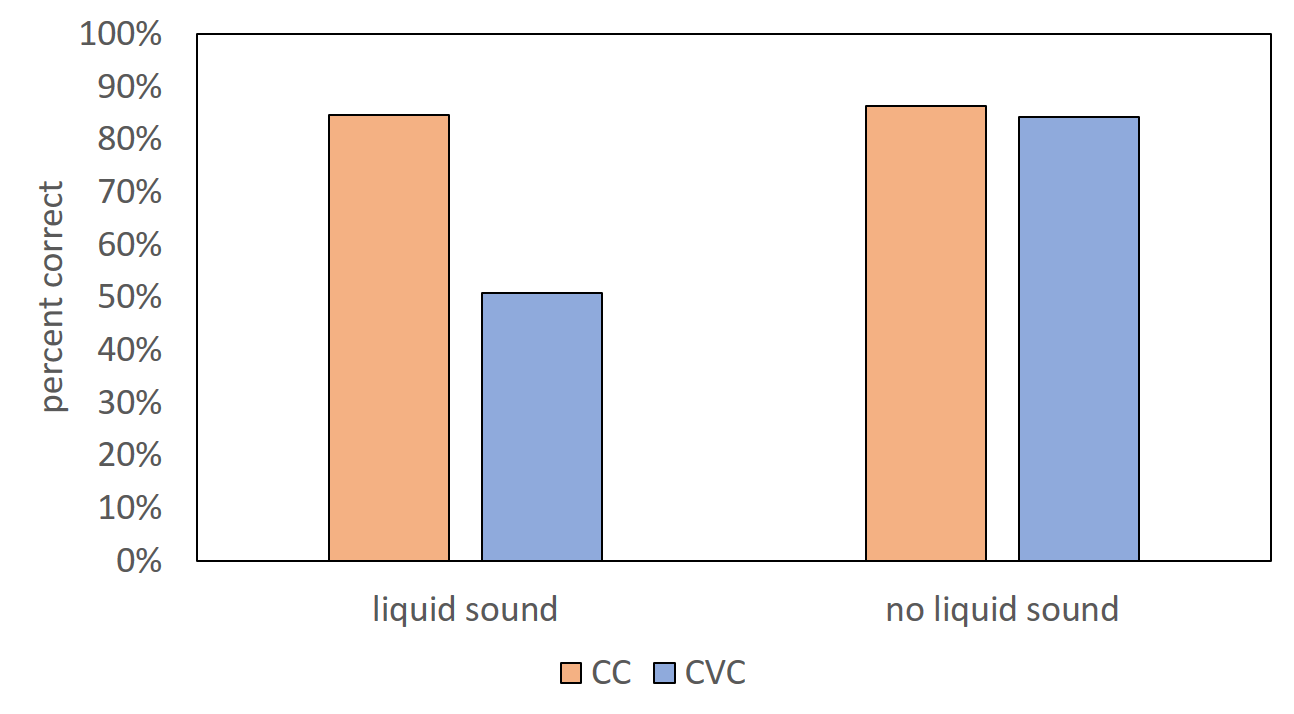

To find out why Japanese listeners’ performance was lower for CVC words than for CC words, we further analyzed the data based on phonetic properties of the target words. It turned out that Japanese listeners’ performance was especially poor when the target word contained a particular type of sound, namely, a liquid consonant such as “l” and “r”. Figure 2 shows Japanese listeners’ identification accuracy for target words that contained a liquid consonant (left half), like blow-below, prayed-parade, scalp-scallop, course-chorus, and for target words that did not contain a liquid consonant (right half), like ticked-ticket, camps-campus, sport-support, mint-minute.

The left half of Figure 2 shows that while Japanese listeners’ accuracy for CC words that contained a liquid consonant, like blow, prayed, was about 85%, their accuracy for the CVC counterparts, e.g. below, parade, was about 51%, which is at chance (guessing) level. In contrast, the right half of Figure 2 shows that Japanese listeners’ performance on words that did not contain a liquid sound was around 85%, with virtually no difference between CC and CVC words.

Figure 2. Percent correct identification rate for word pairs that contained a liquid consonant, e.g. blow-below, prayed-parade (left half) and word pairs that did not contain a liquid consonant, e.g. ticked-ticket, camp-campus. Credit: Tajima/ Shattuck-Hufnagel

Why was Japanese listeners’ performance poor for words that contained a liquid consonant? One possible explanation is that liquid consonants are acoustically similar to vowel sounds. Compared to other kinds of consonants such as stops, fricatives, and nasals, liquid consonants generally have greater intensity, making them similar to vowels. Liquid consonants also generally have a clear formant structure similar to vowels, i.e. bands of salient energy stemming from resonant properties of the oral cavity.

Because of these similarities, liquid consonants are more confusable with vowels than are other consonant types, and this may have led some listeners to interpret words with vowel + liquid sequences such as below and parade as containing just a liquid consonant without a preceding vowel, thus leading them to misperceive the words as blow and prayed. Given that the first vowel in words such as below and parade is a weak, unstressed vowel, which is short and relatively low in intensity, such misperceptions would be all the more likely.

Another possible explanation for why Japanese listeners were poorer with CVC words than CC word may have to do with the listeners’ familiarity with the target words and their pronunciation. That is, listeners may have felt reluctant to select words which they were not familiar with or did not know how to pronounce. When the Japanese listeners were asked to rate their subjective familiarity with each of the English words used in this study using a 7-point scale, from 1 (not familiar at all) to 7 (very familiar), it turned out that their ratings were higher on average for CC words (4.8) than for CVC words (4.1).

Furthermore, identification accuracy showed a moderate positive correlation (r = 0.45) with familiarity rating, indicating that words that were more familiar to Japanese listeners tended to be more correctly identified. These results suggest that listeners’ performance in the identification task was partly affected by how familiar they were with the English words.

Put together, the present study suggests that Japanese learners of English indeed have difficulty correctly identifying spoken English words that are distinguished by the presence vs. absence of a vowel. From a theoretical standpoint, the results are intriguing because they are not in accord with predictions based on vowel epenthesis, and suggests that detailed properties of the target words affect the results in subtle ways. From a practical standpoint, the results suggest that it would be worthwhile to develop ways to improve learners’ skills in listening to these distinctions.

References

- Tajima, K., Erickson, D., and Nagao, K. (2003). Production of syllable structure in a second language: Factors affecting vowel epenthesis in Japanese-accented English. In Burleson, D., Dillon, C., and Port, R. (eds.), Indiana University Working Papers in Linguistics 4, Speech Prosody and Timing: Dynamic Aspects of Speech. IULC Publications.

- Tajima, K. (2004). Stimuus-related effects on the perception of syllables in second-language speech. Bulletin of the Faculty of Letters, vol. 49, Hosei University.

- Dupoux, E., Kakehi, K., Hirose, Y., Pallier, C., and Mehler, J. (1999). Epenthetic vowels in Japanese: A perceptual illusion? Journal of Experimental Psychology: Human Perception and Performance, 25, 1568-1578.