2aSPa8 and 4aSP6 – Safe and Sound – Using acoustics to improve the safety of autonomous vehicles

Eoin A King – eoking@hartford.edu

Akin Tatoglu

Digno Iglesias

Anthony Matriss

Ethan Wagner

Department of Mechanical Engineering

University of Hartford

200 Bloomfield Avenue

West Hartford, CT 06117

Popular version of papers 2aSPa8 and 4aSP6

Presented Tuesday and Thursday morning, May 14 & 16, 2019

177th ASA Meeting, Louisville, KY

Introduction

In cities across the world, people use and process acoustic alerts every day to interact safely with traffic: drivers listen for emergency sirens from police cars or fire engines, or for a horn warning of an impending collision, while pedestrians listen for cars when crossing a road. A city is full of sounds with meaning, and these sounds make the city a safer place.

Future cities will see the large-scale deployment of (semi-) autonomous vehicles (AVs). AV technology is quickly becoming a reality; however, the manner in which AVs and other vehicles will coexist and communicate with one another is still unclear, especially during the prolonged period in which autonomous and conventional vehicles will share the road. In particular, the manner in which AVs can use acoustic cues to supplement their decision-making process is an area that needs development.

The research presented here aims to identify the meaning behind specific safety-related sounds in a city. We are developing methodologies to recognize and locate acoustic alerts in cities and to use this information to inform the decision-making process of all road users, with particular emphasis on autonomous vehicles. Initially, we aim to define a new set of audio-visual detection and localization tools to identify the location of a rapidly approaching emergency vehicle. In short, we are trying to develop the ‘ears’ to complement the ‘eyes’ already present on autonomous vehicles.

Test Set-Up

For our initial tests, we developed a low-cost array consisting of two linear arrays of 4 MEMS microphones. The array was used in conjunction with a mobile robot equipped with visual sensors, as shown in Picture 1. Our array acquired acoustic signals that were analyzed to i) identify the presence of an emergency siren, and then ii) determine the location of the sound source (which was occasionally behind an obstacle). Initially, our tests were conducted in the controlled setting of an anechoic chamber.

Picture 1: Test Robot with Acoustic Array

Step 1: Using convolutional neural networks for the detection of an emergency siren

Using advanced machine learning techniques, it has become possible to ‘teach’ a machine (or a vehicle) to recognize certain sounds. We trained a deep convolutional neural network (CNN) to recognize emergency sirens in real time, with 99.5% accuracy on test audio signals.

Step 2: Identifying the location of the source of the emergency siren

Once an emergency sound has been detected, it must be rapidly localized. This is a complex task in a city environment, due to moving sources, reflections from buildings, other noise sources, etc. However, by combining acoustic results with information acquired from the visual sensors already present on an autonomous vehicle, it will be possible to identify the location of a sound source. In our research, we modified an existing direction-of-arrival algorithm to report a number of sound source directions, arising from multiple reflections in the environment (i.e. every reflection is recognized as an individual source). These results can be combined with the 3D map of the area acquired from the robot’s visual sensors. A reverse ray tracing approach can then be used to triangulate the likely position of the source.
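To make the idea of a direction of arrival concrete, here is a minimal sketch for the simplest possible case: a single pair of microphones and a distant source. It is not the modified algorithm used in this research, which reports several directions at once. The function names, the 343 m/s speed of sound, and the microphone spacing used in the usage note are all assumptions chosen for illustration. The sketch finds the delay between the two microphone signals by cross-correlation, then converts that delay to an angle using sin(angle) = (speed of sound × delay) / (microphone spacing).

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature


def best_lag(a, b, max_lag):
    """Return the lag in samples by which signal b trails signal a.

    Brute-force cross-correlation: try every lag in [-max_lag, max_lag]
    and keep the one where the two signals line up best.
    """
    best, best_score = 0, float("-inf")
    n = len(a)
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += a[i] * b[j]
        if score > best_score:
            best, best_score = lag, score
    return best


def arrival_angle_deg(lag, sample_rate, mic_spacing):
    """Convert a sample lag to an arrival angle in degrees.

    Assumes a far-field source (plane wave). 0 degrees is broadside to the
    microphone pair; positive angles mean the sound reached microphone a first.
    """
    delay = lag / sample_rate
    # Clamp to [-1, 1] so measurement noise cannot push asin out of its domain.
    s = max(-1.0, min(1.0, SPEED_OF_SOUND * delay / mic_spacing))
    return math.degrees(math.asin(s))
```

As a usage example under these assumptions, a 3-sample delay at a 48 kHz sample rate with microphones 5 cm apart gives `arrival_angle_deg(3, 48000, 0.05)`, an angle between 25 and 26 degrees. A real array with more microphones, reflections, and noise needs a more robust estimator than this two-microphone sketch.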

Picture 2: Example test results. Note that in this test, our array indicates a source at approximately 30° and another at approximately -60°.

Picture 3: Ray Trace Method. By tracing the paths of the estimated angles, both reflected and direct, the approximate source location can be triangulated.
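The ray trace idea can be sketched in two dimensions as follows. This is a simplified illustration under stated assumptions, not the implementation used in this research: the map is reduced to a single flat wall along the hypothetical line x = wall_x, angles are measured counterclockwise from the x-axis of that map, and the function names are invented for the example. One ray follows the direct bearing from the array. The other follows the reflected bearing to the wall, bounces off it, and continues. The source is estimated where the two rays cross.

```python
import math


def unit(angle_deg):
    """Unit vector for a bearing measured counterclockwise from the x-axis."""
    r = math.radians(angle_deg)
    return (math.cos(r), math.sin(r))


def cross(u, v):
    """2-D cross product (z component)."""
    return u[0] * v[1] - u[1] * v[0]


def intersect(p, d, q, e):
    """Point where ray p + t*d meets ray q + s*e, or None if they never meet."""
    denom = cross(d, e)
    if abs(denom) < 1e-12:
        return None  # rays are parallel
    r = (q[0] - p[0], q[1] - p[1])
    t = cross(r, e) / denom
    s = cross(r, d) / denom
    if t < 0 or s < 0:
        return None  # crossing point lies behind one of the ray origins
    return (p[0] + t * d[0], p[1] + t * d[1])


def locate_source(array_pos, direct_deg, reflected_deg, wall_x):
    """Estimate the source position from one direct and one reflected bearing."""
    direct = unit(direct_deg)
    toward_wall = unit(reflected_deg)
    if abs(toward_wall[0]) < 1e-12:
        return None  # reflected bearing runs parallel to the wall
    t = (wall_x - array_pos[0]) / toward_wall[0]
    if t <= 0:
        return None  # reflected bearing points away from the wall
    hit = (wall_x, array_pos[1] + t * toward_wall[1])
    # Reflecting off a vertical wall flips the x component of the direction.
    bounced = (-toward_wall[0], toward_wall[1])
    return intersect(array_pos, direct, hit, bounced)
```

For example, with the array at (0, 0), a wall at x = 4, a direct bearing of about 56.3° and a reflected bearing of about 26.6°, `locate_source` places the source close to (2, 3). In practice the walls would come from the 3D map built by the visual sensors, and noisy bearings would give an approximate region rather than an exact point.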

Video explaining theory.

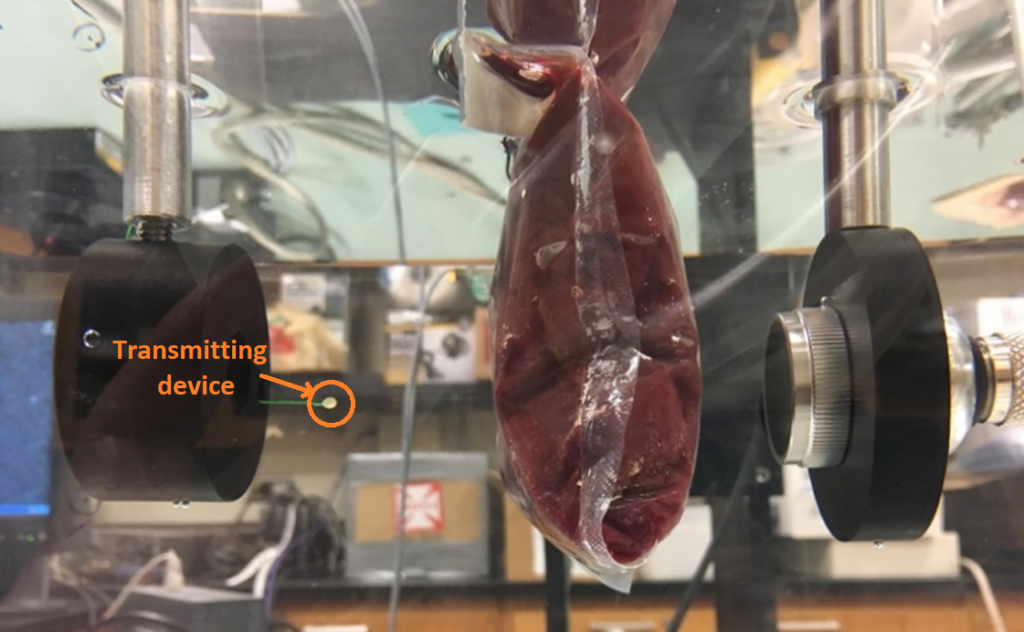

Figure 1: Fabricated prototype of origami folded frequency selective surface made of a folded plastic sheet and copper prints, ready to be tested in an anechoic chamber – a room padded with radio-wave-absorbing foam pyramids.

Figure 1: Fabricated prototype of origami folded frequency selective surface made of a folded plastic sheet and copper prints, ready to be tested in an anechoic chamber – a room padded with radio-wave-absorbing foam pyramids. Introduction

Introduction