Aniruddh D. Patel - apatel@nsi.edu

John R. Iversen

Jason C. Rosenberg

The Neurosciences Institute

10640 John Jay Hopkins Drive

San Diego, CA 92121

http://www.nsi.edu/users/patel

Popular version of paper 5aSC13

Presented Friday morning, November 19, 2004

148th ASA Meeting, San Diego, CA

What makes the music of Sir Edward Elgar sound so distinctively English? (Think

of Pomp and Circumstance, the march which accompanies many graduation

ceremonies.) Similarly, what makes the music of Debussy sound so French? Some

musicologists and linguists have argued that "national character"

in music stems from a relationship between music and speech. Specifically, they

have suggested that instrumental classical music can reflect the melody and

rhythm of a composer's native language (speech "prosody"). Evidence

for this idea, however, has been largely anecdotal. We have addressed this issue

with new methods which allow us to directly compare rhythm and melody in speech

and music. Focusing on British English and French, we find that instrumental

music in these two cultures does indeed reflect the prosody of the national

language. Thus the language we speak may influence the music our culture produces.

Languages differ not only in the words and speech sounds they employ, but also

in their rhythm (temporal and accentual patterning) and in the way voice pitch

rises and falls across phrases and sentences ("intonation," which

refers to speech melody rather than speech rhythm). Linguists have long claimed

that English and French have distinct rhythmic and intonational properties,

but it has been difficult to find quantitative measures which distinguish between

the prosody of the two languages. Fortunately, new methods for studying language

rhythm have recently been proposed, and one such method has proven quite fruitful

for comparing language and music. It is best illustrated by way of an example.

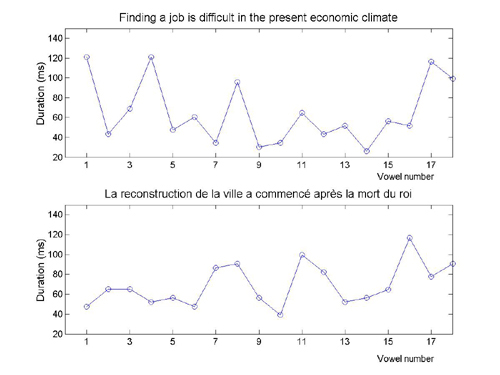

Consider Figure 1, which shows the pattern of vowel duration in a sentence of

British English and a sentence of continental French. These sentences can be

heard in Sound Examples 1 and 2.

The sentences in Figure 1 are ordinary news-like utterances read by native

speakers of each language. Each panel of the figure shows the duration of successive

vowels in the corresponding sentence (in milliseconds). Thus in the top panel,

the first data point represents the duration of the first vowel in the word

"Finding," which is about 120 milliseconds (ms) long; the second point

is the duration of the second vowel in "finding," which is about 40

ms long, and so on. The key thing to notice is that adjacent vowels in the English

sentence tend to differ more in duration than do adjacent vowels in the French

sentence. That is, in the English sentence it is quite common for a relatively

long vowel to be followed by a relatively short one (or vice versa), while in

the French sentence the duration of neighboring vowels tends to be more similar.

Speech researchers recently developed a way to quantify the average degree of

durational contrast between neighboring vowels in a sentence, called the "normalized

Pairwise Variability Index" or nPVI [2,3]. It turns out that this value is

significantly higher, on average, in British English than in French sentences [4]. This

likely reflects the greater durational disparities in English than in French

between vowels in stressed and unstressed syllables [5].
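To make the measure concrete, here is a minimal Python sketch of the nPVI as it is usually defined in the speech-rhythm literature: for each pair of neighboring durations, take the absolute difference divided by the pair's mean, average these values over all pairs, and multiply by 100. The function name and the duration values below are illustrative assumptions, not the data plotted in Figure 1.

```python
def npvi(durations):
    """Normalized Pairwise Variability Index of a sequence of durations.

    Each neighboring pair contributes |a - b| divided by the pair's mean,
    so the result does not depend on overall speaking rate or tempo.
    """
    if len(durations) < 2:
        raise ValueError("nPVI needs at least two durations")
    total = 0.0
    for a, b in zip(durations, durations[1:]):
        total += abs(a - b) / ((a + b) / 2.0)
    return 100.0 * total / (len(durations) - 1)


# Hypothetical vowel durations in milliseconds (made up for illustration).
alternating = [120, 40, 110, 50, 130, 45]   # long-short contrasts
even = [80, 75, 85, 80, 78, 82]             # similar neighbors

print(round(npvi(alternating), 1), round(npvi(even), 1))
```

A sequence that alternates between long and short values yields a high nPVI, while a sequence of similar durations yields a value near zero. The same function works on note durations in a musical theme, since it only needs a list of numbers.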

Since the nPVI can be applied to any sequence of durations, it can also be applied

to a sequence of musical notes, such as a theme from a symphony by Elgar or

from a piece by Debussy. In a previous study, we applied the nPVI to the instrumental

music of 16 British and French composers [6], analyzing several hundred themes

from a well-known musicological sourcebook [7]. We focused on composers who lived

and worked around the turn of the 20th century, as this is considered a period

of musical nationalism. We found that the nPVI of English music was significantly

higher, on average, than that of French music. In less formal terms, English

music rhythmically "swings" more than French music, just as English

speech rhythmically swings more than French speech.

Having found a parallel between rhythm in speech and music, we recently turned

our attention to melodic issues. Using the same databases of speech and music,

we asked if there was a difference between English and French intonation which

was reflected in the music of the two nations. When studying rhythm, we had

the benefit of an existing quantitative measure (the nPVI) which differentiated

between English and French speech and which could be applied to music in a straightforward

way. In the case of intonation, no such measure was available. Thus we devised

a novel way of quantifying melodic patterns in speech, using a method which

could be adapted to music.

Although the physical manifestation of melody in speech - the fundamental frequency

of the voice - moves by continuous glides rather than by discrete frequency

steps, some speech scientists have suggested that speech melody is perceived

as a sequence of distinct syllable pitches [8]. We used a simplified

method to compute each syllable's perceived pitch, based on the mean fundamental

frequency of each vowel, since vowels form the core of syllables. Thus for each

sentence in our database, we converted the continuous fundamental frequency

contour of the sentence into a sequence of discrete frequencies which we call

"vowel pitches." (Note that these pitches do not conform to any musical

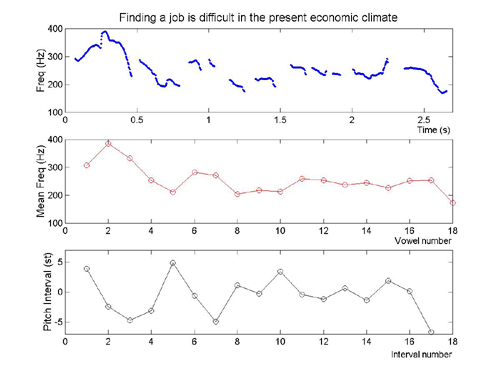

scale.) The top panel of Figure 2 shows the original fundamental frequency contour

of the English sentence of Figure 1 and Sound Example

1. The middle panels shows the sequence of vowel pitches for this sentence:

i.e. the pitch of the voice goes up from the first to the second vowel of "Finding,"

and then goes down for the next three vowels in "a job is," and so

on.

Just as one can compute pitch intervals between notes in a musical melody,

one can also compute pitch intervals between successive vowel pitches in a sentence.

The bottom panel of Figure 2 shows the intervals between the successive vowel

pitches of the example sentence, in units of semitones. (A semitone is the pitch

distance between an adjacent black and white key on a piano.) Note how the interval

between the first and the second vowel of "Finding" is an upward leap

of about 4 semitones, while the interval between the second vowel of "Finding"

and the following vowel ("a") is a downward step of about 2.5 semitones,

and so on. Unlike on a piano, vowel pitch intervals are not constrained to whole numbers

of semitones: these intervals can fall between the cracks of standard Western

musical intervals.

References

1. Nazzi, T., Bertoncini, J., & Mehler, J. (1998). Language discrimination in newborns: Toward an understanding of the role of rhythm. Journal of Experimental Psychology: Human Perception and Performance, 24(3), 756-777.

2. Low, E.L., Grabe, E., & Nolan, F. (2000). Quantitative characterizations of speech rhythm: Syllable-timing in Singapore English. Language & Speech, 43(4), 377-401.

3. Grabe, E., & Low, E.L. (2002). Durational variability in speech and the rhythm class hypothesis. In C. Gussenhoven & N. Warner (Eds.), Laboratory Phonology 7 (pp. 515-546). Berlin: Mouton de Gruyter.

4. Ramus, F. (2002). Acoustic correlates of linguistic rhythm: Perspectives. In Proceedings of Speech Prosody, Aix-en-Provence, 115-120.

5. Dauer, R.M. (1983). Stress-timing and syllable-timing reanalyzed. Journal of Phonetics, 11, 51-62.

6. Patel, A.D., & Daniele, J. (2003). An empirical comparison of rhythm in language and music. Cognition, 87, B35-B45.

7. Barlow, H., & Morgenstern, S. (1983). A Dictionary of Musical Themes, Revised Edition. London: Faber & Faber.

8. d'Alessandro, C., & Mertens, P. (1995). Automatic pitch contour stylization using a model of tonal perception. Computer Speech and Language, 9(3), 257-288.

Figure 2. The speech melody of an English sentence. The top panel

shows the original fundamental frequency contour of the voice, the middle panel

shows successive vowel pitch values, and the bottom panel shows vowel pitch

intervals (in semitones). The sentence can be heard in Sound

Example 1.
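The two lower panels of Figure 2 can be approximated with a short Python sketch. It assumes that a vowel's pitch is the plain arithmetic mean of its fundamental frequency samples in hertz, and it uses the standard conversion of a frequency ratio to semitones (12 times the base-2 logarithm of the ratio). The function names and frequency values are hypothetical and are not taken from our data.

```python
import math


def vowel_pitch(f0_samples_hz):
    """Mean fundamental frequency (Hz) over the samples of one vowel.

    Assumption: the mean is taken directly in Hz.
    """
    return sum(f0_samples_hz) / len(f0_samples_hz)


def semitone_intervals(pitches_hz):
    """Signed intervals, in semitones, between successive pitches.

    Positive values are upward movements, negative values downward ones.
    """
    return [12.0 * math.log2(b / a) for a, b in zip(pitches_hz, pitches_hz[1:])]


# Hypothetical f0 samples (Hz) for three successive vowels.
vowels = [[198.0, 200.0, 202.0], [250.0, 252.0, 254.0], [217.0, 218.0, 219.0]]
pitches = [vowel_pitch(v) for v in vowels]

print([round(x, 2) for x in semitone_intervals(pitches)])
```

Because the intervals come from a logarithm of a frequency ratio, they are generally not whole numbers, which is why vowel pitch intervals can fall between standard Western musical intervals.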

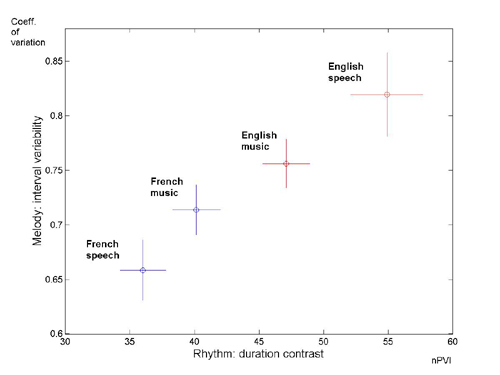

We quantified the pattern of vowel pitch intervals for each sentence in our

database, and found that the average vowel-to-vowel pitch movement was about

the same size in English and French sentences (about 2 semitones). However,

the intervals in French sentences were significantly less variable in size than

in English sentences. Put another way, as the voice moves from one vowel to

the next in a sentence, the size of each pitch change is more uniform in French

than in English speech.
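The variability measure named in the caption of Figure 3, the coefficient of variation of absolute interval size, can be sketched in Python as follows. The sketch assumes the sample standard deviation (the population form would be an equally plausible reading), and the interval values are invented to show two sequences with the same average size but different variability.

```python
import statistics


def interval_variability(intervals_semitones):
    """Coefficient of variation of absolute interval size.

    Direction is ignored: only the size of each pitch movement counts.
    Assumption: the sample standard deviation is used.
    """
    sizes = [abs(x) for x in intervals_semitones]
    mean_size = statistics.mean(sizes)
    if mean_size == 0:
        raise ValueError("all intervals are zero; variability is undefined")
    return statistics.stdev(sizes) / mean_size


# Hypothetical interval sequences (semitones), both averaging 2 semitones.
uniform = [2.0, -2.0, 2.0, -2.0, 2.0]
varied = [4.0, -0.5, 1.0, -3.5, 1.0]

print(interval_variability(uniform), round(interval_variability(varied), 2))
```

Both sequences have the same mean interval size, yet the second scores much higher, which mirrors the pattern described above: similar average pitch movement in the two languages, but more uniform interval sizes in French.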

To see if this difference between the two languages was reflected in music,

we turned to our database of turn-of-the-century composers, and examined the

same set of musical themes which we had previously analyzed for rhythm. For

each theme we measured the pitch intervals between successive notes (in semitones),

and then computed the variability of these intervals, just as we had done for

speech. Our findings paralleled what we found in speech: interval sizes in French

themes were significantly less variable than in English themes. As the melody

moves from one note to the next in musical themes, the size of each pitch change

is more uniform in French than in English music.

Our results are shown in Figure 3, which combines our findings on rhythm and

melody in speech and music. The x-axis shows rhythm (the degree of durational

contrast between vowels in sentences or notes in musical themes), while the

y-axis shows melody (pitch interval variability between vowels or notes).

Plotting rhythm and melody together reveals a striking pattern, suggesting

that a nation's language exerts a "gravitational pull" on the structure

of its music.

Supported by Neurosciences Research Foundation as part of its

program on music and the brain at The Neurosciences Institute, where ADP is

the Esther J. Burnham Fellow and JRI is the Karp Foundation Fellow. We thank

J. Burton, B. Repp, J. Saffran & B. Stein for comments.

Figure 3. Rhythm and melody in British English and French speech

and music. The x-axis shows the degree of durational contrast between successive

vowels/notes in sentences/musical themes (measured using the nPVI), while the

y-axis shows the variability in the size of pitch movements between successive

vowels/notes in sentences/themes (measured using the coefficient of variation

of absolute interval size). English data are shown in red and French data in

blue. Circles represent mean values for speech or music, and lines are standard

errors. English composers studied were: Bax, Delius, Elgar, Holst, Ireland,

and Vaughan Williams. French composers studied were: Debussy, D'Indy, Fauré,

Honegger, Ibert, Milhaud, Poulenc, Ravel, Roussel, and Saint-Saëns.