Ingo Hertrich - ingo.hertrich@uni-tuebingen.de

Hermann Ackermann

Department of General Neurology

Hertie Institute for Clinical Brain Research

University of Tuebingen, Hoppe-Seyler-Str. 3

Tuebingen, Germany, D-72076

Popular version of paper 1pSC7

Presented Monday afternoon, June 4, 2007

153rd ASA Meeting, Salt Lake City, UT

Our brain maps incoming acoustic speech signals onto sound- and language-based representations at rather early stages of central-auditory processing, even when attention is not directed toward the auditory channel [1,2]. This indicates a fast and highly automatized mechanism for encoding speech features. Furthermore, it is well established that our perceptual system integrates information across different sensory modalities. Examples are cross-modal illusions such as the McGurk effect: an acoustic /ba/ paired with a video displaying articulation of /ga/ is perceived as /da/. The temporal window within which the two channels are integrated spans more than 150 ms, as indicated by the stability of cross-modal interactions when one stimulus channel is time-shifted against the other [3]. Given fast pre-attentive categorization on the one hand and a broad cross-modal temporal tolerance on the other, at least two successive stages of speech encoding must be postulated before a percept arises: fast sensory-specific feature detection followed by cross-modal sensory memory operations.

The time course of these processes can be investigated with high temporal resolution by means of magnetoencephalography (MEG). Any acoustic event evokes an MEG response comprising two characteristic peaks, one at about 50 ms (M50 magnetic field) and one of inverse polarity at about 100 ms (M100). The sources of this activity have been localized within the cortical auditory system [4]. The present whole-head MEG study investigated cross-modal interactions during early phases of audiovisual (AV) speech perception by examining the impact of visual stimulation on the auditorily evoked M50 and M100 fields. To address the question of speech-specific visual effects, the experiment included all four combinations of auditory and visual speech and non-speech conditions. The acoustic speech stimulus was an ambiguous syllable perceived as /pa/ or /ta/, depending on whether it was paired with a video displaying articulation of the respective syllable. The visual articulatory cues preceded the acoustic signal by approximately 150 ms, since lip closure for /p/ occurs before the acoustic burst of the stop consonant. The acoustic and visual non-speech control conditions were complex tone signals and concentrically moving circles, respectively; the circles made large or small movements, analogous to the size of lip excursions during /pa/ and /ta/ articulation.

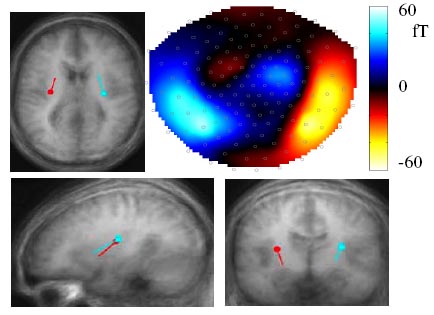

Applying a six-dipole model (three dipoles in each hemisphere) of the underlying electrical activity in order to estimate the sources of the evoked MEG responses, we could separate auditory M50/M100 activity (A110, peaking 110 ms after acoustic signal onset) from early (V170, peaking 170 ms after motion onset) and later visual activations (V270, approximately coinciding with A110). To identify the relevant brain regions, these dipoles were superimposed upon an anatomical magnetic resonance image of the brain averaged across 17 subjects. As expected, the auditory dipole was located within the auditory cortex. The V170 dipole could be attributed to a medial occipital part of the visual system, whereas the V270 source was fitted, on the basis of minimizing residual variance, to the region of the posterior insula (Fig. 1).

Figure 1. MEG brain map (upper right panel) of the V270 field, together with the anatomical locations of the dipole sources (group-average data). MRI slices correspond to the left-hemisphere dipoles.
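The statement that V270 approximately coincides with A110 follows from the stimulus timing: the visual latencies are counted from motion onset, the auditory latency from acoustic onset. A minimal sketch of this conversion, assuming a fixed visual lead of 150 ms (the text gives this value only approximately, so actual lags may vary); the constant and function names are hypothetical and not part of the study's analysis software:

```python
# Hypothetical constant: visual motion onset leads the acoustic signal by ~150 ms
# (approximate value from the text; ASSUMED fixed here for illustration).
VISUAL_LEAD_MS = 150


def latency_re_acoustic_onset(latency_re_motion_ms: float,
                              lead_ms: float = VISUAL_LEAD_MS) -> float:
    """Re-express a latency measured from motion onset relative to acoustic onset."""
    return latency_re_motion_ms - lead_ms


# Peak latencies of the dipole sources as named in the text (ms).
v170 = latency_re_acoustic_onset(170)  # early visual source, re acoustic onset
v270 = latency_re_acoustic_onset(270)  # later visual source, re acoustic onset
a110 = 110                             # auditory source, already re acoustic onset

print(f"V170 peaks ~{v170:.0f} ms after acoustic onset")
print(f"V270 peaks ~{v270:.0f} ms after acoustic onset (A110 peaks at {a110} ms)")
```

Under these assumptions, V270 falls about 120 ms after acoustic onset, i.e., within the time window of the auditory M100, which is why its activity overlaps with A110.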

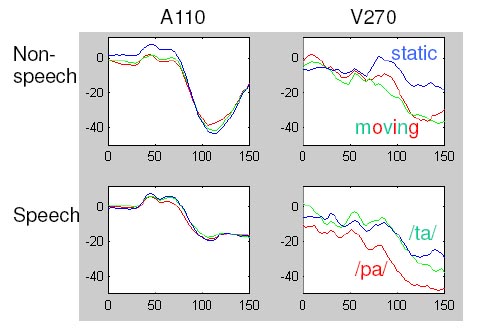

As concerns the M100 field, visual non-speech stimuli attenuated the strength of the auditory dipole, an effect that depended on the presence of an acoustic signal (upper left panel of Figure 2). By contrast, visual speech gave rise to a hypoadditive M100 enhancement: in the absence of an acoustic signal, visual speech motion evoked a response resembling the auditory M100 (not shown in a figure), whereas with audiovisual stimuli the M100 was not enhanced by the visual channel (lower left panel of Figure 2). Audiovisual interactions at the level of the cortical auditory system thus appear to be speech-unspecific up to a latency of about 50 ms after the onset of the acoustic speech signal, whereas at a latency of 100 ms the responses to speech and non-speech visual motion cues differ markedly.
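The term "hypoadditive" can be stated compactly by comparing the audiovisual response with the sum of the two unimodal responses. Writing $R_A$, $R_V$, and $R_{AV}$ for the source strength in the M100 window under auditory-only, visual-only, and audiovisual stimulation (notation introduced here for illustration, not taken from the study):

```latex
\[
R_{AV} < R_{A} + R_{V} \quad \text{(hypoadditive)},
\qquad
R_{AV} = R_{A} + R_{V} \quad \text{(additive)}
\]
```

For visual speech, $R_V > 0$ (an M100-like response to lip motion alone), while $R_{AV} \approx R_A$ (no visual enhancement when sound is present), so the audiovisual response falls short of the unimodal sum.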

Considering the visually evoked response components outside the auditory system, modeled by the V270 dipoles, small and large visual non-speech motion stimuli had an influence similar to that of visual /pa/, whereas the magnetic response to visual /ta/ seemed to be selectively suppressed (right panels of Figure 2). This all-or-nothing effect can be interpreted as a kind of threshold mechanism transforming the visual signal into a binary code. The fact that visual /ta/ did not pass the threshold, although the lip motion was clearly visible, might be related to the phonological status of the tongue-tip as opposed to the lip place of articulation. In phonological models, the 'coronal' place of articulation, i.e., the articulatory feature of speech sounds produced with the front part of the tongue (such as /d/, /t/, or /n/), has been considered 'unmarked' and 'underspecified,' in contrast to labial (e.g., /b/, /p/, or /m/) or tongue-dorsal articulation (/g/, /k/, or /ng/) [7]. In other words, the coronal feature does not require explicit specification but can be set as a default. Thus, the observed quasi-categorical suppression of responses to visual /ta/ might indicate that visual speech information corresponding to underspecified phonological (sound-based) features remains below threshold. Since the magnetic source of this effect was located within the insular cortex rather than the auditory system, we may assume that at the time of the M100 the visual phonetic information, although transformed into a binary code, has not yet been integrated into an auditory/phonetic representation.

References

[1] Näätänen, R., Lehtokoski, A., Lennes, M., Cheour, M., Huotilainen, M., Iivonen, A., Vainio, M., Alku, P., Ilmoniemi, R.J., Luuk, A., Allik, J., Sinkkonen, J., Alho, K. (1997). Language-specific phoneme representations revealed by electric and magnetic brain responses. Nature, 385, 432-434.

[2] Winkler, I., Lehtokoski, A., Alku, P., Vainio, M., Czigler, I., Csépe, V., Aaltonen, O., Raimo, I., Alho, K., Lang, H., Iivonen, A., Näätänen, R. (1999). Pre-attentive detection of vowel contrasts utilizes both phonetic and auditory memory representations. Cogn. Brain Res., 7, 357-369.

[3] Munhall, K.G., Gribble, P., Sacco, L., Ward, M. (1996). Temporal constraints on the McGurk effect. Percept. Psychophys., 58, 351-362.

[4] Liégeois-Chauvel, C., Musolino, A., Badier, J.M., Marquis, P., Chauvel, P. (1994). Evoked potentials recorded from the auditory cortex in man: evaluation and topography of the middle latency components. Electroencephalogr. Clin. Neurophysiol., 92, 204-214.

[5] Lebib, R., Papo, D., de Bode, S., Baudonnière, P.M. (2003). Evidence of a visual-to-auditory cross-modal sensory gating phenomenon as reflected by the human P50 event-related brain potential modulation. Neurosci. Lett., 341, 185-188.

[6] Driver, J., Frith, C. (2000). Shifting baselines in attention research. Nat. Rev. Neurosci., 1, 147-148.

[7] Avery, P., Rice, K. (1989). Segment structure and coronal underspecification. Phonology, 6, 179-200.