Learning from How Our Ear Processes Complex Sounds to Design an Acoustical Vehicle Classifier

Geok Lian Oh - ogeoklia@dso.org.sg

DSO National Laboratories

20 Science Park Drive

Singapore 118230

Popular version of paper 1pSPa2

Presented Monday afternoon, June 4, 2007

153rd ASA Meeting, Salt Lake City, Utah

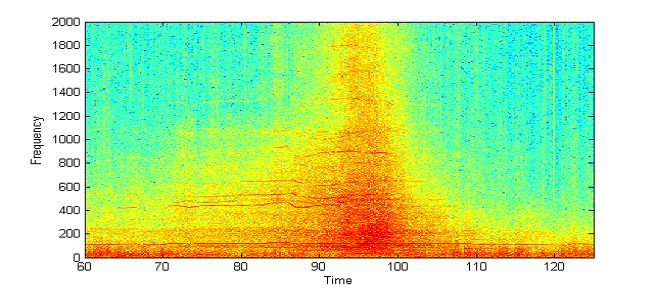

The spectral content of the sound emitted by a moving vehicle contains wideband processes (processes with energy spread over a wide frequency range) and harmonics (components at integer multiples of the fundamental frequency). The fundamental frequency is what we perceive as the pitch of the sound. These characteristics of vehicle sounds are easily captured in a time-frequency graph, which displays the frequency content of short sections of the signal as it changes over time; see Figure 1. The harmonic patterns are useful signatures that are often used to fingerprint vehicle types.

Figure 1: Time-frequency graph of the acoustic signal received by a microphone as a vehicle drives past [1]
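A time-frequency graph like Figure 1 is usually computed as a spectrogram: the signal is cut into short overlapping frames, each frame is windowed, and the magnitude of its Fourier transform is taken. The Python sketch below (NumPy only) shows the idea on a synthetic signal. The harmonic "vehicle" with a 32 Hz fundamental, the noise level, the 4096 Hz sampling rate and the frame settings are illustrative assumptions, not the data behind Figure 1.

```python
import numpy as np


def spectrogram(x, fs, frame_len=1024, hop=512):
    """Magnitude short-time Fourier transform.

    Returns frame centre times (s), bin frequencies (Hz) and a
    (frames x bins) array of magnitudes."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(x) - frame_len) // hop
    frames = np.stack([x[i * hop:i * hop + frame_len] * window
                       for i in range(n_frames)])
    mags = np.abs(np.fft.rfft(frames, axis=1))
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / fs)
    times = (np.arange(n_frames) * hop + frame_len / 2) / fs
    return times, freqs, mags


# Hypothetical "vehicle": fundamental f0 plus 7 higher harmonics (amplitude 1/k),
# on top of wideband noise. All values are illustrative assumptions.
fs, f0 = 4096, 32.0
t = np.arange(int(2 * fs)) / fs                      # 2 s of signal
rng = np.random.default_rng(0)
x = sum(np.cos(2 * np.pi * k * f0 * t) / k for k in range(1, 9))
x = x + 0.1 * rng.standard_normal(len(t))           # wideband component

times, freqs, S = spectrogram(x, fs)
mean_spec = S.mean(axis=0)                           # average over all frames
print("strongest bin:", freqs[np.argmax(mean_spec)], "Hz")
```

Each row of `S` is one vertical slice of the time-frequency graph; averaging the rows shows the harmonic lines at multiples of 32 Hz standing out above the wideband noise floor. Shorter frames give finer time resolution at the cost of coarser frequency resolution.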

Several methods have been proposed in the literature to extract the harmonic patterns for classifying vehicles. One method extracts the positions of the harmonic lines where dominant energies occur and uses them as features for classification. However, this method suffers from "too-fine" resolution, which makes the features vary whenever the harmonic lines shift. For example, the pitch of the sound emitted by a vehicle rises when the driver speeds up, and every harmonic line moves with it. Another method tries to overcome this problem by using the energies of the vehicle sound in frequency bands as features for identification, but it in turn suffers from "too-coarse" spectral fingerprinting. Each of these traditional approaches thus has one disadvantage or another when extracting features for vehicle identification.
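The trade-off between these two traditional feature types can be illustrated with a small Python sketch. It builds a hypothetical harmonic vehicle sound with a fixed spectral envelope, then "speeds up" the engine by 10% (fundamental 30 Hz to 33 Hz). The helper names, the envelope exp(-f/400), the sampling rate and the band edges are assumptions chosen for illustration only.

```python
import numpy as np

FS = 8000  # sampling rate (Hz), illustrative assumption


def vehicle_tone(f0, fs=FS, duration=1.0, f_max=2000.0):
    """Hypothetical vehicle sound: harmonics of f0 below f_max, shaped by a
    smooth spectral envelope exp(-f/400) that does not depend on speed."""
    t = np.arange(int(fs * duration)) / fs
    return sum(np.exp(-k * f0 / 400.0) * np.cos(2 * np.pi * k * f0 * t)
               for k in range(1, int(np.ceil(f_max / f0))))


def power_spectrum(x, fs=FS):
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return freqs, power


def peak_positions(x, n_peaks=3):
    """'Too-fine' features: frequencies of the n strongest spectral lines."""
    freqs, power = power_spectrum(x)
    return np.sort(freqs[np.argsort(power)[-n_peaks:]])


def band_energies(x, edges=(0, 250, 500, 1000, 2000)):
    """'Too-coarse' features: fraction of total energy in each wide band."""
    freqs, power = power_spectrum(x)
    bands = [power[(freqs >= lo) & (freqs < hi)].sum()
             for lo, hi in zip(edges[:-1], edges[1:])]
    return np.array(bands) / power.sum()


slow, fast = vehicle_tone(30.0), vehicle_tone(33.0)   # same vehicle, 10% faster
peaks_slow, peaks_fast = peak_positions(slow), peak_positions(fast)
bands_slow, bands_fast = band_energies(slow), band_energies(fast)
print("peak positions:", peaks_slow, "->", peaks_fast)
print("band energies: ", bands_slow.round(3), "->", bands_fast.round(3))
```

The peak-position features all move by 10%, so the same vehicle looks different at a different speed. The band-energy fractions change only slightly (by well under 0.05 in each band here), but for the same reason they say very little about where the harmonic lines actually are, which is the "too-coarse" problem.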

We decided that we could learn from the way our ears process the information carried by sounds. In their papers, Dau et al. [2, 3] proposed a model that describes the "effective" signal processing taking place in our auditory system. The model splits the incoming sound into different frequency bands, simulating the frequency-to-place transformation on the basilar membrane, the part of the inner ear that vibrates in response to sound waves. The basilar membrane has limited resolution at higher frequencies; that is, several higher-frequency harmonic components fall within one frequency channel and are processed together. They stimulate the same group of hair cells and are not separated spectrally within the auditory system. How, then, do our ears detect the presence of harmonics within these coarse frequency bands? It turns out that two adjacent components of a harmonic sound that fall within the same frequency band interact to produce a form of amplitude modulation (see the footnote for additional explanation). Because adjacent harmonics are spaced exactly one fundamental frequency apart, the envelope fluctuates at a rate equal to the fundamental frequency of the harmonic sound. Our ears thus overcome the poor spectral resolution in the higher-frequency bands by detecting the temporal interaction between the spectrally unresolved components. Dau et al. also introduced a linear filterbank (a modulation filterbank) that models how our ears further analyze these amplitude modulations by separating them according to their rate of change, with each filter passing a single band of modulation frequencies.
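The beating effect described above is easy to reproduce numerically. The Python sketch below adds two adjacent harmonics (the 20th and 21st) of a hypothetical 100 Hz fundamental. They lie at 2000 Hz and 2100 Hz, well within one auditory filter bandwidth around 2 kHz. As a simplified stand-in for the hair-cell stage, the signal is half-wave rectified and only its low-frequency spectrum (10 to 500 Hz) is inspected, which acts as an ideal low-pass filter. The function name and all numeric values are assumptions; this illustrates the principle and is not the model of Dau et al.

```python
import numpy as np


def envelope_spectrum(x, fs):
    """Crude envelope detector: half-wave rectify, then return the magnitude
    spectrum so that only its low-frequency part need be inspected."""
    rectified = np.maximum(x, 0.0)
    rectified -= rectified.mean()                 # drop the DC term
    mags = np.abs(np.fft.rfft(rectified)) / len(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return freqs, mags


fs = 16000                      # sampling rate (Hz), illustrative
f0 = 100.0                      # hypothetical fundamental frequency (Hz)
t = np.arange(int(0.5 * fs)) / fs

# Harmonics 20 and 21 (2000 Hz and 2100 Hz) are assumed to share one
# auditory channel, so they are not resolved spectrally.
pair = np.cos(2 * np.pi * 20 * f0 * t) + np.cos(2 * np.pi * 21 * f0 * t)

freqs, mags = envelope_spectrum(pair, fs)
band = (freqs > 10) & (freqs < 500)             # assumed modulation range
am_rate = freqs[band][np.argmax(mags[band])]
print(f"envelope fluctuates at {am_rate:.0f} Hz (fundamental = {f0:.0f} Hz)")
```

The strongest envelope component appears at the fundamental, even though neither input component is at 100 Hz. A single 2000 Hz component on its own produces a flat envelope and no such peak.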

Taking our cue from the auditory processing described above, we propose the following method to extract features for classifying vehicles. The incoming sound is first passed through a basilar-membrane filtering model, and the amplitude modulations of the filtered sound are then detected. A further filtering stage keeps only those detected amplitude modulations whose rates fall within a frequency band specific to vehicle sounds. We call the features extracted by this method modulation spectral features. They have two advantages. First, because the features are extracted within frequency bands of finite bandwidth, they are less sensitive to the pitch changes caused by vehicles traveling at different speeds. Second, the harmonic pattern is encoded in the feature vector simply through detection of the frequency of the amplitude modulation.
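The three stages just described can be sketched in Python as a toy pipeline. This is not the authors' implementation, and every stage is a simplifying assumption: the basilar membrane is replaced by ideal rectangular band-pass filters one equivalent rectangular bandwidth (ERB, Glasberg and Moore formula) wide at three hypothetical centre frequencies; amplitude modulations are detected by half-wave rectification; and the "vehicle-specific" modulation band is assumed to be 10 to 100 Hz, pooled into 15 Hz wide bins. Function names and numeric values are hypothetical. The 7920 Hz sampling rate is chosen as a whole multiple of every test fundamental so that the example has no spectral leakage.

```python
import numpy as np

FS = 7920                            # sampling rate (Hz): whole multiple of 30, 33 and 45
CENTRES = (1000.0, 2000.0, 3000.0)   # hypothetical basilar-membrane channel centres (Hz)
MOD_EDGES = np.arange(10, 101, 15)   # assumed modulation bins 10-25, 25-40, ..., 85-100 Hz


def erb(fc):
    """Equivalent rectangular bandwidth (Hz) of the auditory filter at fc (Hz)."""
    return 24.7 * (4.37 * fc / 1000.0 + 1.0)


def vehicle_tone(f0, duration=1.0, f_max=3500.0):
    """Hypothetical vehicle sound: all harmonics of f0 below f_max, smooth envelope."""
    t = np.arange(int(FS * duration)) / FS
    return sum(np.exp(-k * f0 / 1000.0) * np.cos(2 * np.pi * k * f0 * t)
               for k in range(1, int(f_max // f0) + 1))


def modulation_features(x):
    """(channels x modulation bins) array of normalised modulation energies."""
    freqs = np.fft.rfftfreq(len(x), d=1.0 / FS)
    spectrum = np.fft.rfft(x)
    rows = []
    for fc in CENTRES:
        # 1. "Basilar membrane": ideal band-pass one ERB wide around fc
        #    (a crude stand-in for a gammatone-type auditory filter).
        passband = np.abs(freqs - fc) < erb(fc) / 2
        channel = np.fft.irfft(spectrum * passband, n=len(x))
        # 2. Amplitude-modulation detection: half-wave rectification.
        envelope = np.maximum(channel, 0.0)
        # 3. Modulation filtering: keep envelope energy between 10 and 100 Hz,
        #    pooled into 15 Hz wide bins of finite bandwidth.
        env_power = np.abs(np.fft.rfft(envelope)) ** 2
        pooled = np.array([env_power[(freqs >= lo) & (freqs < hi)].sum()
                           for lo, hi in zip(MOD_EDGES[:-1], MOD_EDGES[1:])])
        rows.append(pooled / pooled.sum())
    return np.array(rows)


def active_bins(features, threshold=1e-6):
    """Boolean mask of modulation bins that carry non-negligible energy."""
    return features > threshold


slow, fast, other = (modulation_features(vehicle_tone(f0)) for f0 in (30.0, 33.0, 45.0))
print(active_bins(slow).astype(int))
print(np.array_equal(active_bins(slow), active_bins(fast)),
      np.array_equal(active_bins(slow), active_bins(other)))
```

For the hypothetical 30 Hz and 33 Hz vehicles (a 10% speed change), the same modulation bins carry energy in every channel, because the finite 15 Hz bin width absorbs the shift. A vehicle with a 45 Hz fundamental lights up a different set of bins, so the harmonic spacing is still encoded in the features. A fuller model would use smoother auditory and modulation filters; the rectangular ones here only keep the example short and exact.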

Initial classification tests of our approach suggest that we are on the right track. Using approximately 20 sets of vehicle data for each class, we obtained a reasonably good classification rate. In ongoing studies we will test the approach on more sets of vehicle data to explore the robustness of the method. So, to conclude: the next time you are near a road, perhaps you can try using your ears to perform your own classification test!

References

[1] M. F. Duarte and Y. H. Hu, "Vehicle classification in distributed sensor networks," Journal of Parallel and Distributed Computing, Vol. 64, No. 7, pp. 826-838, July 2004.

[2] T. Dau, B. Kollmeier, and A. Kohlrausch, "Modelling auditory processing of amplitude modulations I. Modulation detection and masking with narrowband carriers," J. Acoust. Soc. Am., Vol. 102, pp. 2892-2905, 1997.

[3] T. Dau, B. Kollmeier, and A. Kohlrausch, "Modelling auditory processing of amplitude modulations II. Spectral and temporal integration in modulation detection," J. Acoust. Soc. Am., Vol. 102, pp. 2906-2919, 1997.

Footnote:

Amplitude modulations are the fluctuations of the envelope of a sound over time; their rate is the modulation frequency. Here one needs to be careful to distinguish between the fine structure of a sound and its envelope. The fine structure refers to the rapid variations in the instantaneous pressure of the sound wave, whereas the envelope refers to the slower, overall changes in the amplitude of those pressure variations.
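The distinction can be made concrete with the analytic signal, one standard way to split a sound into envelope and fine structure. The NumPy-only Python sketch below builds the analytic signal with the FFT (SciPy users would typically call `scipy.signal.hilbert` for this) and applies it to a hypothetical 1 kHz tone whose amplitude is modulated at 4 Hz. The signal parameters are illustrative assumptions, and this textbook decomposition is not claimed to be the envelope extractor in the model of Dau et al.

```python
import numpy as np


def analytic_signal(x):
    """Analytic signal via the FFT: keep DC (and Nyquist), double positive
    frequencies, zero negative frequencies."""
    n = len(x)
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * weights)


fs = 8000                                          # sampling rate (Hz), illustrative
t = np.arange(fs) / fs                             # 1 s of signal
modulator = 1.0 + 0.5 * np.cos(2 * np.pi * 4 * t)  # hypothetical slow 4 Hz envelope
x = modulator * np.cos(2 * np.pi * 1000 * t)       # 1 kHz carrier

z = analytic_signal(x)
envelope = np.abs(z)                  # slow overall changes in amplitude
fine_structure = np.cos(np.angle(z))  # rapid pressure oscillation, unit amplitude
```

Here `envelope` recovers the slow 4 Hz modulator, while `fine_structure` is the unit-amplitude 1 kHz oscillation; multiplying them gives back the original waveform.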