Echolocation and spatial hearing

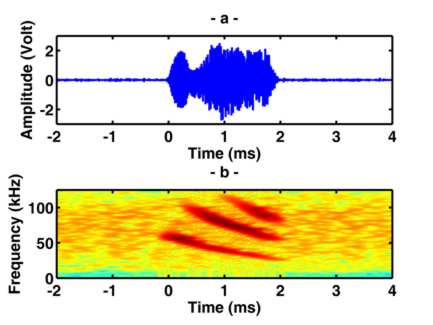

Echolocating bats emit brief ultrasound pulses and listen to the echoes reflected from surrounding objects to navigate and to detect and capture prey. Bats can determine the relative positions of echo sources (objects) in terms of distance and direction. Distance is measured from the time elapsed between the outgoing sound and the returning echo. To determine direction, bats use the acoustic cues in the echo signals received at each ear. The focus of this paper is on how bats extract the directional properties of echoes.
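The distance computation from echo delay is a simple worked formula: the sound travels to the object and back, so the range is half the round-trip path. A minimal sketch, assuming a constant speed of sound of 343 m/s (dry air at about 20 °C); the constant and function name are illustrative and not taken from the study:

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 °C


def echo_range_m(delay_s: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to the reflecting object given the pulse-echo delay.

    The pulse travels to the object and back, so the one-way
    distance is half of the total path length c * delay.
    """
    if delay_s < 0:
        raise ValueError("delay must be non-negative")
    return c * delay_s / 2.0


# An echo arriving 5.8 ms after the pulse comes from roughly 1 m away.
print(round(echo_range_m(5.8e-3), 3))  # prints 0.995
```

Because the speed of sound varies with temperature and humidity, the same delay maps to slightly different ranges under other conditions.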

How do animals localize sound?

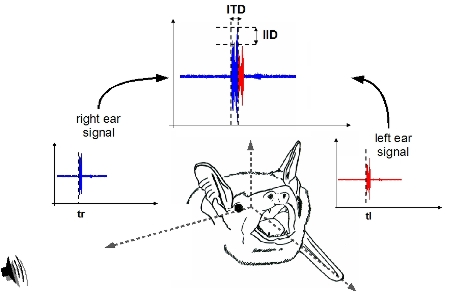

Sounds impinging on the ears are transformed by the shape of the head and ears. External ears, acting like antennas, amplify or attenuate sounds depending on their direction of arrival. These transformations create acoustic cues that the animal’s brain can use to determine the location of a sound source in space. For example, sounds coming from the right reach the right ear earlier and with greater intensity. These interaural differences in signal intensity and arrival time (IID and ITD) decrease as the sound source moves toward the front, then change sign and grow in the opposite direction as the source moves to the left side. Therefore, the auditory system can use IID and ITD as cues for the horizontal position of a sound source. For echolocating bats, IID cues can also be used to localize the vertical direction, provided that the acoustic signals are wideband [6, 5, 1]. Other direction-dependent features, produced by the directional filtering of the head and ears, can be found in the spectrum of the acoustic signal at each ear.
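How ITD varies with horizontal direction can be illustrated with the Woodworth rigid-sphere approximation, a textbook model that is not the measured bat head-related transfer function of [1]. In this sketch the head radius is a hypothetical value and IID is not modeled. It shows the ITD is zero straight ahead, largest at the side, and opposite in sign for the left and right sides, as described above:

```python
import math

SPEED_OF_SOUND_M_S = 343.0
HEAD_RADIUS_M = 0.007  # hypothetical head radius for a small bat, not a measured value


def woodworth_itd_s(azimuth_deg: float, radius_m: float = HEAD_RADIUS_M,
                    c: float = SPEED_OF_SOUND_M_S) -> float:
    """Interaural time difference for a rigid spherical head (Woodworth model).

    Positive azimuths are to the right; the result is positive when the
    sound reaches the right ear first. Valid for |azimuth| <= 90 degrees.
    """
    if abs(azimuth_deg) > 90:
        raise ValueError("model covers the frontal hemisphere only")
    theta = math.radians(azimuth_deg)
    return (radius_m / c) * (theta + math.sin(theta))


for az in (90, 45, 0, -45, -90):
    print(f"{az:+4d} deg -> ITD {woodworth_itd_s(az) * 1e6:+6.1f} us")
```

With a head this small, ITDs stay in the tens of microseconds, which is one reason intensity and spectral cues are important for bats.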

Echolocating bats and active sound localization

Bats share similar computational scheme

for passive sound localization with other mammals. But the echoes they detect partly depend on the spectral and

spatial properties of the

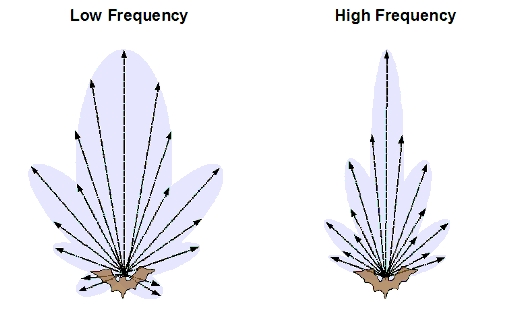

echolocation pulses. Bats ensonify the space in front of them to probe

their environment. Acoustic pulses

generated by bats propagate towards different directions with different

intensities. Most bats use echolocation

pulses comprised of multiple frequencies. Sound beams generated at high

frequency are directionally

more focused, whereas sound beams generated at low frequencies are

directionally more diffused. Low

frequency sounds are not reflected easily from small objects (like

insects) and travel further. In contrast, high

frequency sounds decay faster yet are easily reflected by the small

objects. Information extracted from echoes containing multiple frequencies provides bats high resolution

representation of their environment. Measurements of sonar emission patterns from unrestrained and freely

vocalizing bats show variation in the echolocation beam shape across

individual vocalizations [3]. Consequently, the bat auditory

system needs to dynamically adapt its computations to accurately localize objects using changing acoustic

inputs.
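The link between frequency and beam focus can be illustrated with a baffled circular piston, a standard acoustics approximation for a sound emitter. This is not the measured emission pattern of [3], and the emitter radius is a hypothetical value. For this model, the far-field amplitude is 2·J1(x)/x with x = k·a·sin(θ), and it drops by 3 dB at x ≈ 1.616:

```python
import math

SPEED_OF_SOUND_M_S = 343.0
MOUTH_RADIUS_M = 0.003  # hypothetical emitter radius; bat mouth gape varies

# 2*J1(x)/x falls to 1/sqrt(2) (-3 dB) at x = k*a*sin(theta) of about 1.616.
HALF_POWER_X = 1.616


def half_power_angle_deg(freq_hz: float, radius_m: float = MOUTH_RADIUS_M,
                         c: float = SPEED_OF_SOUND_M_S) -> float:
    """Angle off the beam axis where a baffled circular piston is 3 dB down.

    Returns 90 degrees when k*a is too small for the beam to fall by
    3 dB anywhere in the forward hemisphere (a nearly non-directional source).
    """
    k = 2.0 * math.pi * freq_hz / c  # wavenumber in rad/m
    s = HALF_POWER_X / (k * radius_m)
    return 90.0 if s >= 1.0 else math.degrees(math.asin(s))


for f_khz in (20, 40, 60, 80, 100):
    print(f"{f_khz:3d} kHz -> half-power angle {half_power_angle_deg(f_khz * 1e3):5.1f} deg")
```

Under these assumptions, the low-frequency components of a single pulse spread over the whole frontal hemisphere, while the high-frequency components are confined to a narrow cone. Atmospheric absorption, which also rises with frequency, is not modeled here.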

Statement of the Problem

It is widely accepted that the auditory system computes sound location solely from the acoustic signals received at the ears. Schemes for how the brain might compute sound location are thus mostly limited to identifying acoustic cues that map the signals arriving at the ears to a location in space. Like many other mammals, bats can move their ears, so the acoustic cues for sound localization change with ear movements. To compute the direction of a returning echo accurately, the auditory system must also take ear position into account. Non-auditory signals carrying information about ear position and beam shape are therefore required to localize sound sources. The auditory system must learn associations between these extra-auditory signals (e.g., head and ear position) and the acoustic spatial cues. These demands on the nervous system suggest that sound localization is achieved through the interaction of behavioral control and acoustic inputs.

Standard approaches that rely on acoustic information alone cannot explain the effects of this interaction. Moreover, unlike the approach we propose here, they take an outside observer’s point of view and therefore do not address how the auditory system ever acquires the knowledge of spatial coordinates it needs to use these acoustic cues. We propose a sensorimotor approach to the general problem of sound localization, with an emphasis on learning that allows spatial information to be acquired and refined by a naive organism. Thus, the sensorimotor approach does not require the system to have prior knowledge of space.

A sensorimotor approach for sound localization

Our goal is to show that an organism’s observations of the sensory consequences of its self-generated motions alone contain sufficient information to capture the spatial properties of sounds. The goal is not to find a way to match acoustic inputs to corresponding spatial parameters, but rather to show how the animal could learn the spatial properties of the acoustic inputs. Normal sensory development requires relevant sensory inputs, and self-generated actions are also essential for the development of spatial perception [4] and for adapting to changes that might occur after it is acquired [7]. Under unnatural acoustic conditions, perceived spatiality of sound sources is achieved only if a relation between auditory signals and self-generated motion exists [8].

From an animal’s point of view, the surrounding environment remains stable as the animal moves within it. This requires the ability to distinguish sensory input changes caused by self-generated movements from those caused by changes in the environment. This can be achieved with proprioceptive information (the sense of the relative positions of body parts) plus the ability to predict the sensory consequences of the organism’s actions, that is, sensorimotor expertise. We assert that early sensorimotor experience during development is necessary for accurate sound localization.
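Predicting the sensory consequences of one’s own actions is often formalized as a forward model, and the unpredicted part of a sensory change is then attributed to the environment. The following is a minimal sketch of that idea, not the method of this study. It assumes that, for small movements, sensory changes depend linearly on motor commands. The feature counts, names and data are hypothetical:

```python
import numpy as np

rng = np.random.default_rng(0)
n_sensors, n_motor = 8, 3  # hypothetical: 8 acoustic features, 3 head-rotation axes

# Linear mapping from small head rotations to sensory change (unknown to the organism).
true_map = rng.normal(size=(n_sensors, n_motor))

# "Babbling" phase: random self-generated movements in a static environment.
motor = rng.normal(size=(200, n_motor))
sensed = motor @ true_map.T + 0.01 * rng.normal(size=(200, n_sensors))

# Learn the forward model by least squares: sensed ~= motor @ W.
W, *_ = np.linalg.lstsq(motor, sensed, rcond=None)


def unexplained_change(m, ds):
    """Norm of the sensory change that the forward model does not predict."""
    return float(np.linalg.norm(ds - m @ W))


m = np.array([0.5, -0.2, 0.1])
self_caused = m @ true_map.T                          # change due only to the movement
external = self_caused + rng.normal(size=n_sensors)   # movement plus a change in the world
print(unexplained_change(m, self_caused), unexplained_change(m, external))
```

A small residual marks a change as self-generated; a large residual signals that something in the environment changed.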

The sensorimotor approach [2] is based on three premises. First, we assume that organisms are initially unaware of the spatial properties of sound sources. Second, we limit external sensory information to auditory signals only (e.g., no visual input). Third, we postulate an interaction between the auditory system and the organism’s motor state, that is, proprioception and motor actions. The first two premises may be viewed as the worst-case scenario for sound localization, since they ignore any sound localization mechanisms that might be hardwired in the brain or aided by vision, but they do not significantly constrain the approach. The third premise, in contrast, is crucial to the proposed computational scheme.

Demonstration: Obtaining spatial coordinates by echolocating bats

Our demonstration is a proof of concept. The problem is simplified for clarity, but this does not affect generality. We make three assumptions:

- We assume that an organism can distinguish between auditory and proprioceptive inputs. These inputs can be separated and classified because auditory inputs can change while the organism is motionless and, similarly, proprioceptive inputs can be generated through motion of the body when there is no auditory signal.

- Based on the classification and separability of sensory inputs into these two groups, an organism can identify the dimension of space through its interaction with the environment [10]. Thus, we also assume that the dimension of auditory space is known. Auditory space is two-dimensional, since a sound source direction can be determined by two parameters: horizontal and vertical angles (we ignore the third dimension, distance, to simplify the example).

- We assume that the organism can distinguish the motor actions that can induce a displacement in the direction of a sound source. An algorithm that demonstrates how a naive system can identify these special movements is given in [11].
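Why auditory space has two dimensions can be illustrated by counting how many independent directions of sensory change appear when a source is displaced slightly. This is a toy local-SVD rank estimate in the spirit of [10], not the algorithm of that paper. The smooth direction-to-feature mapping stands in for measured head-related transfer functions and is entirely hypothetical:

```python
import numpy as np

rng = np.random.default_rng(1)
n_features = 16  # hypothetical number of spectral/binaural features

# Hypothetical smooth mapping from a source direction to acoustic features,
# standing in for measured head-related transfer functions.
A = rng.normal(size=(n_features, 3))


def features(az, el):
    """Acoustic feature vector for a source at azimuth az and elevation el (radians)."""
    u = np.array([np.cos(el) * np.cos(az), np.cos(el) * np.sin(az), np.sin(el)])
    return np.tanh(A @ u)


def estimate_dimension(az, el, eps=1e-4, n_samples=50, rel_tol=1e-2):
    """Count independent directions of sensory change around one source position.

    Small random displacements of the source are applied; the number of
    singular values of the resulting feature changes above rel_tol times
    the largest estimates how many parameters the changes depend on.
    """
    base = features(az, el)
    deltas = rng.normal(scale=eps, size=(n_samples, 2))
    changes = np.array([features(az + d[0], el + d[1]) - base for d in deltas])
    s = np.linalg.svd(changes, compute_uv=False)
    return int(np.sum(s > rel_tol * s[0]))


print(estimate_dimension(0.3, 0.2))  # two parameters: azimuth and elevation
```

Although each feature vector has 16 entries, the changes span only a two-dimensional subspace, matching the two angles that actually vary.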

We measured the directional transformations of

sounds due to head and the external ears for big brown

bats. These measurements are used to simulate acoustic signals received

from different directions. Limiting our simulated bat’s movements

to head motions. Simulated sounds with random spectra are

presented from different directions to the artificial bat. When a sound

is present the simulated bat makes three 1o-head movements (up-down, left-to-right, tilt to the right) and records the acoustic inputs

changes in relation to its head movements. These measurements are fed to an unsupervised-learning

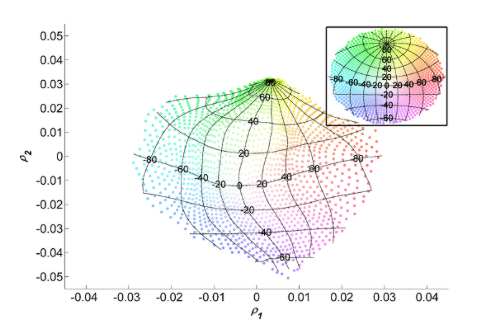

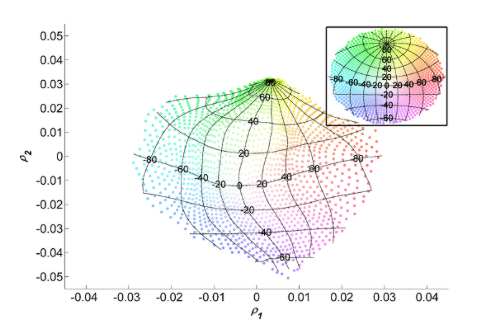

algorithm. The algorithm generates a 2-dimensional representation of the data set, a map (Fig.5). Each point on this

map represents a direction in space. Note that our computational method does not use any

a-priori information about the directions of the sounds – only

the measurements taken by the

simulated bat. Learning of this map implies that our simulated bat can

learn the geometric

relations between the points in space. What identifies a point in space

is the unique relationship

between the acoustic input changes and the known set of head movements

that generate them. Auditory input changes caused by a group of head

movements will be identical even if the sound sources themselves are

not.
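The last point, that input changes caused by a fixed set of head movements do not depend on the source, can be checked in a toy model. The model rests on several assumptions, none of which come from the study’s code or the measured big brown bat data. The ear input is treated as a log-magnitude spectrum in dB, equal to the source spectrum plus a direction-dependent gain. A random smooth gain function stands in for the measured transfer functions. Head coordinates are x forward and z up, with 1° rotations about each axis:

```python
import numpy as np

rng = np.random.default_rng(2)
n_bins = 32  # hypothetical number of frequency bins (both ears concatenated)

# Hypothetical direction-dependent gains in dB, standing in for measured HRTFs.
B = rng.normal(scale=6.0, size=(n_bins, 3))


def hrtf_gain_db(u):
    """Direction-dependent gain (dB) for a unit vector u in head coordinates."""
    return B @ u + 3.0 * np.sin(B @ u / 6.0)


def rotation(axis, deg):
    """Rotation matrix about the x (roll), y (pitch) or z (yaw) axis."""
    c, s = np.cos(np.radians(deg)), np.sin(np.radians(deg))
    i, j = [(1, 2), (2, 0), (0, 1)][axis]
    R = np.eye(3)
    R[i, i], R[i, j], R[j, i], R[j, j] = c, -s, s, c
    return R


# Three 1-degree head movements: pitch (up-down), yaw (left-right), roll (tilt).
MOVES = [rotation(1, 1.0), rotation(2, 1.0), rotation(0, 1.0)]


def received_db(source_db, u, head=None):
    """Log spectrum at the ears: source spectrum plus the gain for the
    source direction expressed in the (possibly rotated) head frame."""
    R = np.eye(3) if head is None else head
    return source_db + hrtf_gain_db(R.T @ u)


def change_signature(source_db, u):
    """Input changes caused by each head movement, stacked into one vector."""
    before = received_db(source_db, u)
    return np.concatenate([received_db(source_db, u, R) - before for R in MOVES])


def direction(az, el):
    """Unit vector for azimuth az and elevation el (radians)."""
    return np.array([np.cos(el) * np.cos(az), np.cos(el) * np.sin(az), np.sin(el)])


u = direction(0.4, 0.2)
sig_a = change_signature(rng.normal(scale=10, size=n_bins), u)  # one random spectrum
sig_b = change_signature(rng.normal(scale=10, size=n_bins), u)  # another random spectrum
print(np.allclose(sig_a, sig_b))  # the source spectrum cancels in the differences
```

In the log domain the source spectrum is additive, so it cancels when the input before a movement is subtracted from the input after it. What remains depends only on direction. Signatures like these could then be passed to an unsupervised learning method to build a map; that step is not shown here.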

Figure 5: Spatial locations of the presented sounds are depicted as color-coded points in the stereographic projection of the frontal hemisphere (inset). The latitude and longitude lines, which indicate horizontal and vertical coordinates, together with the color code, allow comparison between the map obtained with the unsupervised learning method and the spatial coordinates of the sound sources. Note that the points on the map obtained with our computational scheme maintain the same neighborhood relationships as the spatial points in the frontal space of the bat.
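The stereographic projection used for the inset can be sketched as follows. The coordinate conventions are assumptions, since the figure does not state its exact conventions. The sketch uses x forward, y to the right and z up, and projects from the rear pole onto the plane x = 0. Under this projection, a direction at angle θ from straight ahead lands at radius tan(θ/2), so the whole frontal hemisphere fills the unit disk:

```python
import math


def frontal_stereographic(azimuth_deg: float, elevation_deg: float) -> tuple[float, float]:
    """Stereographic projection of a direction in the frontal hemisphere.

    Uses head coordinates with x forward, y to the right and z up, and
    projects from the rear pole (-1, 0, 0) onto the plane x = 0.
    Straight ahead maps to (0, 0); directions 90 degrees off-axis land on
    the unit circle. Returns (horizontal, vertical) plane coordinates.
    """
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    x = math.cos(el) * math.cos(az)
    y = math.cos(el) * math.sin(az)
    z = math.sin(el)
    if x < 0:
        raise ValueError("direction lies behind the frontal hemisphere")
    return (y / (1.0 + x), z / (1.0 + x))


for az, el in ((0, 0), (45, 0), (0, 45), (90, 0)):
    h, v = frontal_stereographic(az, el)
    print(f"az {az:3d}, el {el:3d} -> ({h:.3f}, {v:.3f})")
```

Stereographic projection is conformal, so small neighborhoods keep their shape. This makes it a natural choice for comparing neighborhood relationships between the learned map and the true directions.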

Conclusion

We have proposed a computational method for learning the properties of auditory space using sensorimotor theory [9], addressing a previously unexplored aspect of the sound localization problem. We have argued that a computational theory of sound localization should be able to explain the experience-dependent nature of the computation as well as its dependence on other sensory inputs. The computational method described here provides a framework within which the experience-dependent plasticity and multisensory information processing aspects of sound localization can be integrated. In conclusion, we have shown that a naive organism can learn to localize sound based solely on dynamic acoustic inputs and their relation to proprioceptive (motor) states.

References

[1] M. Aytekin, E. Grassi, M. Sahota, and C. F. Moss. The bat head-related transfer function reveals binaural cues for sound localization in azimuth and elevation. J Acoust Soc Am, 116(6):3594–3605, Dec 2004.

[2] M. Aytekin, J. Z. Simon, and C. F. Moss. A sensorimotor approach to sound localization. Neural Comput (in press).

[3] M. Aytekin. Sound Localization by Echolocating Bats. PhD thesis, University of Maryland, College Park, Maryland, May 2007.

[4] J. Campos, D. Anderson, M. Barbu-Roth, E. Hubbard, M. Hertenstein, and D. Witherington. Travel broadens the mind. Infancy, 1:149–219, 2000.

[5] Z. M. Fuzessery and G. D. Pollak. Neural mechanisms of sound localization in an echolocating bat. Science, 225(4663):725–728, Aug 1984.

[6] A. D. Grinnell and V. S. Grinnell. Neural correlates of vertical localization by echo-locating bats. J Physiol, 181(4):830–851, Dec 1965.

[7] R. Held. Shifts in binaural localization after prolonged exposures to atypical combinations of stimuli. Am J Psychol, 68:526–548, 1955.

[8] J. M. Loomis, C. Hebert, and J. G. Cicinelli. Active localization of virtual sounds. J Acoust Soc Am, 88(4):1757–1764, Oct 1990.

[9] J. K. O’Regan and A. Noë. A sensorimotor account of vision and visual consciousness. Behav Brain Sci, 24(5):939–973; discussion 973–1031, Oct 2001.

[10] D. Philipona, J. K. O’Regan, and J.-P. Nadal. Is there something out there? Inferring space from sensorimotor dependencies. Neural Comput, 15(9):2029–2049, Sep 2003.

[11] D. Philipona, J. K. O’Regan, J.-P. Nadal, and O. J.-M. D. Coenen. Perception of the structure of the physical world using multimodal unknown sensors and effectors. Advances in Neural Information Processing Systems, 15, 2004.
