Why do Hearing-impaired Listeners Not (always) Enjoy Cocktail Parties?

Christophe Micheyl - cmicheyl@umn.edu

Andrew J. Oxenham - oxenham@umn.edu

Department of Psychology

University of Minnesota

Minneapolis, MN 55, USA.

Popular version of paper 4aPP2

Presented Thursday morning, April 22, 2010

159th ASA Meeting, Baltimore, Maryland

Imagine yourself at a cocktail party. You can hear chatter, glasses clinking, and soft ambient music. But try as you might to focus on what the person next to you is saying, their voice is extremely difficult to separate from the other voices and sounds. Even piecing together a few snippets of coherent speech from this jumble of sounds requires sustained attention and tremendous effort. This is what individuals who suffer from hearing loss often experience in situations where multiple sound sources compete for attention. Understanding the origin of these selective-listening difficulties, so that they can be addressed more effectively, is an important goal of current research in psychoacoustics (the scientific study of auditory perception) and auditory neuroscience (the study of the biological basis of hearing). Here, we introduce recent findings from our laboratory that shed some light on this issue. These findings indicate that listeners' ability to extract information from concurrent sounds can be predicted, to a large extent, by the frequency resolution of the auditory sensory organ: the cochlea.

To understand these findings, it is useful to think of the cochlea as a spectrum analyzer: it breaks down complex sounds (such as musical notes or vowels) into their elementary frequency components, mapping each frequency to a different place along a vibrating membrane, the basilar membrane. Two types of cells sit on this membrane, and both play a crucial role in hearing. The inner hair cells transform mechanical vibrations of the basilar membrane into electrochemical signals, which are transmitted to the brain via the auditory nerve. The outer hair cells selectively amplify the vibrations of the basilar membrane, thereby boosting soft sounds while also sharpening the ear's frequency tuning. Damage to the outer hair cells (which can result from exposure to loud sounds, ageing, viruses, and other causes) usually leads both to reduced auditory sensitivity (soft sounds are no longer detected) and to reduced frequency selectivity (schematically, each place on the basilar membrane now responds to a broader range of frequencies).
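
The width of the frequency range each place responds to can be made concrete with the equivalent rectangular bandwidth (ERB) formula from Glasberg and Moore (1990), the model reference cited in this article: ERB = 24.7(4.37F + 1), with F in kHz. The short Python sketch below evaluates it at a few frequencies. The `broadening` factor is our own hypothetical device for mimicking reduced frequency selectivity (a value of 2 corresponds to the "reduced by a factor of 2" case discussed for Figure 1); it is not part of the published formula.

```python
def erb_hz(f_hz: float, broadening: float = 1.0) -> float:
    """Equivalent rectangular bandwidth (Hz) of the auditory filter centred at f_hz.

    Glasberg and Moore (1990): ERB = 24.7 * (4.37 * F + 1), with F in kHz.
    `broadening` is a hypothetical multiplier (not part of the published formula)
    used to mimic reduced frequency selectivity, e.g. 2.0 = filters twice as wide.
    """
    return broadening * 24.7 * (4.37 * f_hz / 1000.0 + 1.0)


# Compare normal and doubled bandwidths at a few centre frequencies.
for f in (400, 1000, 2000, 4000):
    print(f"{f:5d} Hz: normal ERB = {erb_hz(f):6.1f} Hz, "
          f"broadened x2 = {erb_hz(f, 2.0):6.1f} Hz")
```

As a rough rule of thumb, components spaced 400 Hz apart can only produce separate peaks where the filter bandwidth is smaller than that spacing: at 2000 Hz the normal ERB is about 241 Hz, whereas the doubled one is about 481 Hz.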

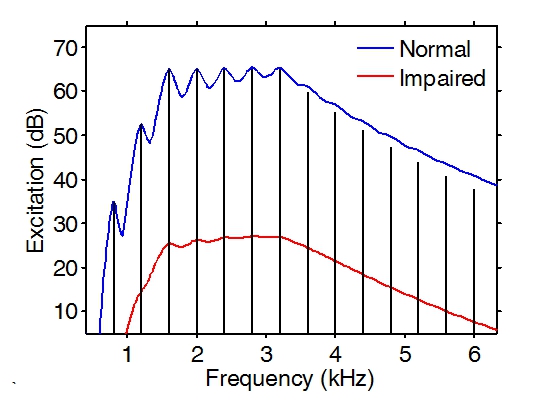

Figure 1 provides some insight into the effect of reduced cochlear frequency selectivity on the internal representation of sounds in the auditory system. In this example, the stimulus is a complex tone, that is, a tone that contains multiple frequency components, such as a musical note. The frequency components of the tone are shown as solid black lines, one line per component. The blue and red curves show simulated patterns of auditory excitation in response to the tone. These patterns were computed using a mathematical model of the ear (Glasberg and Moore, 1990). They indicate how the spectral components of the complex tone (solid black lines) are represented inside the auditory system of a normal-hearing listener (blue curve) and of a listener with a 40-dB hearing loss and frequency selectivity reduced by a factor of 2 (red curve). Note how the spectral components of the tone appear as salient peaks in the normal excitation pattern but are lost in the impaired one, owing to insufficient frequency resolution.

Figure 1. Patterns of excitation produced by a harmonic complex tone in a normal auditory system (blue curve), and in an auditory system with reduced frequency selectivity (red curve).
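
The idea behind Figure 1 can be sketched in a few lines of Python with NumPy. This is not the model used to draw the figure, only a simplified stand-in under stated assumptions: symmetric roex(p) filters, W(g) = (1 + pg)exp(-pg), where g is the distance from the filter's centre frequency divided by that centre frequency and p = 4fc/ERB, combined with the Glasberg and Moore (1990) ERB formula. The stimulus (the first ten harmonics of a 400-Hz F0, all at equal level), the frequency grid, and the 2000/2200-Hz comparison points are our own choices. The 40-dB loss of audibility is not modelled; only the factor-of-2 filter broadening is.

```python
import numpy as np


def erb_hz(f_hz, broadening=1.0):
    """Glasberg and Moore (1990) ERB in Hz (F in kHz inside the formula).

    `broadening` is a hypothetical multiplier mimicking reduced frequency selectivity.
    """
    return broadening * 24.7 * (4.37 * np.asarray(f_hz, dtype=float) / 1000.0 + 1.0)


def excitation_pattern_db(component_freqs, centre_freqs, broadening=1.0):
    """Simplified excitation pattern (dB re an arbitrary reference).

    Each filter is a symmetric roex(p): W(g) = (1 + p*g) * exp(-p*g), with
    g = |f - fc| / fc and p = 4 * fc / ERB(fc). All components have unit
    intensity; level dependence and audibility are ignored.
    """
    fc = np.asarray(centre_freqs, dtype=float)[:, None]    # (n_filters, 1)
    f = np.asarray(component_freqs, dtype=float)[None, :]  # (1, n_components)
    p = 4.0 * fc / erb_hz(fc, broadening)
    g = np.abs(f - fc) / fc
    weights = (1.0 + p * g) * np.exp(-p * g)                # filter weight per component
    return 10.0 * np.log10(weights.sum(axis=1))             # summed intensity, in dB


def peak_to_valley_db(pattern, centres, f_peak, f_valley):
    """Excitation at f_peak minus excitation at f_valley (nearest grid points)."""
    i_peak = np.argmin(np.abs(centres - f_peak))
    i_valley = np.argmin(np.abs(centres - f_valley))
    return pattern[i_peak] - pattern[i_valley]


f0 = 400.0
harmonics = f0 * np.arange(1, 11)            # 400, 800, ..., 4000 Hz (equal levels)
centres = np.linspace(200.0, 4200.0, 801)    # filter centre frequencies, 5-Hz steps

normal = excitation_pattern_db(harmonics, centres, broadening=1.0)
impaired = excitation_pattern_db(harmonics, centres, broadening=2.0)

# Compare the harmonic at 2000 Hz with the midpoint to the next harmonic (2200 Hz).
print(f"normal:   {peak_to_valley_db(normal, centres, 2000.0, 2200.0):.1f} dB")
print(f"impaired: {peak_to_valley_db(impaired, centres, 2000.0, 2200.0):.1f} dB")
```

Under these assumptions, the normal pattern dips by several decibels between the 2000-Hz and 2400-Hz harmonics, whereas the broadened pattern varies by well under 1 dB there, which is the loss of salient peaks that the red curve illustrates.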

If you look at the figure carefully, you will notice that the frequency components of the tone (solid black lines) are regularly spaced, at integer multiples of 400 Hz. This reveals that the complex tone is harmonic, with a fundamental frequency (F0) of 400 Hz. Harmonic complex tones are a very important class of sounds, encompassing most musical sounds and many biological communication signals, including vowels. They usually have a distinct pitch, which is determined by the F0: the higher the F0, the higher the pitch. Pitch is a very important auditory attribute. It is used to convey melody in music and prosody in speech. In addition, pitch can be used to distinguish sounds, such as a male voice and a female voice, because male voices usually have a lower pitch than female voices. Thus, voices and other sounds can be tracked selectively over time based on their pitch.
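
The link between evenly spaced components and F0 can be shown with a toy calculation: when the component frequencies are exact integers, the F0 is their greatest common divisor, that is, the largest frequency of which every component is an integer multiple. This Python sketch assumes idealized, exact frequencies; it is not a model of how listeners perceive pitch, and real, measured frequencies would need a tolerance-based estimator.

```python
from functools import reduce
from math import gcd


def fundamental_hz(component_freqs_hz):
    """Return the F0 (Hz) of a harmonic complex given exact integer component frequencies.

    The F0 is the largest frequency of which every component is an integer
    multiple, i.e. the greatest common divisor of the component frequencies.
    Idealized sketch: real, measured frequencies would need a tolerance.
    """
    return reduce(gcd, component_freqs_hz)


tone = [400 * n for n in range(1, 11)]    # harmonics 1-10 of a 400-Hz F0
print(fundamental_hz(tone))                # 400
print(fundamental_hz(tone[2:]))            # 400: lowest two harmonics removed, same F0
```

The second call shows that the F0 is fixed by the common spacing of the harmonics, even when no component is present at the F0 itself.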

To determine whether the presence of salient peaks in auditory excitation patterns is a good predictor of listeners' ability to accurately perceive the pitches of concurrent harmonic complex tones, we measured pitch-discrimination thresholds for a harmonic complex tone (the target) presented simultaneously with another harmonic complex tone (the masker) under a wide variety of stimulus conditions. A pitch-discrimination threshold is the smallest F0 difference that a listener can reliably detect, so lower thresholds indicate better performance. We then computed auditory excitation patterns for the mixture of target and masker and looked for covariations between the magnitude of the peaks in these patterns and the thresholds obtained by the listeners. We found that, in all conditions in which the excitation patterns evoked by the mixture of target and masker did not contain salient peaks, the listeners' thresholds were high, indicating that they were unable to accurately hear out the pitch of the target. Conversely, in all conditions in which the excitation patterns evoked by the mixture did contain salient peaks, the listeners achieved low discrimination thresholds. Thus, the presence of salient peaks in simulated auditory excitation patterns appears to be a good predictor of listeners' ability to accurately hear out the pitches of concurrent sounds.
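
The analysis step, computing an excitation pattern for the target-plus-masker mixture and quantifying how salient its peaks are, can be sketched by extending the same simplified model. Every specific below is a hypothetical choice for illustration, not the study's procedure: symmetric roex(p) filters with the Glasberg and Moore (1990) ERB, equal-level components up to 4000 Hz, a 400-Hz target with a 448-Hz masker, and a crude "peak salience" defined as the excitation at one target harmonic minus the excitation midway between it and a neighbouring masker component.

```python
import numpy as np


def erb_hz(f_hz, broadening=1.0):
    # Glasberg and Moore (1990) ERB; `broadening` (hypothetical) widens every filter.
    return broadening * 24.7 * (4.37 * np.asarray(f_hz, dtype=float) / 1000.0 + 1.0)


def harmonics(f0, fmax=4000.0):
    # All integer multiples of f0 up to fmax (equal-level components assumed).
    return f0 * np.arange(1, int(fmax // f0) + 1)


def excitation_db(component_freqs, centre_freqs, broadening=1.0):
    # Simplified excitation pattern: summed roex(p) filter weights, in dB.
    fc = np.asarray(centre_freqs, dtype=float)[:, None]
    f = np.asarray(component_freqs, dtype=float)[None, :]
    p = 4.0 * fc / erb_hz(fc, broadening)
    g = np.abs(f - fc) / fc
    return 10.0 * np.log10(((1.0 + p * g) * np.exp(-p * g)).sum(axis=1))


def peak_salience_db(pattern, centres, f_target, f_neighbour):
    # Hypothetical metric: excitation at a target harmonic minus excitation at the
    # midpoint between that harmonic and a neighbouring masker component.
    i_peak = np.argmin(np.abs(centres - f_target))
    i_valley = np.argmin(np.abs(centres - 0.5 * (f_target + f_neighbour)))
    return pattern[i_peak] - pattern[i_valley]


target = harmonics(400.0)     # hypothetical target F0: 400 Hz
masker = harmonics(448.0)     # hypothetical masker F0: 448 Hz (12% higher)
mixture = np.concatenate([target, masker])
centres = np.linspace(200.0, 4200.0, 801)

for label, broadening in (("normal", 1.0), ("broadened x2", 2.0)):
    pattern = excitation_db(mixture, centres, broadening)
    salience = peak_salience_db(pattern, centres, 2000.0, 2240.0)
    print(f"{label:>13}: salience of 2000-Hz target harmonic = {salience:+.2f} dB")
```

With these assumptions, the 2000-Hz target harmonic stands out by about 1 dB in the normal pattern, whereas with doubled filter widths the excitation at 2000 Hz is slightly lower than at the midpoint, so no peak remains. Repeating such a calculation across stimulus conditions and comparing the results with measured thresholds is the kind of covariation analysis described above; the measure actually used in the study may differ.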

These findings lend support to the hypothesis that the fine-grained frequency analysis performed by the cochlea largely determines how successful listeners are at perceptually analyzing concurrent sounds, and that the loss of this fine-grained frequency analysis following cochlear damage can go a long way toward explaining why hearing-impaired individuals often have considerable listening difficulties in settings where multiple sound sources are simultaneously active, such as cocktail parties.

[Work supported by NIH grant R01 DC05216.]

Glasberg, B. R., and Moore, B. C. J. (1990). "Derivation of auditory filter shapes from notched-noise data," Hearing Res. 47, 103-138.

Micheyl, C., Keebler, M. V., and Oxenham, A. J. (2010). "Pitch perception for mixtures of spectrally overlapping harmonic complex tones," J. Acoust. Soc. Am. (in press).

Micheyl, C., and Oxenham, A. J. (2010). "Pitch, harmonicity, and concurrent sound segregation: Psychoacoustical and neurophysiological findings," Hearing Res. (in press).