John G. Neuhoff

Department of Psychology

The College of Wooster

Wooster, OH 44691

Cell: 440-670-1401

jneuhoff@wooster.edu

Pascale Lidji

McGill University

Montreal PQ H3A 1B1, Canada

Popular version of paper 5pSC5

Friday Afternoon May 27, 2011

161st ASA Meeting Seattle WA

Music and speech have long been thought to have common cognitive underpinnings, and recent work demonstrates that the music of expert composers reflects the speech rhythm of their native language [H. Ollen (2003), P. Daniele (2003)]. In the current study, monolingual English-speaking music novices composed simple “English” and “French” tunes on a piano keyboard. The rhythms produced reflected speech rhythms perceived in English and French, respectively. Yet the pattern was opposite to that produced by expert English and French composers, and opposite to that predicted by the acoustic determinants of speech rhythm, which specify English speech as more rhythmically varied than French. Surprise recognition tests two weeks later confirmed that the music-speech relationship persisted over time. Participants also rated the rhythmic variability of French and English speech samples. We found that native English speakers perceived French as more variable than English, despite the measured greater variability of English. Finally, we repeated these procedures with a sample of monolingual French speakers and found similar but opposite effects. The results suggest that common cognitive underpinnings of music and speech rhythm are more widespread than previously thought, and that novice rhythm production in music is concordant with perceived speech rhythms.

Forty-eight music novices participated in the study. Half of the participants were native English speakers and the other half were native French speakers. We asked them to use only two keys on a keyboard to create and record simple “two-note tunes”. Participants could alternate between notes as often as they liked and in any rhythm that they desired. Each participant created two different tunes: one was to be an “English tune” and the other a “French tune”. Next, all of the participants listened to samples of French and English speech and, after instruction, rated the perceived variability in rhythmic syllable stress on a scale of 1 to 100. Finally, two weeks after the initial session, participants were given a surprise “tune recognition task”. They were asked to identify the “language” of the two songs that they had composed, and also to pick the songs that they themselves had produced from among foil songs produced by other participants in the study.

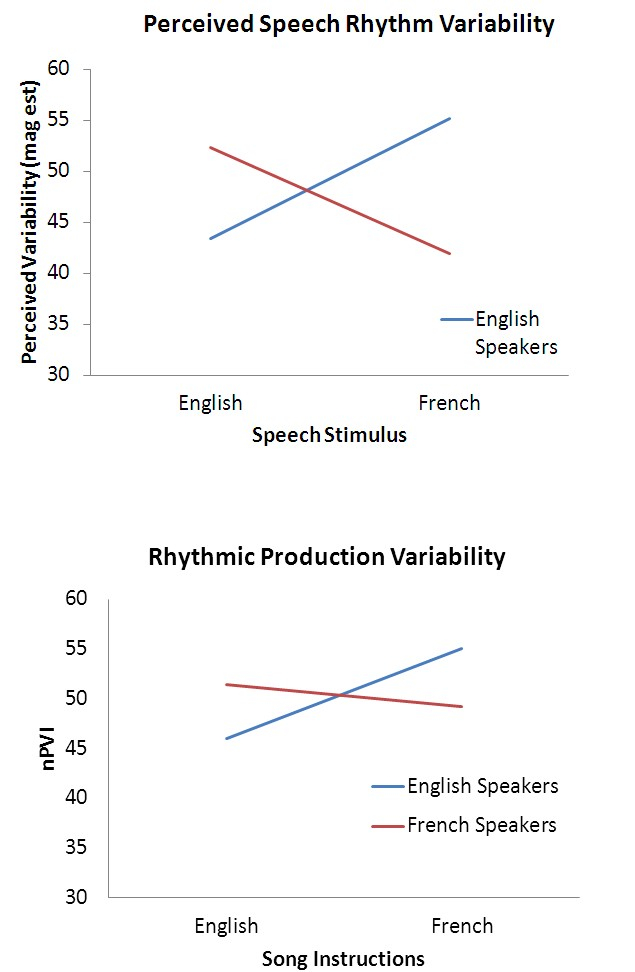

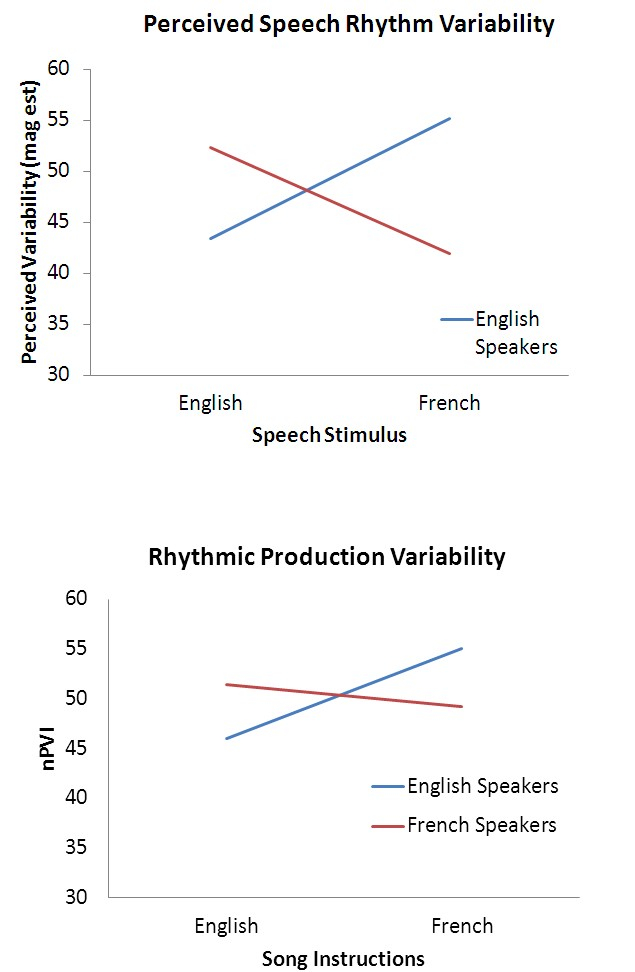

We found a significant difference between French and English speakers in both the perception of speech rhythm and the production of musical rhythm. English speakers perceived English as less rhythmically variable than French. French speakers showed the opposite pattern. In other words, a speaker’s native language sounds more rhythmically stable than the foreign one. The same pattern appeared when the music novices were asked to create English and French tunes. English speakers produced English tunes that were more rhythmically stable than the French tunes that they produced. French speakers did not show this pattern. See Figure 1.
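For readers curious how the "rhythmic variability" of a tune might be put into numbers, here is a minimal sketch. This summary does not say which measure was used in the study, so the choice here is an assumption: the sketch uses the normalized Pairwise Variability Index (nPVI), a measure commonly applied to both speech and music rhythm. The function name `npvi` and the example note durations are hypothetical and are not data from the study.

```python
def npvi(durations):
    """Normalized Pairwise Variability Index of a sequence of durations.

    Assumption: this is one common index of rhythmic variability, not
    necessarily the measure used in the study described above.
    Each adjacent pair contributes |a - b| divided by the pair's mean;
    the average over pairs is scaled by 100.
    """
    if len(durations) < 2:
        raise ValueError("need at least two durations")
    total = 0.0
    for a, b in zip(durations, durations[1:]):
        total += abs(a - b) / ((a + b) / 2)
    return 100 * total / (len(durations) - 1)


# Hypothetical note durations in seconds (illustrative only).
even_tune = [0.5, 0.5, 0.5, 0.5]        # every note the same length
uneven_tune = [0.25, 0.75, 0.25, 0.75]  # alternating short and long notes

print(npvi(even_tune))    # perfectly even rhythm gives the minimum, 0
print(npvi(uneven_tune))  # alternating rhythm gives a much higher value
```

Under this index, a tune with a lower value would count as more rhythmically stable, and a tune with a higher value as more rhythmically variable.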

There is growing evidence of interplay between music and speech that may implicate rhythm processing. Music has been shown to be an effective aid in language acquisition (Schön et al., 2008), and language learning has conversely been shown to enhance statistical learning of musical stimuli (Marcus, Fernandes, & Johnson, 2007). Evidence from stroke victims suggests that the processing of prosody in music and speech may share a common neural substrate (Nicholson et al., 2003; Patel, Peretz, Tramo, & Labrecque, 1998). The parallel between the perception of speech rhythms and the production of musical rhythms demonstrated here suggests that rhythm processing in music and speech may share common cognitive processes. Future investigations in this area will begin to provide a foundation for our knowledge of these processes and will provide important insights into the similarities between music and speech.