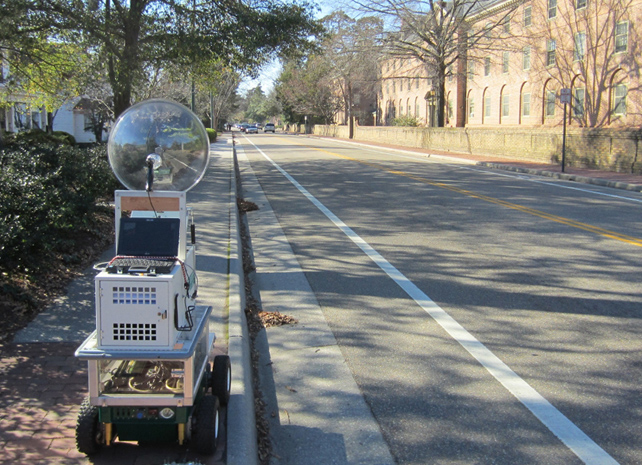

Figure 1. The large parabolic dish microphone atop rMary has been replaced by the Kinect microphone array to collect data from oncoming vehicles.

Eric A. Dieckman and Mark Hinders -- hinders@as.wm.edu

Department of Applied Science

The College of William and Mary

Williamsburg, VA 23187

Popular version of paper 2pSPa5

Presented Tuesday afternoon October 23, 2012

164th ASA Meeting, Kansas City, Missouri

To perform useful tasks for us, robots must be able to notice, recognize, and respond to objects and events in their environment. For walking-speed robots, short-range sensors provide awareness of their immediate surroundings, while long-range sensors allow them to avoid fast-moving threats such as oncoming traffic. No single sensor works well in all circumstances; for example, a picture taken outside on a clear day is much more useful than one taken on a foggy night. Combining data from active and passive sensors provides the most robust situational awareness, but it also requires sophisticated signal and image processing to deal with large amounts of information. Our previous work used sonar backscatter and thermal imaging to identify and differentiate fixed landmarks such as trees, light poles, fences, walls, and hedges. In our current work, we are testing the combination of thermal infrared (IR) and nonlinear acoustic echolocation sensors to detect and classify oncoming vehicular traffic.


One of our robotic platforms, rMary (Figure 1), is driven to different locations to collect data from both moving and stationary vehicles, which we categorize as car, SUV, van, truck, bus, or motorcycle. rMary sends a pulse of sound toward the target vehicle; the pulse reflects off the vehicle and is picked up by the microphone array in a Microsoft Kinect unit mounted on rMary. This process is similar to how bats echolocate, and it provides both the distance between the source and the target and information about the target itself. We use a nonlinear acoustic parametric array to generate the sound chirp because it allows us to create a narrow beam of sound.
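The ranging part of echolocation comes down to timing the echo. As a minimal sketch (not the processing chain used on rMary), the snippet below builds a synthetic linear chirp, places a delayed and attenuated copy of it in a recording, finds the delay by cross-correlation, and converts the round-trip time to a one-way distance using d = c·t/2. The sample rate, chirp band, speed of sound, and all function names are assumptions chosen for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C (assumed value)

def linear_chirp(n_samples, fs, f0, f1):
    """Linear frequency sweep from f0 to f1 Hz lasting n_samples samples."""
    duration = n_samples / fs
    rate = (f1 - f0) / duration  # sweep rate in Hz per second
    return [math.sin(2 * math.pi * (f0 * t + 0.5 * rate * t * t))
            for t in (i / fs for i in range(n_samples))]

def echo_delay_samples(recording, pulse):
    """Lag (in samples) at which the pulse best matches the recording."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(recording) - len(pulse) + 1):
        score = sum(p * recording[lag + i] for i, p in enumerate(pulse))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def range_from_delay(delay_samples, fs, c=SPEED_OF_SOUND):
    """One-way distance; the sound travels out and back, hence the factor 2."""
    return c * (delay_samples / fs) / 2.0

# Synthetic test case: an echo arriving 700 samples after the pulse is sent.
fs = 8000                                  # samples per second (assumed)
pulse = linear_chirp(80, fs, 500.0, 3000.0)
true_delay = 700
recording = [0.0] * 1000
for i, p in enumerate(pulse):
    recording[true_delay + i] += 0.3 * p   # attenuated copy of the pulse

delay = echo_delay_samples(recording, pulse)
distance = range_from_delay(delay, fs)
print(delay, round(distance, 2))
```

A chirp is a convenient pulse for this kind of matched filtering because its autocorrelation has a single sharp peak, which makes the arrival time easy to pick out. Real recordings would also contain noise and multiple reflections, which this sketch ignores.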

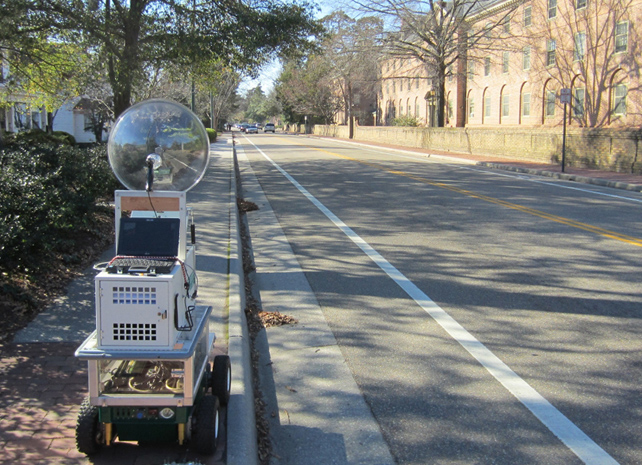

We have collected more than 6,000 of these reflected signals from over 40 sorties in a wide range of environmental conditions. Wavelet fingerprint signal processing techniques allow us to identify subtle differences between the reflected signals of different classes of vehicles (Figure 2). Pattern classification allows us to train a computer to recognize these differences, so that we can automatically categorize an unknown vehicle from the reflected acoustic signal.

Figure 2. A one-second-long acoustic signal reflected from a bus (top) is filtered (middle) and transformed into a time-scale image that resembles a set of individual fingerprints (bottom).
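To give a feel for how a one-dimensional signal can become a fingerprint-like time-scale image, here is a simplified sketch. It assumes a Ricker ("Mexican hat") wavelet, a hand-rolled continuous wavelet transform, and a slicing rule that marks a pixel wherever the normalized coefficient magnitude lies close to one of a few evenly spaced levels. The wavelet choice, the number of slices, the slice thickness, and the function names are all illustrative assumptions and may differ from the processing actually used for Figure 2.

```python
import math

def ricker(length, width):
    """Ricker ("Mexican hat") wavelet sampled at `length` points."""
    amp = 2.0 / (math.sqrt(3.0 * width) * math.pi ** 0.25)
    mid = (length - 1) / 2.0
    out = []
    for i in range(length):
        x = (i - mid) / width
        out.append(amp * (1.0 - x * x) * math.exp(-0.5 * x * x))
    return out

def cwt(signal, widths):
    """Continuous wavelet transform: one row of coefficients per scale."""
    n = len(signal)
    rows = []
    for w in widths:
        length = min(10 * w + 1, n)   # kernel support grows with scale
        kernel = ricker(length, w)
        half = length // 2
        row = []
        for t in range(n):
            acc = 0.0
            for k, kv in enumerate(kernel):
                j = t + k - half      # kernel centered on sample t
                if 0 <= j < n:
                    acc += kv * signal[j]
            row.append(acc)
        rows.append(row)
    return rows

def fingerprint(coeffs, n_slices=5, thickness=0.04):
    """Binary image: 1 where normalized |coefficient| is near a slice level."""
    peak = max(abs(c) for row in coeffs for c in row) or 1.0
    levels = [(k + 1) / (n_slices + 1) for k in range(n_slices)]
    return [
        [1 if any(abs(abs(c) / peak - lv) <= thickness for lv in levels) else 0
         for c in row]
        for row in coeffs
    ]

# Synthetic stand-in for a reflected signal: a tone burst with a Gaussian envelope.
signal = [math.exp(-((i - 100) / 25.0) ** 2) * math.sin(2 * math.pi * i / 16.0)
          for i in range(200)]
coeffs = cwt(signal, widths=range(1, 13))
image = fingerprint(coeffs)
for row in image:
    print("".join("#" if px else "." for px in row))
```

Each printed row corresponds to one wavelet scale and each column to one time sample, so the ridged pattern is a coarse text rendering of a time-scale image. Features measured from such binary images (for example, the position and extent of the ridges) are the kind of input a pattern classifier could be trained on.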

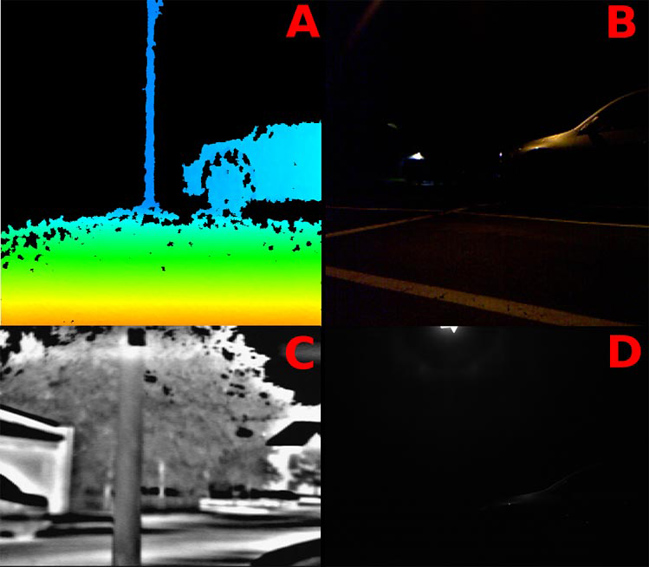

We also collect thermal infrared images with a long-wave infrared camera that measures the heat radiated from a surface. This imagery is fused with the nonlinear acoustic echolocation information as well as the color video and infrared image streams from the Microsoft Kinect. Released in 2010 as an accessory for the Xbox 360 console, the extremely popular Kinect integrates active infrared and color imagers to output 640x480 RGB-D (red, green, blue + depth) video. Because it is optimized for indoor use, the Kinect works less well outside during the day, when sunlight often saturates the IR sensor. However, the Kinect depth mapping (Figure 3, A) works well in nighttime outdoor environments, detecting a light pole that is not visible in the illuminated RGB image (Figure 3, B). The image from the thermal camera (Figure 3, C) also shows the tree and buildings in the background, but it has a smaller field of view and lower resolution than the raw image from the Kinect IR sensor (Figure 3, D).

Figure 3. In nighttime outdoor environments, the Kinect depth mapping (A) detects objects not visible in the illuminated RGB image (B), and the thermal camera (C) provides more information than the Kinect IR sensor (D) (images resized from original resolutions).