Martin Lenhardt - lenhardt@vcu.edu

Virginia Commonwealth University

Richmond VA 23298-0168

Popular version of paper 3aMU7

Presented Wednesday Morning, October 19, 2005

150th ASA Meeting/NOISE-CON 2005, Minneapolis, MN

Imagine sitting in Carnegie Hall while a sheer, illuminated curtain is drawn so that you cannot see the stage. Your task is to choose which source of music, emanating from either stage right or stage left, is the better of the two. On one side is a live orchestra; on the other, a high-fidelity digital sound system. Often the two seem equal, but depending on the music, there are occasions when the digital system is found lacking in something. Why would this be?

In the 1930s, the frequency range of music needed for faithful reproduction, without any perceived loss of quality, was thought to extend no higher than 14,000 Hz. Today the upper limit of frequency reproduction needed to ensure quality is a little over 20,000 Hz, thanks to digital recordings with a sampling rate of 44,100 Hz. The highest frequency a digital system can reproduce is half its sampling rate, a value termed the Nyquist frequency (22,050 Hz for a 44,100 Hz rate). Higher sampling rates, such as 88.2 or 96 kHz, are also available, but how could such an extended high-frequency response enhance music appreciation when we generally do not hear much above 20,000 Hz?
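The Nyquist relationship, and why frequencies above it cannot simply be kept at a 44,100 Hz rate, can be checked with a few lines of arithmetic. This is a minimal sketch: the 30 kHz test tone is a hypothetical example of an ultrasonic component, and real recorders place an anti-aliasing filter ahead of the converter so that such a tone is removed rather than folded down into the audible band.

```python
import math

def nyquist_hz(fs_hz: float) -> float:
    """Highest frequency a sampled system can represent: half the sampling rate."""
    return fs_hz / 2.0

def folded_frequency_hz(f_hz: float, fs_hz: float) -> float:
    """Apparent (aliased) frequency of a tone at f_hz after sampling at fs_hz,
    assuming no anti-aliasing filter removed it first."""
    return abs(f_hz - fs_hz * round(f_hz / fs_hz))

# Hypothetical 30 kHz ultrasonic component sampled at three common rates.
for fs in (44_100, 88_200, 96_000):
    print(fs, "Hz sampling -> Nyquist", nyquist_hz(fs), "Hz;",
          "a 30 kHz tone appears at", folded_frequency_hz(30_000, fs), "Hz")

# At 44.1 kHz the samples of a 30 kHz tone and a 14.1 kHz tone are identical,
# so the recording cannot tell them apart.
fs = 44_100
same = all(
    math.isclose(math.cos(2 * math.pi * 30_000 * n / fs),
                 math.cos(2 * math.pi * 14_100 * n / fs), abs_tol=1e-9)
    for n in range(200)
)
print("30 kHz and 14.1 kHz samples identical:", same)
```

At 88.2 or 96 kHz the 30 kHz tone lies below the Nyquist frequency and is represented at its true frequency; at 44.1 kHz it would masquerade as a 14.1 kHz tone, which is why it must be filtered out instead.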

Oohashi et al. (Journal of Neurophysiology 83, 3548, 2000) were intrigued by the same question. It has been recognized that many musical sound sources, including trumpets, violins, the human voice and especially cymbals, have ultrasonic components. Could missing such high-frequency components really be that important? An experiment was designed in which listeners judged the quality of a digital recording of music that naturally contained ultrasonic components, compared with the same music with the ultrasound removed. To mitigate purely subjective effects and biases, brain responses were also recorded, specifically the electroencephalogram (EEG) as well as positron emission tomography (PET) images. Ultrasound is, by definition, composed of frequencies beyond the range of hearing; however, when the ultrasonic musical components were present together with the conventional musical frequencies, listeners rated the music as more pleasurable, a judgment corroborated by robust changes in the EEG and brain images. The ultrasonic components of many instruments must somehow be affecting our hearing, but how?
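The "ultrasound removed" condition amounts to low-pass filtering the recording. The sketch below shows the idea with an idealized FFT "brick-wall" filter; the 20 kHz cutoff, the 96 kHz rate and the two test tones are hypothetical choices for illustration, not the filter or material Oohashi et al. actually used.

```python
import numpy as np

def remove_ultrasound(signal, fs, cutoff_hz=20_000.0):
    """Idealized low-pass: zero every FFT bin above cutoff_hz, then invert."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    spectrum[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spectrum, n=len(signal))

fs = 96_000                                        # assumed high-resolution sampling rate
t = np.arange(fs) / fs                             # one second of samples
audible = np.sin(2 * np.pi * 1_000 * t)            # 1 kHz stand-in for conventional content
ultrasonic = 0.1 * np.sin(2 * np.pi * 30_000 * t)  # hypothetical 30 kHz component
full_range = audible + ultrasonic                  # "ultrasound present" condition
band_limited = remove_ultrasound(full_range, fs)   # "ultrasound removed" condition
```

The two conditions differ only in the component above the cutoff. A brick-wall FFT filter is used here only because the test tones fall exactly on FFT bins; real program material would call for a properly designed filter to avoid ringing artifacts.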

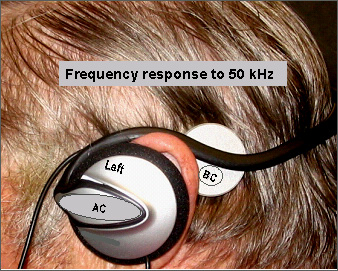

About two decades ago I rediscovered the phenomenon that humans can actually hear ultrasound, as long as it is applied as vibration to the skin of the head or neck. Ultrasound delivered in this fashion was audible to older listeners with age-related hearing loss (presbycusis) and even to some severely deafened individuals. Ultrasound could even act as a carrier to deliver speech information into a severely damaged ear, and an ultrasonic hearing aid was developed and commercialized (HiSonic™). There are two mechanisms of audible ultrasonic perception: one by bone conduction and the other by the much lesser-known fluid conduction path. With bone conduction, the head is coupled to a vibrating object and the vibrations are passed into the cochlea. However, if the vibrating object is placed on the soft tissue of the neck, the vibrations pass via fluids (blood, cerebrospinal fluid, etc.) into the fluid channels of the ear to activate the auditory nerve.
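The idea of ultrasound acting as a carrier can be illustrated with textbook double-sideband amplitude modulation. This is a minimal sketch, assuming a hypothetical 30 kHz carrier and a 1 kHz tone standing in for speech; it is not a description of how the HiSonic device actually encodes speech.

```python
import numpy as np

fs = 192_000            # sampling rate high enough to represent the carrier
t = np.arange(fs) / fs  # one second, so FFT bins fall on whole hertz
f_carrier = 30_000      # hypothetical ultrasonic carrier (Hz)
f_message = 1_000       # 1 kHz tone standing in for speech (Hz)
depth = 0.5             # hypothetical modulation depth

message = np.sin(2 * np.pi * f_message * t)
# Amplitude modulation: the message shapes the envelope of the carrier.
am_signal = (1.0 + depth * message) * np.cos(2 * np.pi * f_carrier * t)

# Locate the three strongest spectral lines of the modulated signal.
spectrum = np.abs(np.fft.rfft(am_signal))
freqs = np.fft.rfftfreq(len(am_signal), d=1.0 / fs)
strongest = np.sort(freqs[np.argsort(spectrum)[-3:]])
print(strongest)
```

All of the energy sits at the carrier and at the two sidebands 1 kHz on either side of it, well above 20 kHz; the 1 kHz message is present only as the envelope of the ultrasonic signal.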

The head is thus a barrier to ultrasound unless a vibrator is coupled directly to it, and even then the ultrasound must be strong enough to set the head into vibration. The head is even more inhospitable to ultrasound arriving through the air. So how does airborne ultrasonic music get to the ear? The eardrum is virtually motionless at ultrasonic frequencies, so the normal route of hearing is unlikely to be the answer. The other possible mechanism is tissue or fluid conduction, and the only possible sites of fluid coupling to the inner ears are the eyes.
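One textbook way to see why sound in air enters tissue so poorly when the middle ear is not doing its job is the normal-incidence intensity transmission coefficient between two media of characteristic impedance Z1 and Z2, T = 4·Z1·Z2 / (Z1 + Z2)². The sketch below is a simplified plane-wave estimate under stated assumptions: soft tissue is approximated by water, and the densities and sound speeds are typical room-temperature values, not measurements from this work.

```python
import math

def intensity_transmission(z1: float, z2: float) -> float:
    """Fraction of incident intensity transmitted across a planar boundary
    at normal incidence, going from impedance z1 into impedance z2 (rayl)."""
    return 4.0 * z1 * z2 / (z1 + z2) ** 2

# Assumed values, density (kg/m^3) * sound speed (m/s); tissue approximated by water.
Z_AIR = 1.204 * 343.0     # air at about 20 C, roughly 413 rayl
Z_WATER = 998.0 * 1482.0  # water at about 20 C, roughly 1.48 Mrayl

t = intensity_transmission(Z_AIR, Z_WATER)
loss_db = 10.0 * math.log10(t)
print(f"transmitted fraction: {t:.2e}, i.e. {loss_db:.1f} dB")
```

Only about a thousandth of the incident intensity (roughly a 30 dB loss) crosses such a boundary. At audio frequencies the eardrum and middle ear largely overcome this mismatch; with the eardrum nearly motionless at ultrasonic frequencies, airborne ultrasound gets no such help.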

The possible role of the eye as an acoustic window into the brain, and from there to the ear, was assessed by delivering a narrow beam of airborne ultrasound to the closed eye and recording the response of the skull and brain with sensors placed on the head. The airborne ultrasound passed through the eyes and set the brain into vibration, as recorded by these sensors. When the beam was aimed at the forehead or the ear canal, no response was recorded. Likewise, when the subject wore goggles, the brain was not set into ultrasonic vibration.

At this point the story seems complete except for one important detail. The ultrasound levels in "live" music are generally not perceptible on their own. In fact, Oohashi et al. (2000) reported that their subjects did not detect the isolated ultrasonic components of music. Only when the full range of frequencies, including the ultrasound, was present did the brain respond vigorously. How could something that is not detectable by itself have such a profound influence on behavior and physiology?

It has been known for some time that people match the pitch of ultrasound to tones in the 8-16 kHz range; that is, ultrasound "sounds" like conventional high-frequency audio. Ultrasonic noise masks only those high audio frequencies that fall in the same range as the pitch match. Somehow ultrasound is converted, or mapped, onto the part of the inner ear that codes high audio frequencies. One explanation, based on acoustic modeling, is that the brain is set into oscillation and that its fundamental oscillation frequency, which varies with the geometry of the head, is about 15 kHz in most people. Thus ultrasound, though not detectable by itself in music, will reinforce frequencies in the 8-16 kHz band, resulting in enhanced treble perception, which is presumably the basis of the sense of pleasantness.
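The modeling explanation relies on a general property of resonant systems: after a brief disturbance ends, a resonator rings at its own natural frequency, not at the frequency that excited it. The toy single-resonance sketch below illustrates only that property. The 15 kHz natural frequency comes from the text; the damping ratio, the 40 kHz burst and all durations are hypothetical, and this is not the author's acoustic model of the head.

```python
import math

def ringing_frequency(f0=15_000.0, zeta=0.05, f_drive=40_000.0,
                      burst_s=0.2e-3, total_s=1.2e-3, fs=2_000_000.0):
    """Toy model with hypothetical parameters: drive a damped oscillator of
    natural frequency f0 with a short ultrasonic tone burst at f_drive, then
    estimate the frequency of the free ringing from its zero crossings."""
    w0 = 2 * math.pi * f0
    wd = 2 * math.pi * f_drive
    dt = 1.0 / fs
    x, v = 0.0, 0.0
    prev = x
    crossings = []
    for n in range(int(total_s * fs)):
        t = n * dt
        force = w0 ** 2 * math.sin(wd * t) if t < burst_s else 0.0
        # Semi-implicit Euler step of x'' + 2*zeta*w0*x' + w0^2*x = force.
        v += (force - 2 * zeta * w0 * v - w0 ** 2 * x) * dt
        x += v * dt
        # After the burst, record every sign change of the displacement.
        if t > burst_s and (prev < 0.0) != (x < 0.0):
            crossings.append(t)
        prev = x
    cycles = (len(crossings) - 1) / 2  # two zero crossings per cycle
    return cycles / (crossings[-1] - crossings[0])

print(f"{ringing_frequency():.0f} Hz")
```

With the defaults the estimate comes out just under 15 kHz (damping lowers the ringing slightly, to about f0·√(1 − ζ²)), even though the drive was a 40 kHz tone burst. A steady, continuous ultrasonic tone would instead settle into a forced response at the drive frequency, so in this toy model it is the onsets and offsets of the ultrasound that excite the ringing.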

To obtain the "ultrasonic experience" without "live" music, the ultrasonic musical components must be recorded with wide-range microphones, sampled at a high rate (96 kHz or more), and played back through a system with extended high-frequency response. The brain is thus involved in two ways: passively, as a mechanical sound-delivery system to the ears, and actively, as the more familiar cognitive listening organ.
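Choosing a sampling rate for such a recording comes down to the Nyquist criterion plus some headroom for the anti-aliasing filter. This is a minimal sketch, assuming a hypothetical 10% margin and the common professional rates listed in the code; the appropriate margin depends on the actual converter's filter.

```python
# Common audio sampling rates (Hz).
STANDARD_RATES_HZ = (44_100, 48_000, 88_200, 96_000, 176_400, 192_000)

def minimum_standard_rate(highest_component_hz, margin_pct=10):
    """Return the lowest listed sampling rate whose Nyquist frequency (fs / 2)
    exceeds the highest component to keep by at least margin_pct percent,
    or None if no listed rate is high enough. margin_pct is a hypothetical
    allowance for the anti-aliasing filter's transition band."""
    for fs in STANDARD_RATES_HZ:
        # Integer form of: fs / 2 >= highest_component_hz * (1 + margin_pct / 100)
        if fs * 100 >= 2 * highest_component_hz * (100 + margin_pct):
            return fs
    return None

for f in (20_000, 40_000, 50_000, 100_000):
    print(f, "Hz ->", minimum_standard_rate(f))
```

Integer arithmetic avoids floating-point edge cases at the boundary. Under these assumptions a 20 kHz limit fits within 44.1 kHz sampling, while keeping ultrasonic content up to about 40 kHz already requires the 88.2/96 kHz family of rates.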