As with other physical systems and structures, biological behavior is organized or patterned in space and time. These patterns form the basis for the coordination that occurs ubiquitously during behavioral interaction. In animals, such coordination may be intentional or not: two people walking side-by-side rapidly fall into step, exhibiting unintentional coordination or entrainment. Marching bands and soldiers on parade elegantly demonstrate intentional coordination or synchronization of the same basic walking behavior. Characterizing these two levels of coordination and their interrelation may be fundamental to understanding the development and interactive use of skilled human behaviors such as language and music.

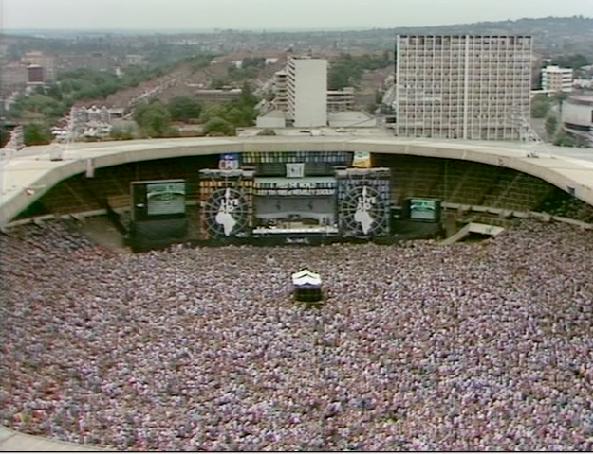

In this study, we assess the coordination of behavioral interactions using an algorithm that quantifies the spatial and temporal correspondence between measured behaviors as they vary through time [1] (Barbosa, Yehia, and Vatikiotis-Bateson 2008). The corresponding events of interest may be concurrent or sequential. Choral singing and the simultaneous use of speech and physical gestures exemplify concurrent coordination, while linguistic discourse, on a good day, exemplifies sequential coordination. This study examines both types of coordination in a musical context by computing the time-varying coordination between a musical performer and a large audience. Figure 1 shows the venue for the Queen Live Aid concert held at Wembley Stadium (UK) in 1985. Queen's lead singer, Freddie Mercury, was masterful in his use of physical gestures and verbal commands to shape and adapt the participation of large audiences (72,000 people in this case) within the span of a single song (see video clip "Queen Intro").

WATCH VIDEO: Queen Intro (Quicktime)

Figure 1. View of audience and stage at Queen Live Aid 1985, Wembley, UK. Note the relatively small monitor screens to either side of the stage area.

Coordination of performer and audience

At a simple level of interpretation, coordination through time may be inferred from the correlation of two signals representing time-varying behavior. Two songs were analyzed. In the first song (see video clip Gaga), the audience participates simultaneously with the performer, primarily through visible synchronization of arm gestures. In the second song (see video clip Day-o), where the performer sings a phrase and the audience repeats it, we apply the same algorithm to examine coordination acoustically. In the interest of space, only the coordination between the visible motions of the singer and the audience, exhibited in the first song, is discussed in this overview.

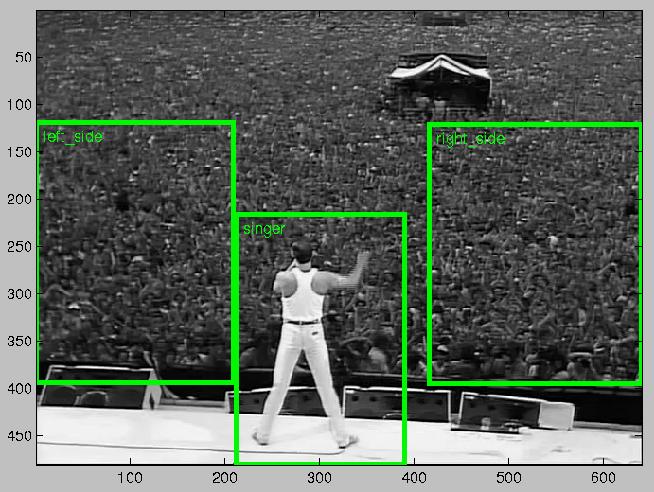

Visible 2-D motions of the singer and audience are recovered from the video image sequence using optical flow [2]. Optical flow estimates the amplitude and direction of motion at each pixel from the brightness changes between successive images. The amplitudes for all pixels within specific regions of interest (see Fig. 2) are summed at each time step in the image sequence, and the resulting time-varying sum for each region is then compared with the sums for other regions.
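The region-wise motion measure described above can be sketched roughly as follows. This is a minimal NumPy illustration, not the code used in the study: the function names `horn_schunck` and `region_motion`, the smoothness weight `alpha`, the iteration count, the four-neighbor averaging, and the `(row_start, row_stop, col_start, col_stop)` region format are all assumptions made for the example. It follows the general iterative scheme of the method cited as [2], in a simplified form.

```python
import numpy as np


def _local_mean(f):
    """Average of the four nearest neighbors, with edge replication."""
    p = np.pad(f, 1, mode="edge")
    return (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]) / 4.0


def horn_schunck(im1, im2, alpha=1.0, n_iter=50):
    """Estimate per-pixel flow (u, v) between two grayscale frames.

    Simplified Horn-Schunck style iteration; alpha and n_iter are
    illustrative defaults, not values taken from the study.
    """
    im1 = np.asarray(im1, dtype=float)
    im2 = np.asarray(im2, dtype=float)
    # Spatial gradients of the averaged frame, temporal gradient as difference.
    Iy, Ix = np.gradient((im1 + im2) / 2.0)
    It = im2 - im1
    u = np.zeros_like(im1)
    v = np.zeros_like(im1)
    for _ in range(n_iter):
        u_bar = _local_mean(u)
        v_bar = _local_mean(v)
        t = (Ix * u_bar + Iy * v_bar + It) / (alpha ** 2 + Ix ** 2 + Iy ** 2)
        u = u_bar - Ix * t
        v = v_bar - Iy * t
    return u, v


def region_motion(frames, roi):
    """Sum of flow amplitudes inside one region for each frame pair.

    roi is (row_start, row_stop, col_start, col_stop), a hypothetical format.
    Returns an array with one value per pair of successive frames.
    """
    r0, r1, c0, c1 = roi
    sums = []
    for a, b in zip(frames[:-1], frames[1:]):
        u, v = horn_schunck(a, b)
        amplitude = np.hypot(u, v)  # per-pixel motion amplitude
        sums.append(amplitude[r0:r1, c0:c1].sum())
    return np.array(sums)
```

Calling `region_motion` once per analysis window (for example the singer, the left audience, and the right audience) would give one time series per region, and those series are what the coordination analysis compares.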

Figure 2. Analysis windows used to calculate singer-audience coordination of arm motion. The audience is divided into left and right sides so that its internal coordination can be assessed.

In particular, we want to know not only how well-synchronized the audience is with the singer, but also the extent to which it is synchronized with itself. As with schooling fish and flocking birds, we expect the audience to be better synchronized with itself for the simple reason that individuals comprising the audience are in closer contact (physically and visually, if not aurally) with other audience members than with the singer, even if he is leading them. Comparison of Figures 3-4 lends support to our expectation.


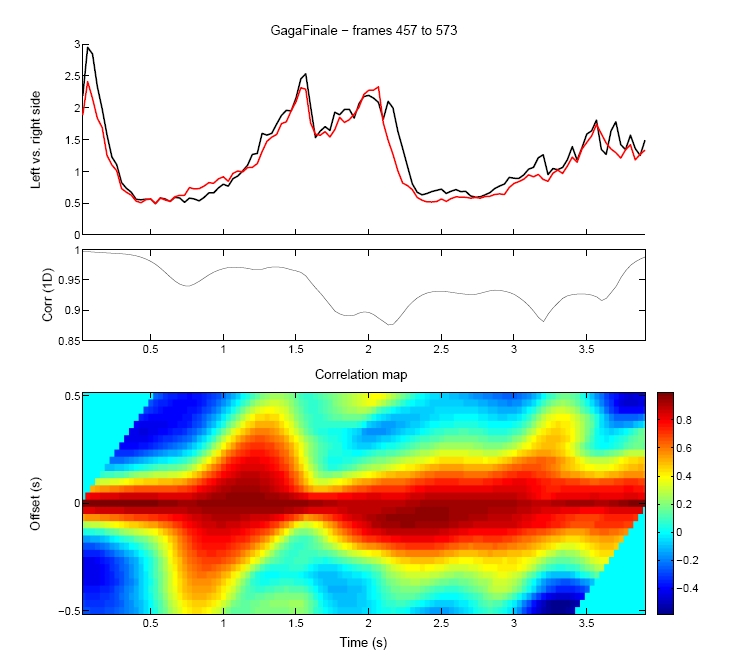

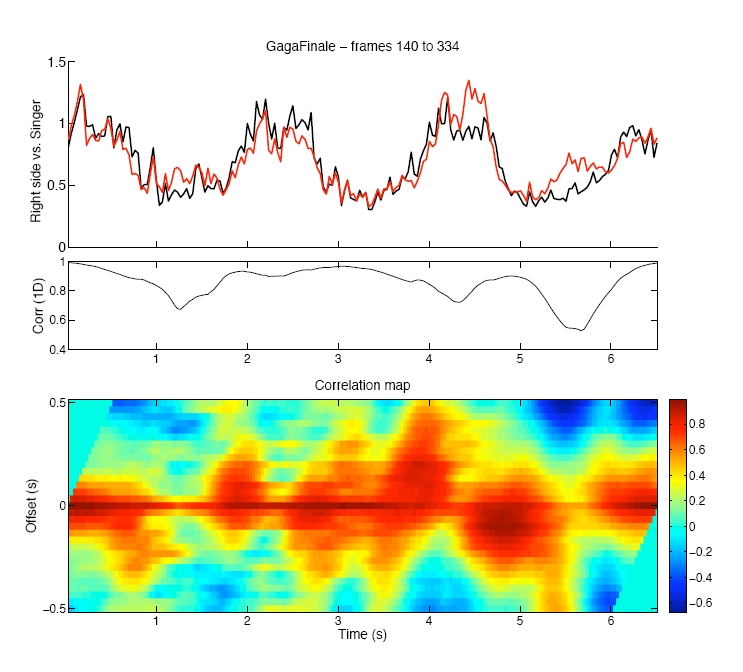

Figure 3. Quantification of coordination between two sections of audience on either side of the singer (see Fig. 2). The overlay (top panel) of the summed motion amplitudes (vertical axis) for the two sections over several seconds (horizontal axis) confirms that different sections of the audience are moving in near synchrony. This is supported by the high instantaneous correlation calculated for the two regions (middle panel). By comparison, as shown in Figure 4, the coordination between the audience and the singer is also strong, but shows greater divergence in the overlaid amplitudes (top) and larger fluctuations in the instantaneous correlation (middle).

Figure 4. Coordination computed between the singer and the audience to his right (see Fig. 2). Top: Time-varying sums for the singer and audience are overlaid. Middle: Instantaneous correlation at zero temporal offset. Bottom: Instantaneous correlation plotted through time as a function of temporal offset of the two signals (vertical axis). Color denotes correlation values: red is positive, blue is negative.

Thus far we have discussed only the coordination of signals where pairs of values are compared at the same moment in time. This is not sufficient since concurrent coordination generally entails some degree of temporal offset and fluctuations, which themselves may be integral to the coordination structure. Even highly skilled execution of a musical score shows temporal fluctuations, where strict synchrony would degrade the perceived quality of the music by making it sound mechanical or too precise. In order to incorporate fixed and variable temporal offsets in the coordination analysis, our algorithm computes instantaneous correlation across a range of temporal offsets. This is shown in the bottom panels of Figures 3-4 where temporal offset before and after zero-offset is shown on the vertical axis and correlation value is denoted by color. Although the visualization, as shown in the figures, is not easy to interpret qualitatively, we can now quantify coordination of behavior regardless of either fixed or variable temporal offsets.
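To make the idea of correlation as a function of both time and temporal offset concrete, here is a minimal sketch. It is not the algorithm of [1], whose details are not given in this overview: as a stand-in it uses a plain Pearson correlation in a centered sliding window, repeated for each offset. The names `windowed_corr` and `correlation_map` and the parameters `max_offset` and `half_width` are assumptions made for the example.

```python
import numpy as np


def windowed_corr(x, y, half_width):
    """Pearson correlation of x and y in a centered sliding window.

    Samples too close to either end for a full window are left as NaN.
    """
    n = len(x)
    r = np.full(n, np.nan)
    for t in range(half_width, n - half_width):
        xs = x[t - half_width:t + half_width + 1]
        ys = y[t - half_width:t + half_width + 1]
        xs = xs - xs.mean()
        ys = ys - ys.mean()
        denom = np.sqrt((xs ** 2).sum() * (ys ** 2).sum())
        if denom > 0:
            r[t] = (xs * ys).sum() / denom
    return r


def correlation_map(x, y, max_offset, half_width):
    """Windowed correlation over time for a range of temporal offsets.

    Row i holds the correlation between x[t] and y[t + offsets[i]],
    indexed by the time t of the x sample.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    offsets = np.arange(-max_offset, max_offset + 1)
    cmap = np.full((len(offsets), n), np.nan)
    for i, d in enumerate(offsets):
        if d >= 0:
            xs, ys, start = x[:n - d], y[d:], 0
        else:
            xs, ys, start = x[-d:], y[:n + d], -d
        r = windowed_corr(xs, ys, half_width)
        cmap[i, start:start + len(r)] = r
    return offsets, cmap
```

In this sketch the row at offset zero corresponds to the kind of curve shown in the middle panels of Figures 3-4, and the full array corresponds to the kind of map shown in the bottom panels. A fixed lag between the two signals would appear as a band of high correlation away from the zero-offset row, and a drifting lag as a band that moves up or down over time.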

Summary

The examination of coordination conducted in this study shows that within the span of a single 3-4 minute song, a skilled performer can achieve a high degree of coordination with a large audience. The analysis further reveals that the coordination itself follows a time course: improving rapidly early on, peaking about half-way through the song, and then actually declining somewhat in the last minute. Is this all due to the skill of the performer? We think not; equally crucial to the success of the observed synchronization is the skill of the audience, which has extensive knowledge of the music prior to the performance and likely has prior experience with large venue events.

References

[1] A. V. Barbosa, H. C. Yehia, and E. Vatikiotis-Bateson, "Algorithm for computing spatiotemporal coordination," in Proceedings of the International Conference on Auditory-Visual Speech Processing (AVSP 2008), Tangalooma, Australia, September 2008.

[2] B. K. P. Horn and B. G. Schunck, "Determining optical flow," Artificial Intelligence, vol. 17, pp. 185-203, 1981.