3pID2 – Yanny or Laurel? Acoustic and non-acoustic cues that influence speech perception

Brian B. Monson, monson@illinois.edu

Speech and Hearing Science

University of Illinois at Urbana-Champaign

901 S Sixth St

Champaign, IL 61820

USA

Popular version of paper 3pID2, “Yanny or Laurel? Acoustic and non-acoustic cues that influence speech perception”

Presented Wednesday afternoon, November 7, 1:25-1:45pm, Crystal Ballroom FE

176th ASA Meeting, Victoria, Canada

“What do you hear?” This question, which divided the masses earlier this year, highlights the complex nature of speech perception and, more generally, of each individual’s perception of the world. The Yanny v. Laurel phenomenon makes clear that what we perceive depends not only upon the physics of the world around us, but also upon our individual anatomy and individual life experiences. For speech, this means our perception can be influenced greatly by individual differences in auditory anatomy, physiology, and function, but also by factors that may at first seem unrelated to speech.

In our research, we are learning that one’s ability (or inability) to hear at extended high frequencies can have a substantial influence on one’s performance in common speech perception tasks. These findings are striking because it has long been presumed that extended high-frequency hearing is not terribly useful for speech perception.

Extended high-frequency hearing is defined as the ability to hear at frequencies beyond 8,000 Hz. These are the highest audible frequencies for humans, are not typically assessed during standard hearing exams, and are believed to be of little consequence when it comes to speech. Notably, sensitivity to these frequencies is the first thing to go in most forms of hearing loss, and age-related extended high-frequency hearing loss begins early in life for nearly everyone. (This is why the infamous “mosquito tone” ringtones are audible to most teenagers but inaudible to most adults.)

Previous research from our lab and others has revealed that a surprising amount of speech information resides in the highest audible frequency range for humans, including information about the location of a speech source, the consonants and vowels being spoken, and the sex of the talker. Most recently, we ran two experiments assessing what happens when we simulate extended high-frequency hearing loss. We found that one’s ability to detect the head orientation of a talker is diminished without extended high frequencies. Why might that be important? Knowing a talker’s head orientation (i.e., “Is this person facing me or facing away from me?”) helps to answer the question of whether a spoken message is intended for you or someone else.

Relatedly, and most surprisingly, we found that restricting access to the extended high frequencies diminishes one’s ability to overcome the “cocktail party” problem. That is, extended high-frequency hearing improves one’s ability to “tune in” to a specific talker of interest when many interfering talkers are talking simultaneously, as when attending a cocktail party or other noisy gathering. Do you seem to have a harder time understanding speech at a cocktail party than you used to? Are you middle-aged? It may be that the typical age-related hearing loss at extended high frequencies is contributing to this problem.

Our hope is that assessment of hearing at extended high frequencies will become a routine part of audiological exams. This would allow us to determine the severity of extended high-frequency hearing loss in the population and whether some techniques (e.g., hearing aids) could be used to address it.
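For readers who want to hear what restricted access to the extended high frequencies might sound like, the following is a minimal sketch, not the procedure used in our experiments. It assumes the loss can be roughly approximated by removing everything above the 8,000 Hz boundary defined earlier, using a crude brick-wall filter in the frequency domain. The function names (`remove_ehf`, `band_energy`) and the two-tone test signal are hypothetical and chosen only for illustration.

```python
import numpy as np


def remove_ehf(signal, sample_rate, cutoff_hz=8000.0):
    """Zero all spectral content above cutoff_hz (assumed, crude brick-wall low-pass)."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    spectrum[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spectrum, n=len(signal))


def band_energy(signal, sample_rate, low_hz, high_hz):
    """Sum of squared spectral magnitudes for low_hz <= f < high_hz."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    band = (freqs >= low_hz) & (freqs < high_hz)
    return float(np.sum(np.abs(spectrum[band]) ** 2))


# Hypothetical test signal: a strong 1,000 Hz tone plus a weak 12,000 Hz tone,
# standing in for speech with a small amount of extended high-frequency energy.
sample_rate = 44100
t = np.arange(sample_rate) / sample_rate  # one second of samples
test_signal = np.sin(2 * np.pi * 1000 * t) + 0.1 * np.sin(2 * np.pi * 12000 * t)

filtered = remove_ehf(test_signal, sample_rate)

ehf_before = band_energy(test_signal, sample_rate, 8001.0, sample_rate / 2)
ehf_after = band_energy(filtered, sample_rate, 8001.0, sample_rate / 2)
print("EHF energy before:", ehf_before)
print("EHF energy after: ", ehf_after)
```

To try this on a real recording, the array `test_signal` would be replaced with audio samples recorded at a sample rate well above 16,000 Hz, since lower rates cannot capture content beyond 8,000 Hz in the first place. A real study would likely use a smoother filter than this one, which is kept simple for clarity.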

Figure 1. Spectrographic representation of the phrase “Oh, say, can you see by the dawn’s early light.” While the majority of energy in speech lies below about 6,000 Hz (dotted line), extended high-frequency (EHF) energy beyond 8,000 Hz is audible and assists with speech detection and comprehension.

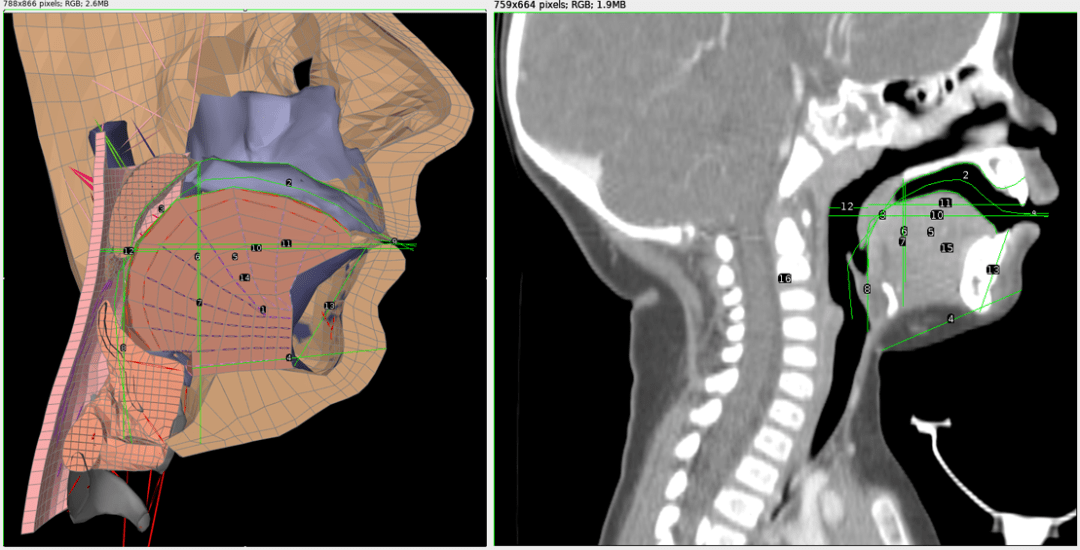
