4pSC15 – Reading aloud in a clear speaking style may interfere with sentence recognition memory

Sandie Keerstock – keerstock@utexas.edu

Rajka Smiljanic – rajka@austin.utexas.edu

Department of Linguistics, The University of Texas at Austin

305 E 23rd Street, B5100, Austin, TX 78712

Popular version of paper 4pSC15

Presented Thursday afternoon, May 16, 2019

177th ASA Meeting, Louisville, KY

Can you improve your memory by speaking clearly? If, for example, you are rehearsing for a presentation, what speaking style will better enhance your memory of the material: reading aloud in a clear speaking style, or reciting the words casually, as if speaking with a friend?

When conversing with a non-native listener or someone with a hearing problem, talkers spontaneously switch to clear speech: they slow down, speak louder, use a wider pitch range, and hyper-articulate their words. Compared to more casual speech, clear speech enhances a listener’s ability to understand speech in a noisy environment. Listeners also better recognize previously heard sentences and recall what was said if the information was spoken clearly.

Figure 1. Illustration of the procedure of the recognition memory task.

In this study, we set out to examine whether talkers, too, have better memory of what they said if they pronounced it clearly. In the training phase of the experiment, 60 native and 30 non-native English speakers were instructed to read aloud and memorize 60 sentences containing high-frequency words, such as “The hot sun warmed the ground,” as they were presented one by one on a screen. A cue on each screen told participants which speaking style to use, alternating between “clear” and “casual” every ten sentences. During the test phase, participants saw 120 sentences, one at a time, and identified each as “old” or “new”: 60 they had read aloud in one of the two styles, and 60 they had not seen before.
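To make the training design concrete, the short sketch below assigns a style cue to each of the 60 training sentences, switching every ten sentences. It shows that this design yields 30 clear and 30 casual sentences. The function name `style_schedule` is hypothetical, and which style came first is an assumption, since the article does not say.

```python
# Hypothetical sketch of the training-phase style cues: 60 sentences,
# alternating between "clear" and "casual" in blocks of 10.
# ASSUMPTION: the starting style is a parameter; the article does not specify it.

BLOCK_SIZE = 10   # style switches every ten sentences
N_TRAINING = 60   # sentences read aloud during training


def style_schedule(n_sentences=N_TRAINING, block_size=BLOCK_SIZE, first="clear"):
    """Return one style cue per sentence, alternating in blocks of `block_size`."""
    other = "casual" if first == "clear" else "clear"
    # Even-numbered blocks (0, 2, 4, ...) get `first`; odd-numbered blocks get `other`.
    return [first if (i // block_size) % 2 == 0 else other
            for i in range(n_sentences)]


schedule = style_schedule()
print(schedule.count("clear"), schedule.count("casual"))
```

With six blocks of ten, three blocks fall in each style, so each participant reads 30 sentences per style; the 60 new sentences added at test have no style of their own.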

Figure 2. Average d’ (discrimination sensitivity index) for native (n=60) and non-native English speakers (n=30) for sentences produced in clear (light blue) and casual (dark blue) speaking styles. Higher d’ scores indicate better discrimination between previously read (“old”) and unread (“new”) sentences in the recognition memory task. Error bars represent standard error.
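In signal detection theory, d’ is the difference between the z-transformed hit rate (old sentences correctly called “old”) and the z-transformed false-alarm rate (new sentences wrongly called “old”). The sketch below computes it with Python’s standard library. The log-linear correction for rates of 0 or 1 and the counts in the example are assumptions for illustration only; the article does not report which correction, if any, was used, and these are not data from the study.

```python
from statistics import NormalDist


def d_prime(hits, n_old, false_alarms, n_new):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate).

    ASSUMPTION: a log-linear correction (add 0.5 to each count and 1 to each
    total) keeps rates away from 0 and 1, where z-scores would be infinite.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    hit_rate = (hits + 0.5) / (n_old + 1)
    fa_rate = (false_alarms + 0.5) / (n_new + 1)
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts for one participant (NOT data from the study):
# 30 old sentences per style, and 60 new sentences whose false alarms
# are shared by both styles.
false_alarms = 12
print("clear: ", round(d_prime(24, 30, false_alarms, 60), 2))
print("casual:", round(d_prime(27, 30, false_alarms, 60), 2))
```

Because new sentences were never read aloud, they carry no speaking style, so in this sketch the same false-alarm rate is paired with each style’s hit rate; a higher hit rate for casual sentences then yields a higher casual d’.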

Unexpectedly, both native and non-native talkers in this experiment showed better recognition memory for sentences they had read aloud in a casual style. Unlike in perception, where hearing clearly spoken sentences improves listeners’ memory, the present findings suggest a memory cost when talkers themselves produce clear sentences. This asymmetry between the effects of production and perception on memory may nonetheless stem from the same underlying mechanism, described by the Effortfulness Hypothesis (McCoy et al. 2005). In perception, processing harder-to-understand casual speech uses more cognitive resources, leaving fewer available for storing information in memory. In production, the reverse may hold: hyper-articulating clear sentences may deplete more cognitive resources, which could lead to poorer memory encoding. This study suggests that the memory benefit of clear speech may be limited to listeners’ long-term retention of spoken information and may not extend to talkers.

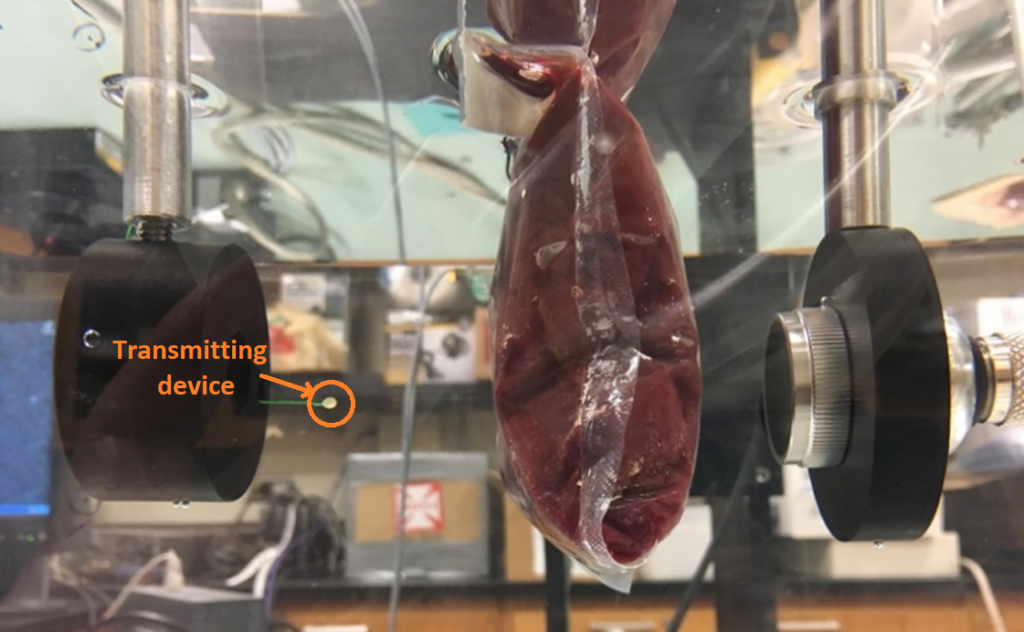

Figure 1: Fabricated prototype of origami folded frequency selective surface made of a folded plastic sheet and copper prints, ready to be tested in an anechoic chamber – a room padded with radio-wave-absorbing foam pyramids.

Figure 1: Fabricated prototype of origami folded frequency selective surface made of a folded plastic sheet and copper prints, ready to be tested in an anechoic chamber – a room padded with radio-wave-absorbing foam pyramids.