4aMU1 – Are phantom partials produced in piano strings?

Thomas Moore – tmoore@rollins.edu

Lauren Neldner – lneldner@rollins.edu

Eric Rokni – erokni@rollins.edu

Department of Physics

Rollins College

1000 Holt Ave – 2743

Winter Park, FL 32789

Popular version of paper 4aMU1, “Are phantom partials produced in piano strings?”

Presented Thursday morning, November 8, 2018, 8:55-9:10 AM, Crystal Ballroom (FE)

176th ASA Meeting, Victoria, BC

The unique sound of the piano, or any stringed musical instrument, begins with the vibrating string. The string vibrations produce many different musical pitches simultaneously, most of which are harmonics of the note being played. The final sound depends both on the relative power in each of the harmonics in the string and on how efficiently these sounds are transferred to the air. This type of arrangement, where there is a source of the sound (the strings) and a mechanism to transmit the sound to the air (the wooden parts of a piano), is often referred to as a source-filter system. The vibrations from the string are said to be filtered through the bridge and soundboard because these wooden components do not transmit every pitch equally efficiently. In this picture, the wood can change the balance of the sound created by the string, but it cannot add new sounds.
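The source-filter picture can be illustrated with a minimal sketch. The spectrum values and the `soundboard_gain` response below are made-up numbers chosen only for illustration, not measurements from the work described here. The point is that a pure filter rescales components that are already present and never introduces a frequency the source lacks.

```python
def apply_filter(source, gain):
    """Scale each source component by the filter's gain at that frequency."""
    return {freq: amp * gain(freq) for freq, amp in source.items()}

# Hypothetical string spectrum: frequency in Hz -> relative amplitude.
string_spectrum = {100.0: 1.0, 200.0: 0.5, 300.0: 0.25}

def soundboard_gain(freq):
    """Made-up response that transmits low frequencies more efficiently."""
    return 1.0 / (1.0 + (freq / 250.0) ** 2)

radiated = apply_filter(string_spectrum, soundboard_gain)

# The balance between components changes, but the set of frequencies does not.
for freq in sorted(radiated):
    print(f"{freq:6.1f} Hz: {string_spectrum[freq]:.3f} -> {radiated[freq]:.3f}")
```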

The work reported in this presentation shows that this idea of how the piano works is flawed. Experiments have shown that the wooden parts of the piano can produce sounds that are not created in the string. That is, the wood can be a source of sound as well as the string, and it is not always simply a filter. The sound originating in the wood occurs at frequencies that are sums and differences of the frequencies found in the vibrations of the string, but these components are created in the wood, not the string.
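To make "sums and differences" concrete, here is a rough sketch. It assumes the standard stiff-string formula for slightly inharmonic partials, f_n = n·f0·sqrt(1 + B·n²), which is not stated in this article, and the values of `f0` and `B` are hypothetical. Because the partials of a real piano string are slightly sharp of exact harmonics, the sum of two partial frequencies falls near, but not exactly on, another partial.

```python
import math

def string_partial(n, f0, B):
    """Frequency of the n-th partial of a stiff string (assumed model)."""
    return n * f0 * math.sqrt(1.0 + B * n * n)

def sum_and_difference(fa, fb):
    """Sum and difference frequencies of two components."""
    return fa + fb, abs(fa - fb)

f0 = 65.4   # hypothetical fundamental in Hz (a low piano note)
B = 1e-4    # hypothetical inharmonicity coefficient

f1, f2, f3 = (string_partial(n, f0, B) for n in (1, 2, 3))
phantom_sum, phantom_diff = sum_and_difference(f1, f2)

print(f"partials 1-3:     {f1:.3f}, {f2:.3f}, {f3:.3f} Hz")
print(f"sum of 1 and 2:   {phantom_sum:.3f} Hz (slightly below partial 3)")
print(f"difference 2 - 1: {phantom_diff:.3f} Hz (slightly above partial 1)")
```

In this model the sum frequency sits a small fraction of a hertz away from the third partial, which is why such components can show up as separate peaks in a measured spectrum.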

These anomalous components in the sound from a piano, commonly referred to as phantom partials, were first reported in 1944,1 and work over the following 70 years resulted in the conclusion that they originate in the stretching of the string as it vibrates.2,3 Therefore, the source of all of the sound from a piano is still considered to be the string. This idea has been incorporated into the most complex computer models of the piano, which may eventually be used to study the effects of changing the piano design without having to build a new piano to determine if the change is desirable.

The commonly accepted idea that phantom partials can originate in the string is not wrong; some of the power in the phantom partials is created by the string motion. However, the work reported in this presentation shows that only a small part of that power comes from the string. Much more of it is created in the wood. This has implications for those trying to build computer models of the piano, as well as those trying to understand the difference between a good piano and a truly great one.

Before this new information can be included in the latest computer models, the process that creates phantom partials in the wood must be understood. The next step is to develop a theory that can describe the process, and test the theory against further experiments. But the idea that the piano is merely a source-filter system will have to be abandoned if we are to understand this wonderful and ubiquitous musical instrument.

1) A. F. Knoblaugh, “The clang tone of the pianoforte,” J. Acoust. Soc. Am. 16, 102 (1944).

2) H. A. Conklin, “Generation of partials due to nonlinear mixing in a stringed instrument,” J. Acoust. Soc. Am. 105, 536-545 (1999).

3) N. Etchenique, S. R. Collin, and T. R. Moore, “Coupling of transverse and longitudinal waves in piano strings,” J. Acoust. Soc. Am. 137, 1766-1771 (2015).

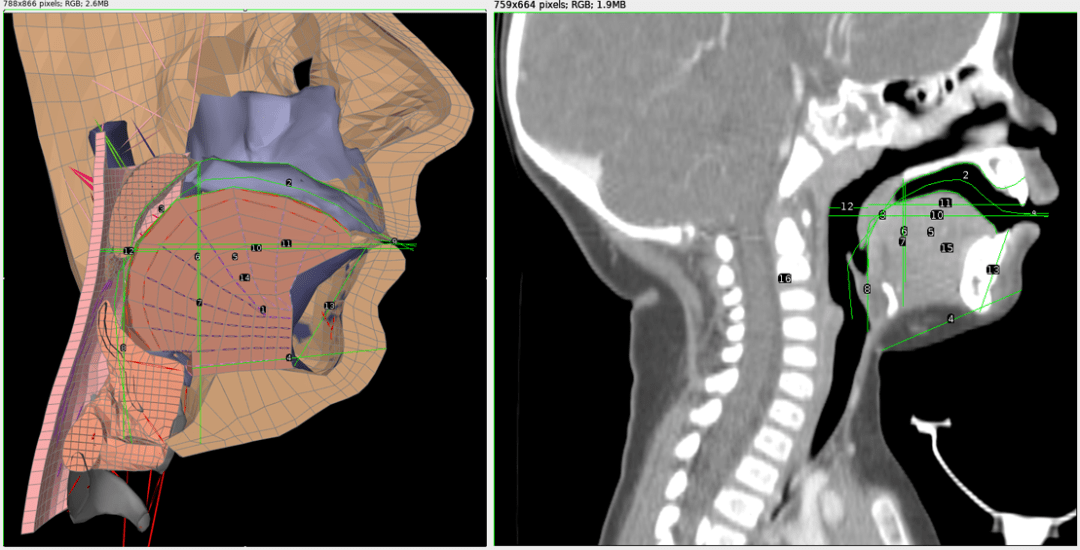

Figure 1: Left: A cross-section of the Frank model of an adult vocal tract with measurement lines. Right: A cross-sectional CT scan image of an 11 month old infant with measurement lines. The relative proportions of each vocal tract were compared to generate the infant model.

Figure 1: Left: A cross-section of the Frank model of an adult vocal tract with measurement lines. Right: A cross-sectional CT scan image of an 11 month old infant with measurement lines. The relative proportions of each vocal tract were compared to generate the infant model. Figure 2: A modified Frank vocal tract conforming to infant proportions.

Figure 2: A modified Frank vocal tract conforming to infant proportions.