3pBA4 – Artificial Intelligence for Automatic Tracking of the Tongue in Real-time Ultrasound Data

M. Hamed Mozaffari – mmoza102@uottawa.ca

Won-Sook Lee – wslee@uottawa.ca

School of Electrical Engineering and Computer Science (EECS)

University of Ottawa

800 King Edward Avenue

Ottawa, Ontario, Canada K1N 6N5

David Sankoff – sankoff@uottawa.ca

Department of Mathematics and Statistics

University of Ottawa

150 Louis Pasteur Pvt.

Ottawa, Ontario K1N 6N5

Popular version of paper 3pBA4

Presented Wednesday afternoon, December 4, 2019

178th ASA Meeting, San Diego, CA

Medical ultrasound is a well-established tool in speech research for studying tongue motion and speech articulation. Its popularity for tongue visualization stems from several attractive characteristics. It images at a reasonably rapid frame rate, which allows researchers to visualize subtle and swift gestures of the tongue during speech in real time. Moreover, ultrasound technology is relatively affordable, portable, non-invasive, and clinically safe.

The dynamic information in ultrasound tongue image sequences can be valuable to linguistics researchers, and exploiting it is of great interest in many recent studies. Ultrasound imaging has been used for tongue motion analysis in the treatment of speech sound disorders, in comparisons of healthy and impaired speech production, and in second language training and rehabilitation, to name a few applications.

During speech data acquisition, an ultrasound probe placed under the user's jaw images the tongue surface in midsagittal or coronal view in real time. The tongue dorsum appears in this view as a thick, long, bright, and continuous region, because the ultrasound signal is reflected at the boundary between the tongue tissue and the surrounding air. Because ultrasound images are noisy and low in contrast, locating the tongue surface is not an easy task for non-expert users.

Picture 1: An illustration of the human head and a mid-sagittal cross-section view of the tongue. The tongue surface in ultrasound data can be marked with a guide curve. The highlighted lines (red and yellow) help users track the tongue more easily in real time.

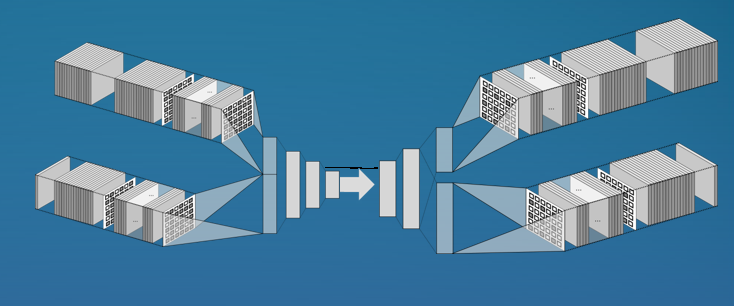

To address this difficulty, we proposed a novel artificial intelligence method, named BowNet, that tracks the tongue surface in real time for non-expert users. With BowNet, users can see a highlighted version of their tongue surface in real time during speech, without any prior training. Representing the tongue as a tracked contour also makes it easier for linguists to use BowNet in their quantitative studies.
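
As a rough illustration of what "tracking the tongue using a contour" can mean in practice, the sketch below turns a per-pixel "tongue likelihood" map into a single contour by keeping the most likely row in each image column. This is not the BowNet method itself: the function name `extract_contour`, the `threshold` parameter, and the toy likelihood map are all hypothetical, and the map is simply assumed to come from some segmentation model.

```python
from typing import List, Tuple


def extract_contour(prob_map: List[List[float]],
                    threshold: float = 0.5) -> List[Tuple[int, int]]:
    """Return one (column, row) point per column whose peak value
    reaches the threshold. Hypothetical helper, not part of BowNet."""
    if not prob_map:
        return []
    n_rows = len(prob_map)
    n_cols = len(prob_map[0])
    contour = []
    for col in range(n_cols):
        # Row with the highest tongue likelihood in this column.
        best_row = max(range(n_rows), key=lambda r: prob_map[r][col])
        # Skip columns where even the best pixel is unlikely to be tongue.
        if prob_map[best_row][col] >= threshold:
            contour.append((col, best_row))
    return contour


# Toy 4x5 likelihood map (assumed output of some segmentation model).
toy_map = [
    [0.0, 0.1, 0.9, 0.1, 0.0],
    [0.1, 0.8, 0.2, 0.7, 0.0],
    [0.9, 0.1, 0.0, 0.2, 0.3],
    [0.0, 0.0, 0.0, 0.0, 0.1],
]

contour = extract_contour(toy_map)
print(contour)  # [(0, 2), (1, 1), (2, 0), (3, 1)]
```

The last column is dropped because its strongest response (0.3) falls below the assumed threshold, which mirrors how a tracker might leave gaps where the tongue surface is not visible.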

Compared with similar methods, BowNet performs well in terms of accuracy and automation, and it can be applied to different types of ultrasound data. Its real-time performance enables researchers to propose new second language training methods. The performance of the BowNet models is shown in Video 1.

Video 1: A demonstration of the BowNet models in comparison with similar recent methods. Better generalization over different datasets, less noise, and better tongue tracking can be seen. Failure cases are indicated in colour in the video.

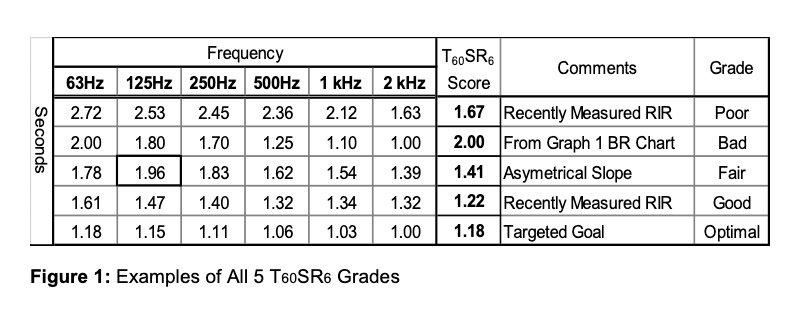
