1aNS6 – Weather or not: Meteorology affects presence of shock waves in fighter aircraft noise

Kent Gee – kentgee@byu.edu

Brent Reichman – brent.reichman@gmail.com

Brigham Young University

N283 Eyring Science Center

Provo, UT 84602

Alan Wall – alan.wall.4@us.af.mil

Air Force Research Laboratory

2610 Seventh Street, Bldg 441

Wright-Patterson Air Force Base, OH 45433

Popular version of paper 1aNS6 Meteorological effects on long-range nonlinear propagation of jet noise from a static, high-performance military aircraft

Presented Monday morning, Nov. 5, 2018

176th ASA Meeting, Victoria, British Columbia

Read the article in Proceedings of Meetings on Acoustics

The sound of a fighter jet aircraft as it takes off or flies by at high power is unique, in part, because of “crackle.” Crackle, described as sounding like tearing paper or static from a poorly connected loudspeaker, is considered an annoying and dominant part of the overall aircraft noise. Crackle can be heard several times in this YouTube video of an F-35 at the Paris Air Show.

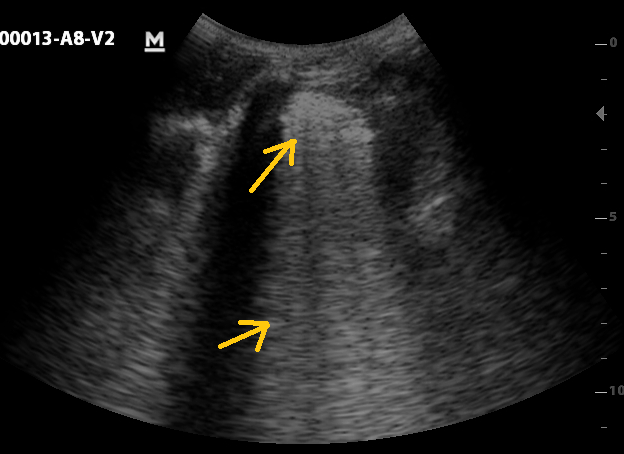

What causes crackle? This distinct sound quality is caused by shock waves embedded in the noise radiated from the high-thrust exhaust, which leaves the jet engine traveling much faster than the speed of sound. These supersonic jet plumes create noise so intense that the sound travels nonlinearly: the speed of sound is no longer independent of sound amplitude. Faster-traveling, high-pressure parts of the sound wave overtake slower-traveling, low-pressure parts, creating shock waves that form more readily with increasing sound level. The animation below shows the formation of discontinuous shock waves as part of nonlinear propagation, and the accompanying sound files reveal how nonlinear propagation and shock formation turn a smooth-sounding noise signal into one that contains significant crackle.
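The steepening described above can be illustrated with a short numerical sketch. This is not the model used in the paper: it assumes a single-frequency, lossless plane wave, whereas real jet noise is broadband, spreads out as it travels, and is absorbed by the atmosphere. The air density, sound speed, source amplitude, and frequency are nominal values chosen only for illustration, and all function names are hypothetical. In this idealized case, each part of the wave arrives earlier by an amount proportional to its pressure, and a shock first forms at the distance x̄ = ρ₀c₀³ / (βωp₀).

```python
import numpy as np

# Nominal values for air near the ground (assumptions for illustration only).
RHO0 = 1.21   # ambient density, kg/m^3
C0 = 343.0    # small-signal sound speed, m/s
BETA = 1.2    # coefficient of nonlinearity for air


def shock_formation_distance(p0, freq):
    """Distance at which a lossless plane sine wave of pressure amplitude
    p0 (Pa) and frequency freq (Hz) first forms a shock."""
    omega = 2.0 * np.pi * freq
    return RHO0 * C0**3 / (BETA * omega * p0)


def distorted_arrival_times(t, p, x):
    """Retarded arrival time of each waveform sample after traveling x meters.
    High-pressure samples travel faster, so they arrive earlier.
    Only valid before the shock formation distance."""
    return t - x * BETA * p / (RHO0 * C0**3)


def max_slope(t, p):
    """Steepest pressure rise (Pa/s) in a sampled waveform."""
    return np.max(np.diff(p) / np.diff(t))


def demo(p0=2000.0, freq=300.0, fractions=(0.0, 0.5, 0.9)):
    """Return the shock formation distance and the steepest rise at several
    fractions of that distance for one cycle of a sine wave."""
    t = np.linspace(0.0, 1.0 / freq, 2001)
    p = p0 * np.sin(2.0 * np.pi * freq * t)
    x_bar = shock_formation_distance(p0, freq)
    slopes = []
    for frac in fractions:
        tau = distorted_arrival_times(t, p, frac * x_bar)
        slopes.append(max_slope(tau, p))
    return x_bar, slopes


if __name__ == "__main__":
    x_bar, slopes = demo()
    print(f"shock formation distance: {x_bar:.1f} m")
    for frac, s in zip((0.0, 0.5, 0.9), slopes):
        print(f"x = {frac:.1f} x_bar: steepest rise = {s:.3e} Pa/s")
```

In this sketch, doubling the source amplitude halves the shock formation distance, which matches the statement that shocks form more readily at higher sound levels. The steepest rise in the waveform also grows rapidly as the wave approaches that distance.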

This paper describes how shock waves in jet aircraft noise can be affected by local weather changes. As the shocks travel through the atmosphere, they slowly dissipate. However, the wind and temperature profiles near the ground can greatly change shock characteristics and, therefore, the crackle heard from a military jet aircraft. Recent noise measurements of tied-down F-35 aircraft help to describe these changes. The noise was recorded by microphones at several distances and angles, as far as 4000 ft away from the aircraft. While measurements made at short distances from the aircraft show only small differences in shock characteristics with changing temperature and wind, data collected at distances greater than 1000 ft reveal much greater sensitivity to local meteorology. At 2000 and 4000 ft, cranes were used to elevate microphones as high as 100 ft in the air, and the recordings showed something surprising. Not only did the sound levels and shock characteristics vary greatly with height, but in some cases the shock content increased as the sound level decreased. This finding conflicts with the usual behavior of nonlinear sound waves, in which shocks form more readily at higher sound levels.

These results show that nonlinear propagation of jet noise through real atmospheric conditions is more complex than previously anticipated. More broadly, this study points to the need to investigate in greater detail how the shock waves and crackle present in jet noise are affected by small weather changes. Improved predictions of received noise, including crackle, will help military bases plan aircraft operations to minimize community impact.

Acknowledgment: These measurements were funded by the F-35 Joint Program Office. Distribution A: Approved for public release; distribution unlimited. Cleared 11/2/2018; JSF18-1022.