2aSC3 – Studying Vocal Fold Non-Stationary Behavior during Connected Speech Using High-Speed Videoendoscopy

Maryam Naghibolhosseini – naghib@msu.edu

Dimitar D. Deliyski – ddd@msu.edu

Department of Communicative Sciences and Disorders, Michigan State University

1026 Red Cedar Rd.

East Lansing, MI 48824

Stephanie R.C. Zacharias – Zacharias.Stephanie@mayo.edu

Department of Otolaryngology Head & Neck Surgery, Mayo Clinic

13400 E Shea Blvd.

Scottsdale, AZ 85259

Alessandro de Alarcon – alessandro.dealarcon@cchmc.org

Division of Pediatric Otolaryngology, Cincinnati Children’s Hospital Medical Center

3333 Burnet Ave

Cincinnati, OH 45229

Robert F. Orlikoff – orlikoffr16@ecu.edu

College of Allied Health Sciences, East Carolina University

2150 West 5th St.

Greenville, NC 27834

Popular version of paper 2aSC3

Presented Tuesday morning, Nov 6, 2018

176th ASA Meeting, Victoria, BC, Canada

You can feel your vocal folds vibrate when you place your hand on your neck while saying /a/. Studying how the vocal folds vibrate tells us about the mechanisms of voice production. A better understanding of voice production in normal and disordered voices could help improve voice assessment and treatment strategies. One technique for studying vocal fold function is laryngeal imaging. The most sophisticated tool for laryngeal imaging is high-speed videoendoscopy (HSV), which records vocal fold vibrations with high temporal resolution (thousands of frames per second, fps). The recent coupling of HSV systems with flexible nasolaryngoscopes has given us the unique opportunity to record vocal fold vibrations during connected speech for the first time.

In this study, HSV data were obtained from a vocally normal 38-year-old female reading the “Rainbow Passage,” using a custom-built HSV system at 4,000 fps. The recording lasted 29.14 seconds, for a total of 116,543 frames. The following video shows one second of the recorded HSV played back at 30 fps.

Video 1

The HSV dataset is large: just looking at every frame would take about 32 hours at 1 second per image frame! With a dataset this large, manual analysis is not feasible and automated computerized methods are required. The goal of this research project is to develop automatic algorithms for the analysis of HSV in running speech to extract meaningful information about vocal fold function. How the vibration of the vocal folds starts and how it ends during phonation are critical factors in studying the pathophysiology of voice disorders. Hence, this project studies the onset and offset of phonation, where the vocal folds behave in a non-stationary way.
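The figures quoted above follow from simple arithmetic on the frame count and frame rate. The short Python sketch below reproduces them; the function names are illustrative and not part of the study's software.

```python
FRAME_RATE_FPS = 4000      # recording frame rate reported in the text
TOTAL_FRAMES = 116_543     # total number of recorded frames reported in the text


def recording_seconds(total_frames, fps):
    """Length of the recording in seconds."""
    return total_frames / fps


def manual_review_hours(total_frames, seconds_per_frame=1.0):
    """Hours needed to look at every frame once."""
    return total_frames * seconds_per_frame / 3600


def playback_seconds(n_frames, playback_fps=30):
    """Time needed to play n_frames at a slowed-down playback rate."""
    return n_frames / playback_fps


print(round(recording_seconds(TOTAL_FRAMES, FRAME_RATE_FPS), 2))  # 29.14
print(round(manual_review_hours(TOTAL_FRAMES), 1))                # 32.4
print(round(playback_seconds(FRAME_RATE_FPS), 1))                 # 133.3
```

The last line shows why the demonstration video is long: one second of recording (4,000 frames) takes a little over two minutes to watch at 30 fps.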

We have developed the following automated algorithms: temporal segmentation, motion compensation, spatial segmentation, and onset/offset measurements. The temporal segmentation algorithm determines the onset and offset timestamps of phonation. To do so, it measures the glottal area waveform, that is, how the dark area between the vocal folds changes over time. The area changes because the vocal folds vibrate. This waveform can be converted to an acoustic signal that we can listen to. In the following video, you can follow the “Rainbow Passage” text while listening to the audio extracted from the glottal area waveform. Note that this audio signal was extracted solely from the HSV images; no acoustic signal was recorded from the subject.

Video 2
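The idea behind the glottal area waveform and temporal segmentation can be sketched in a few lines of Python. This is a simplified illustration, not the study's algorithm: it assumes each frame is a list of rows of grayscale values (0 to 255), and the darkness threshold, window length, and minimum swing are hypothetical parameters chosen for the example.

```python
def glottal_area(frame, dark_threshold=50):
    """Count the pixels darker than the threshold in one grayscale frame."""
    return sum(1 for row in frame for px in row if px < dark_threshold)


def area_waveform(frames, dark_threshold=50):
    """Glottal area (in pixels) for every frame, in recording order."""
    return [glottal_area(frame, dark_threshold) for frame in frames]


def voiced_segments(waveform, window=4, min_swing=2):
    """Return (first_frame, last_frame) pairs where the area oscillates.

    A window counts as oscillating when the area inside it varies by at
    least min_swing pixels. Each segment runs from the start of its first
    oscillating window to the end of its last one, so the boundaries are
    padded by up to window - 1 frames on either side.
    """
    segments = []
    start = None
    for i in range(len(waveform) - window + 1):
        chunk = waveform[i:i + window]
        oscillating = max(chunk) - min(chunk) >= min_swing
        if oscillating and start is None:
            start = i
        elif not oscillating and start is not None:
            segments.append((start, i + window - 2))
            start = None
    if start is not None:
        segments.append((start, len(waveform) - 1))
    return segments


# Toy waveform: steady area, six frames of vibration, steady area again.
toy = [3] * 6 + [0, 4, 0, 4, 0, 4] + [3] * 6
print(voiced_segments(toy))  # [(3, 13)]
```

Dividing a frame index by the frame rate (4,000 fps in this recording) turns each boundary into a timestamp in seconds.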

A motion compensation algorithm was developed to align the vocal folds across frames, compensating for the movement of the laryngeal tissue during connected speech. As the following video shows, after motion compensation the vocal folds stay in almost the same location from one cropped frame to the next.

Video 3
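The text does not describe how the alignment is computed, so the sketch below uses a deliberately simple stand-in: it follows the centroid of the dark glottal pixels and crops a fixed-size window around it. The function names, the threshold, and the centroid approach itself are assumptions made for illustration only.

```python
def dark_centroid(frame, dark_threshold=50):
    """Return the (row, col) centroid of the dark pixels, or None if there are none."""
    row_sum = col_sum = count = 0
    for r, row in enumerate(frame):
        for c, px in enumerate(row):
            if px < dark_threshold:
                row_sum += r
                col_sum += c
                count += 1
    if count == 0:
        return None
    return (round(row_sum / count), round(col_sum / count))


def crop_around(frame, center, half_h, half_w):
    """Crop a (2*half_h + 1) x (2*half_w + 1) window, kept inside the frame.

    Assumes the frame is at least as large as the crop window.
    """
    height, width = len(frame), len(frame[0])
    crop_h, crop_w = 2 * half_h + 1, 2 * half_w + 1
    top = max(0, min(center[0] - half_h, height - crop_h))
    left = max(0, min(center[1] - half_w, width - crop_w))
    return [row[left:left + crop_w] for row in frame[top:top + crop_h]]


def stabilize(frames, half_h, half_w, dark_threshold=50):
    """Crop every frame around its glottal centroid.

    When a frame has no dark pixels (closed glottis), the previous
    centroid is reused; if there is none yet, the frame center is used.
    """
    stabilized = []
    last_center = None
    for frame in frames:
        center = dark_centroid(frame, dark_threshold) or last_center
        if center is None:
            center = (len(frame) // 2, len(frame[0]) // 2)
        stabilized.append(crop_around(frame, center, half_h, half_w))
        last_center = center
    return stabilized
```

In this toy version, a glottis that drifts across the full frame ends up at the center of every cropped frame, which is the effect the video illustrates.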

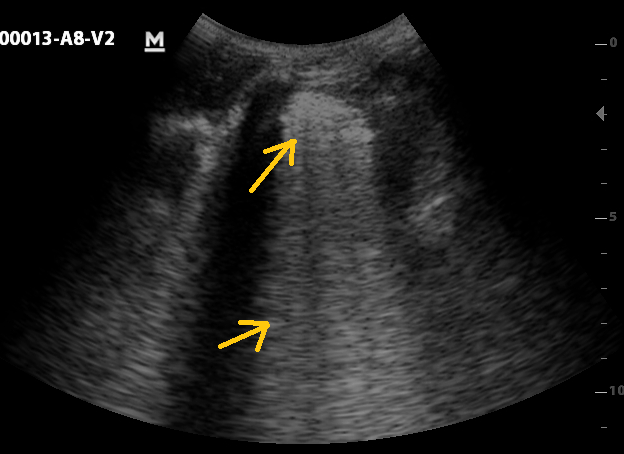

Spatial segmentation was performed to extract the edges of the vibrating vocal folds from HSV kymograms. The kymograms were extracted by placing a line across the medial section of each frame and capturing the vocal fold vibrations along this line over time. An active contour modeling approach was applied to the HSV kymograms of each vocalized segment to provide an analytic description of the vocal fold edges across the frames. You can see the result of spatial segmentation for one vocalization in the following figure.

Figure 1
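Building a kymogram amounts to taking the same line of pixels from every frame and stacking those lines side by side in time. The Python sketch below shows this for frames stored as lists of rows of grayscale values. Note that its edge finder is a plain darkness threshold, used here as a much simpler stand-in for the active contour model applied in the study; the threshold value and function names are assumptions.

```python
def kymogram(frames, row_index=None):
    """Stack one image row from every frame into a position-by-time image.

    By default the middle row of the frame is used. In the result,
    kymo[position][t] is the pixel at that position along the line in frame t.
    """
    if row_index is None:
        row_index = len(frames[0]) // 2
    lines = [frame[row_index] for frame in frames]
    return [list(column) for column in zip(*lines)]


def fold_edges(kymo, dark_threshold=50):
    """For each time step, return (first_dark, last_dark) positions or None.

    The two positions approximate the edges of the two vocal folds;
    None means no dark gap was found (the folds are in contact).
    """
    edges = []
    for t in range(len(kymo[0])):
        dark = [pos for pos in range(len(kymo)) if kymo[pos][t] < dark_threshold]
        edges.append((dark[0], dark[-1]) if dark else None)
    return edges


# Three toy 3x5 frames: closed glottis, small opening, wider opening.
bright = [200] * 5
frames = [
    [bright, [200, 200, 200, 200, 200], bright],
    [bright, [200, 200, 0, 200, 200], bright],
    [bright, [200, 0, 0, 0, 200], bright],
]
print(fold_edges(kymogram(frames)))  # [None, (2, 2), (1, 3)]
```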

The glottal attack time (the time from the first vocal fold oscillation to the first contact), the offset time (the time from the last vocal fold contact to the last oscillation), the amplification ratio, and the damping ratio were measured from the spatially segmented kymogram shown in the figure. The amplification ratio shows how the oscillation grows at the beginning of phonation, and the damping ratio quantifies how the oscillation dies out at the offset of phonation. These measures are useful for describing the laryngeal dynamics of voice production.
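Once the relevant frames have been identified, the two time measures reduce to converting a frame count into milliseconds. The sketch below shows that conversion. The text does not give formulas for the amplification and damping ratios, so `mean_peak_ratio` is only one plausible stand-in (the average ratio between successive cycle peak amplitudes) and should not be read as the study's definition; all names here are hypothetical.

```python
def frames_to_ms(n_frames, fps=4000):
    """Convert a number of frames into milliseconds."""
    return n_frames * 1000 / fps


def glottal_attack_time_ms(first_oscillation_frame, first_contact_frame, fps=4000):
    """Time from the first vocal fold oscillation to the first contact."""
    return frames_to_ms(first_contact_frame - first_oscillation_frame, fps)


def offset_time_ms(last_contact_frame, last_oscillation_frame, fps=4000):
    """Time from the last vocal fold contact to the last oscillation."""
    return frames_to_ms(last_oscillation_frame - last_contact_frame, fps)


def mean_peak_ratio(peak_amplitudes):
    """Average ratio between successive cycle peak amplitudes.

    ASSUMPTION: an illustrative measure, not the study's formula.
    Values above 1 indicate growing oscillation (as at onset),
    values below 1 indicate decaying oscillation (as at offset).
    """
    ratios = [later / earlier
              for earlier, later in zip(peak_amplitudes, peak_amplitudes[1:])]
    return sum(ratios) / len(ratios)


print(glottal_attack_time_ms(100, 140))  # 10.0
print(mean_peak_ratio([1, 2, 4, 8]))     # 2.0
print(mean_peak_ratio([8, 4, 2, 1]))     # 0.5
```

At 4,000 fps each frame spans 0.25 ms, which sets the time resolution of both the attack and offset measurements.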