2aNSa2 – The effect of transportation noise on sleep and health

Jo M. Solet Joanne_Solet@HMS.Harvard.edu

Harvard Medical School and Cambridge Health Alliance

Assistant Professor of Medicine

15 Berkeley St, Cambridge, MA 02138

617 461 9006

Bonnie Schnitta bonnie@soundsense.com

SoundSense, LLC

Founder and CEO

PO Box 1360, 39 Industrial Road, Wainscott, NY 11975

631 537 4220

Popular version of paper 2aNSa2

Presented Tuesday morning, December 3, 2019

178th ASA Meeting, San Diego, CA

As transportation noise continues to rise, social justice issues are being raised over its impacts on sleep, health, safety and well-being.

The Federal Government, through the Federal Aviation Administration (FAA), is solely responsible for managing the National Airspace System, including flight paths and altitudes. The development of satellite-based GPS “area navigation,” or RNAV, introduced as a replacement for ground-based radar tracking, has allowed for flights at lower altitudes and at closer time intervals. It has also led to a consolidation of formerly more dispersed flight paths, producing a “super-highway” of flights over defined areas. The resulting noise levels impair concentration, communication and learning during the day and disrupt sleep at night.

Efforts to track these consolidated flight paths and to force their dispersal are underway. However, the government-mandated statistics made available to the public, including day/night sound pressure level averages, fail to illuminate the peak exposure levels and their timing. Additionally, statistics are reported in A-weighted metrics only, which de-emphasize low-frequency sound components.
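To make the two limitations above concrete, here is a minimal sketch. It assumes the "day/night average" refers to the commonly used day-night average sound level (DNL), which adds a 10 dB penalty to levels between 22:00 and 07:00 before energy-averaging over 24 hours, and it uses the standard analytic A-weighting curve. The function names and the hourly levels are hypothetical and chosen only for illustration; they are not measurements from any airport.

```python
import math

NIGHT_HOURS = {22, 23, 0, 1, 2, 3, 4, 5, 6}  # 22:00-07:00
NIGHT_PENALTY_DB = 10.0


def day_night_level(hourly_leq_db):
    """Energy-average 24 hourly levels (dB), penalizing night hours by 10 dB."""
    if len(hourly_leq_db) != 24:
        raise ValueError("expected one level per hour of the day")
    energy = 0.0
    for hour, level in enumerate(hourly_leq_db):
        if hour in NIGHT_HOURS:
            level += NIGHT_PENALTY_DB
        energy += 10 ** (level / 10)
    return 10 * math.log10(energy / 24)


def a_weighting_db(freq_hz):
    """Standard A-weighting gain in dB (about 0 dB at 1 kHz)."""
    f2 = freq_hz ** 2
    numerator = 12194.0 ** 2 * f2 ** 2
    denominator = (
        (f2 + 20.6 ** 2)
        * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    return 20 * math.log10(numerator / denominator) + 2.00


# Hypothetical day 1: a constant 55 dB around the clock.
steady = [55.0] * 24

# Hypothetical day 2: a quiet 45 dB, except one loud hour starting at 03:00.
spiky = [45.0] * 24
spiky[3] = 64.8

print(f"steady day: {day_night_level(steady):.1f} dB")
print(f"spiky day:  {day_night_level(spiky):.1f} dB")
print(f"A-weighting at 50 Hz:  {a_weighting_db(50.0):.1f} dB")
print(f"A-weighting at 100 Hz: {a_weighting_db(100.0):.1f} dB")
```

Under these assumptions both days come out near 61.4 dB, even though one of them concentrates its noise in a single hour in the middle of the night. The A-weighting curve reduces a 100 Hz component by roughly 19 dB and a 50 Hz component by roughly 30 dB relative to 1 kHz, which is why low-frequency rumble contributes little to A-weighted statistics.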

Some airports offer a “noise complaint hotline”. At Logan Airport in Boston, this hotline is not staffed by a live person at night. Complainants may receive a letter several weeks after their call acknowledging the receipt and content of the complaint. However, gauging noise impact by the timing or number of complaints has serious flaws. Among them, sleep scientists know that people aroused from sleep by noise do not have their memory systems fully up and running. By morning, residents may be aware of having slept poorly but be unable to report what aroused them or how often. The documented effects of inadequate sleep include increased likelihood of crashes, industrial accidents, falls, inflammation, pain, weight gain, diabetes and heart disease. Sleep disruption by noise is not simply “annoyance”.

Breakthrough research from Harvard Medical School sleep scientists Jo M. Solet, Orfeu M. Buxton, and colleagues quantified arousals from sleep by playing a series of noise source recordings at rising decibel levels to subjects in the sleep lab. This work demonstrated individual differences among sleepers as well as enhanced protection from arousal by noise in the deepest stages of sleep. Deep sleep is known to decrease dramatically with age; ours is an aging population.

There is now also preliminary evidence, through the work of medical doctor Carter Sigmon and acoustical engineering leader Bonnie Schnitta, suggesting that certain diagnoses, for example PTSD, low thyroid function, and atrial fibrillation, carry extra vulnerabilities to noise exposure.

Acoustics experts, sleep scientists and public health advocates are working to inform policy change to protect our residents. This year two bills have been filed to require a National Academy of Medicine Consensus Report: HR 976, Air Traffic Noise and Pollution Expert Consensus Act by Congressman Stephen Lynch, and S2506, A Bill to Require a Study on the Health Impacts of Air Traffic Noise and Pollution by Senator Elizabeth Warren, both from Massachusetts.

See: https://www.congress.gov/bill/116th-congress/house-bill/976/all-info?r=27&s=1

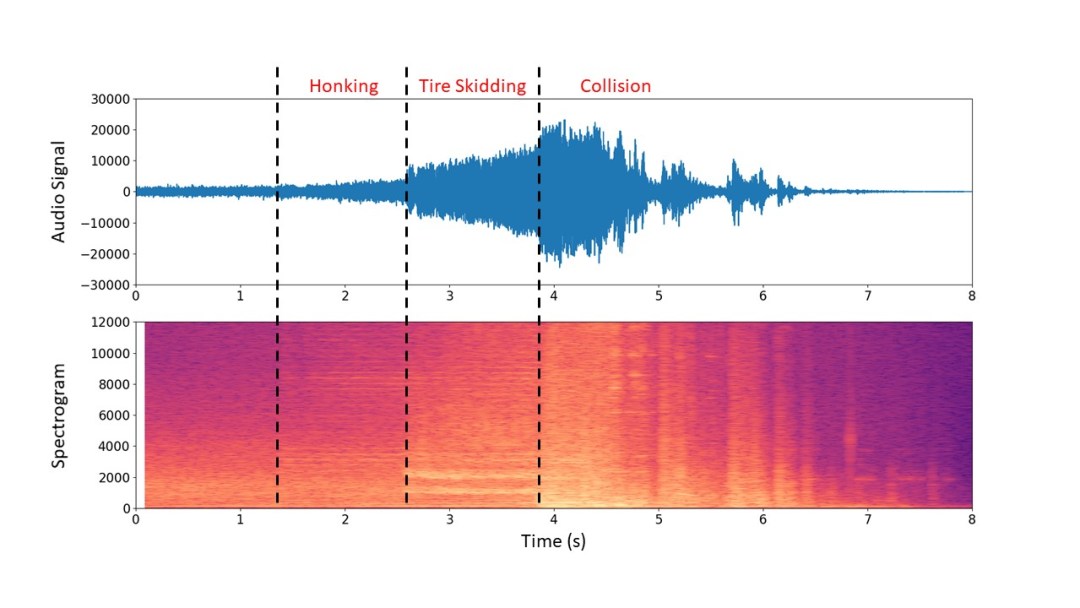
