4aPPa4 – Perception of Vowels and Consonants in Cochlear Implant Users

Melissa Malinasky – Melissa_Malinasky@rush.edu

Popular version of paper 4aPPa4

Presented Thursday morning, December 10 , 2020

179th ASA Meeting, Acoustics Virtually Everywhere

Understanding individual phoneme sounds is vital to speech perception. While cochlear implants (CI) can improve speech understanding, they also introduce distortions to sound input due to the limits of technology versus the human ear. Understanding which phonemes are most commonly misunderstood, and in what context this occurs can lead to the development of better signal processing strategies in Cis and better audiologic rehabilitation strategies post-implantation. The objective of this study was to evaluate perceptual differences in accuracy of specific vowels and consonants in experienced CI users. This study looked at 25 experienced adult CI users that were a part of a larger study by Shafiro et al. Participants were presented with a word, and given closed-set responses that tested their ability to distinguish between individual consonants of vowels. To determine if they can make these distinctions, each multiple-choice response was varied by one phonemic sound (i.e. bad vs bat, hud vs hid).

Cochlear implant users achieved 78% accuracy overall for consonant sounds compared to 97% for normal hearing participants. This shows that CI users are quite successful at identifying individual consonants sounds. Consonants at the beginning of the word were identified with 80.5% accuracy, while consonants at the end of the word were identified with 75.4% accuracy. This is not as great of a variation as we would have predicted.

For correct identification of vowels, cochlear implant users had 75% accuracy, while normal hearing users had 92% accuracy. Vowels were analyzed based on accuracy, as well as other vowels they were confused with. Some vowel sounds had over 80% accuracy, while others had as low as 45%.

Overall, this study shows that CI users have fairly good consonant and vowel recognition. These results are consistent with what has been previously reported by Rodvik et al. (2018). While CI users do perform quite well, they are still outperformed by their normal hearing, age-matched peers. The presence of a single consonant can affect someone’s entire understanding of a word, and it is important to understand where the most difficulty lies for CI users. Improvement in identification of some of these more difficult consonants can give this population greater access to language understanding. These findings can also help tailor auditory training programs, and help improve speech intelligibility in CI users.

References:

Hillenbrand, J., Getty, L. A., Clark, M. J., & Wheeler, K. (1995). Acoustic characteristics of American English vowels. The Journal of the Acoustical Society of America, 97(5), 3099–3111. doi: 10.1121/1.411872

House, A. S., Williams, C. E., Hecker, M. H. L., & Kryter, K. D. (1965). Articulation testing methods: Consonantal differentiation with a closer response set. The Journal of the Acoustical Society of America, 37(1), 158–166. https://doi.org/10.1121/1.1909295

Peterson, G. E., & Barney, H. L. (1952). Control Methods Used in a Study of the Vowels. The Journal of the Acoustical Society of America, 24(2), 175–184. doi: 10.1121/1.1906875

Rødvik AK, von Koss Torkildsen J, Wie OB, Storaker MA, Silvola JT. Consonant and Vowel Identification in Cochlear Implant Users Measured by Nonsense Words: A Systematic Review and Meta-Analysis. J Speech Lang Hear Res. 2018 Apr 17;61(4):1023-1050. doi: 10.1044/2018_JSLHR-H-16-0463. PMID: 29623340.

Shafiro V, Hebb M, Walker C, Oh J, Hsiao Y, Brown K, Sheft S, Li Y, Vasil K, Moberly AC. Development of the Basic Auditory Skills Evaluation Battery for Online Testing of Cochlear Implant Listeners. Am J Audiol. 2020 Sep 18;29(3S):577-590. doi: 10.1044/2020_AJA-19-00083. Epub 2020 Sep 18. PMID: 32946250.

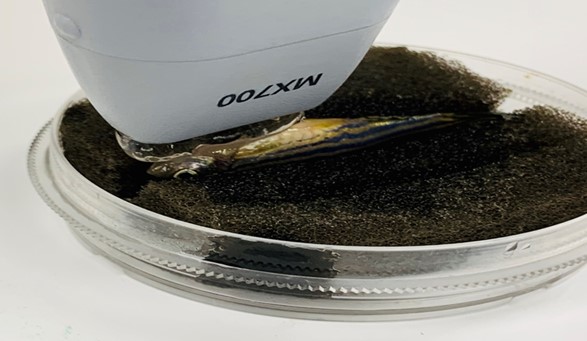

Figure 1. Experiment setup of zebrafish echocardiography.

Figure 1. Experiment setup of zebrafish echocardiography. Figure 2. Experimental setup of zebrafish ultrasound vibro-elastography.

Figure 2. Experimental setup of zebrafish ultrasound vibro-elastography.

Figure 1 – An animation of Speaker 1’s vowel space and how it changes over a period of 50 years. Each colored circle represents a different decade.

Figure 1 – An animation of Speaker 1’s vowel space and how it changes over a period of 50 years. Each colored circle represents a different decade. Figure 2: Average fundamental frequency of our speakers’ speech as they age.

Figure 2: Average fundamental frequency of our speakers’ speech as they age. Figure 3: Average speech rate in syllables per second of our speakers’ speech as they age.

Figure 3: Average speech rate in syllables per second of our speakers’ speech as they age.